text

stringlengths 3

14.4k

| source

stringclasses 273

values | url

stringlengths 47

172

| source_section

stringlengths 0

95

| file_type

stringclasses 1

value | id

stringlengths 3

6

|

|---|---|---|---|---|---|

Motion LoRAs are a collection of LoRAs that work with the `guoyww/animatediff-motion-adapter-v1-5-2` checkpoint. These LoRAs are responsible for adding specific types of motion to the animations.

```python

import torch

from diffusers import AnimateDiffPipeline, DDIMScheduler, MotionAdapter

from diffusers.utils import export_to_gif

# Load the motion adapter

adapter = MotionAdapter.from_pretrained("guoyww/animatediff-motion-adapter-v1-5-2", torch_dtype=torch.float16)

# load SD 1.5 based finetuned model

model_id = "SG161222/Realistic_Vision_V5.1_noVAE"

pipe = AnimateDiffPipeline.from_pretrained(model_id, motion_adapter=adapter, torch_dtype=torch.float16)

pipe.load_lora_weights(

"guoyww/animatediff-motion-lora-zoom-out", adapter_name="zoom-out"

)

scheduler = DDIMScheduler.from_pretrained(

model_id,

subfolder="scheduler",

clip_sample=False,

beta_schedule="linear",

timestep_spacing="linspace",

steps_offset=1,

)

pipe.scheduler = scheduler

# enable memory savings

pipe.enable_vae_slicing()

pipe.enable_model_cpu_offload()

output = pipe(

prompt=(

"masterpiece, bestquality, highlydetailed, ultradetailed, sunset, "

"orange sky, warm lighting, fishing boats, ocean waves seagulls, "

"rippling water, wharf, silhouette, serene atmosphere, dusk, evening glow, "

"golden hour, coastal landscape, seaside scenery"

),

negative_prompt="bad quality, worse quality",

num_frames=16,

guidance_scale=7.5,

num_inference_steps=25,

generator=torch.Generator("cpu").manual_seed(42),

)

frames = output.frames[0]

export_to_gif(frames, "animation.gif")

```

<table>

<tr>

<td><center>

masterpiece, bestquality, sunset.

<br>

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/animatediff-zoom-out-lora.gif"

alt="masterpiece, bestquality, sunset"

style="width: 300px;" />

</center></td>

</tr>

</table>

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/animatediff.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff/#using-motion-loras

|

#using-motion-loras

|

.md

|

158_12

|

You can also leverage the [PEFT](https://github.com/huggingface/peft) backend to combine Motion LoRA's and create more complex animations.

First install PEFT with

```shell

pip install peft

```

Then you can use the following code to combine Motion LoRAs.

```python

import torch

from diffusers import AnimateDiffPipeline, DDIMScheduler, MotionAdapter

from diffusers.utils import export_to_gif

# Load the motion adapter

adapter = MotionAdapter.from_pretrained("guoyww/animatediff-motion-adapter-v1-5-2", torch_dtype=torch.float16)

# load SD 1.5 based finetuned model

model_id = "SG161222/Realistic_Vision_V5.1_noVAE"

pipe = AnimateDiffPipeline.from_pretrained(model_id, motion_adapter=adapter, torch_dtype=torch.float16)

pipe.load_lora_weights(

"diffusers/animatediff-motion-lora-zoom-out", adapter_name="zoom-out",

)

pipe.load_lora_weights(

"diffusers/animatediff-motion-lora-pan-left", adapter_name="pan-left",

)

pipe.set_adapters(["zoom-out", "pan-left"], adapter_weights=[1.0, 1.0])

scheduler = DDIMScheduler.from_pretrained(

model_id,

subfolder="scheduler",

clip_sample=False,

timestep_spacing="linspace",

beta_schedule="linear",

steps_offset=1,

)

pipe.scheduler = scheduler

# enable memory savings

pipe.enable_vae_slicing()

pipe.enable_model_cpu_offload()

output = pipe(

prompt=(

"masterpiece, bestquality, highlydetailed, ultradetailed, sunset, "

"orange sky, warm lighting, fishing boats, ocean waves seagulls, "

"rippling water, wharf, silhouette, serene atmosphere, dusk, evening glow, "

"golden hour, coastal landscape, seaside scenery"

),

negative_prompt="bad quality, worse quality",

num_frames=16,

guidance_scale=7.5,

num_inference_steps=25,

generator=torch.Generator("cpu").manual_seed(42),

)

frames = output.frames[0]

export_to_gif(frames, "animation.gif")

```

<table>

<tr>

<td><center>

masterpiece, bestquality, sunset.

<br>

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/animatediff-zoom-out-pan-left-lora.gif"

alt="masterpiece, bestquality, sunset"

style="width: 300px;" />

</center></td>

</tr>

</table>

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/animatediff.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff/#using-motion-loras-with-peft

|

#using-motion-loras-with-peft

|

.md

|

158_13

|

[FreeInit: Bridging Initialization Gap in Video Diffusion Models](https://arxiv.org/abs/2312.07537) by Tianxing Wu, Chenyang Si, Yuming Jiang, Ziqi Huang, Ziwei Liu.

FreeInit is an effective method that improves temporal consistency and overall quality of videos generated using video-diffusion-models without any addition training. It can be applied to AnimateDiff, ModelScope, VideoCrafter and various other video generation models seamlessly at inference time, and works by iteratively refining the latent-initialization noise. More details can be found it the paper.

The following example demonstrates the usage of FreeInit.

```python

import torch

from diffusers import MotionAdapter, AnimateDiffPipeline, DDIMScheduler

from diffusers.utils import export_to_gif

adapter = MotionAdapter.from_pretrained("guoyww/animatediff-motion-adapter-v1-5-2")

model_id = "SG161222/Realistic_Vision_V5.1_noVAE"

pipe = AnimateDiffPipeline.from_pretrained(model_id, motion_adapter=adapter, torch_dtype=torch.float16).to("cuda")

pipe.scheduler = DDIMScheduler.from_pretrained(

model_id,

subfolder="scheduler",

beta_schedule="linear",

clip_sample=False,

timestep_spacing="linspace",

steps_offset=1

)

# enable memory savings

pipe.enable_vae_slicing()

pipe.enable_vae_tiling()

# enable FreeInit

# Refer to the enable_free_init documentation for a full list of configurable parameters

pipe.enable_free_init(method="butterworth", use_fast_sampling=True)

# run inference

output = pipe(

prompt="a panda playing a guitar, on a boat, in the ocean, high quality",

negative_prompt="bad quality, worse quality",

num_frames=16,

guidance_scale=7.5,

num_inference_steps=20,

generator=torch.Generator("cpu").manual_seed(666),

)

# disable FreeInit

pipe.disable_free_init()

frames = output.frames[0]

export_to_gif(frames, "animation.gif")

```

<Tip warning={true}>

FreeInit is not really free - the improved quality comes at the cost of extra computation. It requires sampling a few extra times depending on the `num_iters` parameter that is set when enabling it. Setting the `use_fast_sampling` parameter to `True` can improve the overall performance (at the cost of lower quality compared to when `use_fast_sampling=False` but still better results than vanilla video generation models).

</Tip>

<Tip>

Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

</Tip>

<table>

<tr>

<th align=center>Without FreeInit enabled</th>

<th align=center>With FreeInit enabled</th>

</tr>

<tr>

<td align=center>

panda playing a guitar

<br />

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/animatediff-no-freeinit.gif"

alt="panda playing a guitar"

style="width: 300px;" />

</td>

<td align=center>

panda playing a guitar

<br/>

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/animatediff-freeinit.gif"

alt="panda playing a guitar"

style="width: 300px;" />

</td>

</tr>

</table>

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/animatediff.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff/#using-freeinit

|

#using-freeinit

|

.md

|

158_14

|

[AnimateLCM](https://animatelcm.github.io/) is a motion module checkpoint and an [LCM LoRA](https://huggingface.co/docs/diffusers/using-diffusers/inference_with_lcm_lora) that have been created using a consistency learning strategy that decouples the distillation of the image generation priors and the motion generation priors.

```python

import torch

from diffusers import AnimateDiffPipeline, LCMScheduler, MotionAdapter

from diffusers.utils import export_to_gif

adapter = MotionAdapter.from_pretrained("wangfuyun/AnimateLCM")

pipe = AnimateDiffPipeline.from_pretrained("emilianJR/epiCRealism", motion_adapter=adapter)

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config, beta_schedule="linear")

pipe.load_lora_weights("wangfuyun/AnimateLCM", weight_name="sd15_lora_beta.safetensors", adapter_name="lcm-lora")

pipe.enable_vae_slicing()

pipe.enable_model_cpu_offload()

output = pipe(

prompt="A space rocket with trails of smoke behind it launching into space from the desert, 4k, high resolution",

negative_prompt="bad quality, worse quality, low resolution",

num_frames=16,

guidance_scale=1.5,

num_inference_steps=6,

generator=torch.Generator("cpu").manual_seed(0),

)

frames = output.frames[0]

export_to_gif(frames, "animatelcm.gif")

```

<table>

<tr>

<td><center>

A space rocket, 4K.

<br>

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/animatelcm-output.gif"

alt="A space rocket, 4K"

style="width: 300px;" />

</center></td>

</tr>

</table>

AnimateLCM is also compatible with existing [Motion LoRAs](https://huggingface.co/collections/dn6/animatediff-motion-loras-654cb8ad732b9e3cf4d3c17e).

```python

import torch

from diffusers import AnimateDiffPipeline, LCMScheduler, MotionAdapter

from diffusers.utils import export_to_gif

adapter = MotionAdapter.from_pretrained("wangfuyun/AnimateLCM")

pipe = AnimateDiffPipeline.from_pretrained("emilianJR/epiCRealism", motion_adapter=adapter)

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config, beta_schedule="linear")

pipe.load_lora_weights("wangfuyun/AnimateLCM", weight_name="sd15_lora_beta.safetensors", adapter_name="lcm-lora")

pipe.load_lora_weights("guoyww/animatediff-motion-lora-tilt-up", adapter_name="tilt-up")

pipe.set_adapters(["lcm-lora", "tilt-up"], [1.0, 0.8])

pipe.enable_vae_slicing()

pipe.enable_model_cpu_offload()

output = pipe(

prompt="A space rocket with trails of smoke behind it launching into space from the desert, 4k, high resolution",

negative_prompt="bad quality, worse quality, low resolution",

num_frames=16,

guidance_scale=1.5,

num_inference_steps=6,

generator=torch.Generator("cpu").manual_seed(0),

)

frames = output.frames[0]

export_to_gif(frames, "animatelcm-motion-lora.gif")

```

<table>

<tr>

<td><center>

A space rocket, 4K.

<br>

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/animatelcm-motion-lora.gif"

alt="A space rocket, 4K"

style="width: 300px;" />

</center></td>

</tr>

</table>

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/animatediff.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff/#using-animatelcm

|

#using-animatelcm

|

.md

|

158_15

|

[FreeNoise: Tuning-Free Longer Video Diffusion via Noise Rescheduling](https://arxiv.org/abs/2310.15169) by Haonan Qiu, Menghan Xia, Yong Zhang, Yingqing He, Xintao Wang, Ying Shan, Ziwei Liu.

FreeNoise is a sampling mechanism that can generate longer videos with short-video generation models by employing noise-rescheduling, temporal attention over sliding windows, and weighted averaging of latent frames. It also can be used with multiple prompts to allow for interpolated video generations. More details are available in the paper.

The currently supported AnimateDiff pipelines that can be used with FreeNoise are:

- [`AnimateDiffPipeline`]

- [`AnimateDiffControlNetPipeline`]

- [`AnimateDiffVideoToVideoPipeline`]

- [`AnimateDiffVideoToVideoControlNetPipeline`]

In order to use FreeNoise, a single line needs to be added to the inference code after loading your pipelines.

```diff

+ pipe.enable_free_noise()

```

After this, either a single prompt could be used, or multiple prompts can be passed as a dictionary of integer-string pairs. The integer keys of the dictionary correspond to the frame index at which the influence of that prompt would be maximum. Each frame index should map to a single string prompt. The prompts for intermediate frame indices, that are not passed in the dictionary, are created by interpolating between the frame prompts that are passed. By default, simple linear interpolation is used. However, you can customize this behaviour with a callback to the `prompt_interpolation_callback` parameter when enabling FreeNoise.

Full example:

```python

import torch

from diffusers import AutoencoderKL, AnimateDiffPipeline, LCMScheduler, MotionAdapter

from diffusers.utils import export_to_video, load_image

# Load pipeline

dtype = torch.float16

motion_adapter = MotionAdapter.from_pretrained("wangfuyun/AnimateLCM", torch_dtype=dtype)

vae = AutoencoderKL.from_pretrained("stabilityai/sd-vae-ft-mse", torch_dtype=dtype)

pipe = AnimateDiffPipeline.from_pretrained("emilianJR/epiCRealism", motion_adapter=motion_adapter, vae=vae, torch_dtype=dtype)

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config, beta_schedule="linear")

pipe.load_lora_weights(

"wangfuyun/AnimateLCM", weight_name="AnimateLCM_sd15_t2v_lora.safetensors", adapter_name="lcm_lora"

)

pipe.set_adapters(["lcm_lora"], [0.8])

# Enable FreeNoise for long prompt generation

pipe.enable_free_noise(context_length=16, context_stride=4)

pipe.to("cuda")

# Can be a single prompt, or a dictionary with frame timesteps

prompt = {

0: "A caterpillar on a leaf, high quality, photorealistic",

40: "A caterpillar transforming into a cocoon, on a leaf, near flowers, photorealistic",

80: "A cocoon on a leaf, flowers in the backgrond, photorealistic",

120: "A cocoon maturing and a butterfly being born, flowers and leaves visible in the background, photorealistic",

160: "A beautiful butterfly, vibrant colors, sitting on a leaf, flowers in the background, photorealistic",

200: "A beautiful butterfly, flying away in a forest, photorealistic",

240: "A cyberpunk butterfly, neon lights, glowing",

}

negative_prompt = "bad quality, worst quality, jpeg artifacts"

# Run inference

output = pipe(

prompt=prompt,

negative_prompt=negative_prompt,

num_frames=256,

guidance_scale=2.5,

num_inference_steps=10,

generator=torch.Generator("cpu").manual_seed(0),

)

# Save video

frames = output.frames[0]

export_to_video(frames, "output.mp4", fps=16)

```

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/animatediff.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff/#using-freenoise

|

#using-freenoise

|

.md

|

158_16

|

Since FreeNoise processes multiple frames together, there are parts in the modeling where the memory required exceeds that available on normal consumer GPUs. The main memory bottlenecks that we identified are spatial and temporal attention blocks, upsampling and downsampling blocks, resnet blocks and feed-forward layers. Since most of these blocks operate effectively only on the channel/embedding dimension, one can perform chunked inference across the batch dimensions. The batch dimension in AnimateDiff are either spatial (`[B x F, H x W, C]`) or temporal (`B x H x W, F, C`) in nature (note that it may seem counter-intuitive, but the batch dimension here are correct, because spatial blocks process across the `B x F` dimension while the temporal blocks process across the `B x H x W` dimension). We introduce a `SplitInferenceModule` that makes it easier to chunk across any dimension and perform inference. This saves a lot of memory but comes at the cost of requiring more time for inference.

```diff

# Load pipeline and adapters

# ...

+ pipe.enable_free_noise_split_inference()

+ pipe.unet.enable_forward_chunking(16)

```

The call to `pipe.enable_free_noise_split_inference` method accepts two parameters: `spatial_split_size` (defaults to `256`) and `temporal_split_size` (defaults to `16`). These can be configured based on how much VRAM you have available. A lower split size results in lower memory usage but slower inference, whereas a larger split size results in faster inference at the cost of more memory.

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/animatediff.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff/#freenoise-memory-savings

|

#freenoise-memory-savings

|

.md

|

158_17

|

`diffusers>=0.30.0` supports loading the AnimateDiff checkpoints into the `MotionAdapter` in their original format via `from_single_file`

```python

from diffusers import MotionAdapter

ckpt_path = "https://huggingface.co/Lightricks/LongAnimateDiff/blob/main/lt_long_mm_32_frames.ckpt"

adapter = MotionAdapter.from_single_file(ckpt_path, torch_dtype=torch.float16)

pipe = AnimateDiffPipeline.from_pretrained("emilianJR/epiCRealism", motion_adapter=adapter)

```

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/animatediff.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff/#using-fromsinglefile-with-the-motionadapter

|

#using-fromsinglefile-with-the-motionadapter

|

.md

|

158_18

|

AnimateDiffPipeline

Pipeline for text-to-video generation.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.StableDiffusionLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

tokenizer (`CLIPTokenizer`):

A [`~transformers.CLIPTokenizer`] to tokenize text.

unet ([`UNet2DConditionModel`]):

A [`UNet2DConditionModel`] used to create a UNetMotionModel to denoise the encoded video latents.

motion_adapter ([`MotionAdapter`]):

A [`MotionAdapter`] to be used in combination with `unet` to denoise the encoded video latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/animatediff.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff/#animatediffpipeline

|

#animatediffpipeline

|

.md

|

158_19

|

AnimateDiffControlNetPipeline

Pipeline for text-to-video generation with ControlNet guidance.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.StableDiffusionLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

tokenizer (`CLIPTokenizer`):

A [`~transformers.CLIPTokenizer`] to tokenize text.

unet ([`UNet2DConditionModel`]):

A [`UNet2DConditionModel`] used to create a UNetMotionModel to denoise the encoded video latents.

motion_adapter ([`MotionAdapter`]):

A [`MotionAdapter`] to be used in combination with `unet` to denoise the encoded video latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/animatediff.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff/#animatediffcontrolnetpipeline

|

#animatediffcontrolnetpipeline

|

.md

|

158_20

|

AnimateDiffSparseControlNetPipeline

Pipeline for controlled text-to-video generation using the method described in [SparseCtrl: Adding Sparse Controls

to Text-to-Video Diffusion Models](https://arxiv.org/abs/2311.16933).

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.StableDiffusionLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

tokenizer (`CLIPTokenizer`):

A [`~transformers.CLIPTokenizer`] to tokenize text.

unet ([`UNet2DConditionModel`]):

A [`UNet2DConditionModel`] used to create a UNetMotionModel to denoise the encoded video latents.

motion_adapter ([`MotionAdapter`]):

A [`MotionAdapter`] to be used in combination with `unet` to denoise the encoded video latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/animatediff.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff/#animatediffsparsecontrolnetpipeline

|

#animatediffsparsecontrolnetpipeline

|

.md

|

158_21

|

AnimateDiffSDXLPipeline

Pipeline for text-to-video generation using Stable Diffusion XL.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods the

library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.)

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.FromSingleFileMixin.from_single_file`] for loading `.ckpt` files

- [`~loaders.StableDiffusionXLLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionXLLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModel`]):

Frozen text-encoder. Stable Diffusion XL uses the text portion of

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModel), specifically

the [clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14) variant.

text_encoder_2 ([` CLIPTextModelWithProjection`]):

Second frozen text-encoder. Stable Diffusion XL uses the text and pool portion of

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModelWithProjection),

specifically the

[laion/CLIP-ViT-bigG-14-laion2B-39B-b160k](https://huggingface.co/laion/CLIP-ViT-bigG-14-laion2B-39B-b160k)

variant.

tokenizer (`CLIPTokenizer`):

Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

tokenizer_2 (`CLIPTokenizer`):

Second Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

unet ([`UNet2DConditionModel`]):

Conditional U-Net architecture to denoise the encoded image latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

force_zeros_for_empty_prompt (`bool`, *optional*, defaults to `"True"`):

Whether the negative prompt embeddings shall be forced to always be set to 0. Also see the config of

`stabilityai/stable-diffusion-xl-base-1-0`.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/animatediff.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff/#animatediffsdxlpipeline

|

#animatediffsdxlpipeline

|

.md

|

158_22

|

AnimateDiffVideoToVideoPipeline

Pipeline for video-to-video generation.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.StableDiffusionLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

tokenizer (`CLIPTokenizer`):

A [`~transformers.CLIPTokenizer`] to tokenize text.

unet ([`UNet2DConditionModel`]):

A [`UNet2DConditionModel`] used to create a UNetMotionModel to denoise the encoded video latents.

motion_adapter ([`MotionAdapter`]):

A [`MotionAdapter`] to be used in combination with `unet` to denoise the encoded video latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/animatediff.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff/#animatediffvideotovideopipeline

|

#animatediffvideotovideopipeline

|

.md

|

158_23

|

AnimateDiffVideoToVideoControlNetPipeline

Pipeline for video-to-video generation with ControlNet guidance.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.StableDiffusionLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

tokenizer (`CLIPTokenizer`):

A [`~transformers.CLIPTokenizer`] to tokenize text.

unet ([`UNet2DConditionModel`]):

A [`UNet2DConditionModel`] used to create a UNetMotionModel to denoise the encoded video latents.

motion_adapter ([`MotionAdapter`]):

A [`MotionAdapter`] to be used in combination with `unet` to denoise the encoded video latents.

controlnet ([`ControlNetModel`] or `List[ControlNetModel]` or `Tuple[ControlNetModel]` or `MultiControlNetModel`):

Provides additional conditioning to the `unet` during the denoising process. If you set multiple

ControlNets as a list, the outputs from each ControlNet are added together to create one combined

additional conditioning.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/animatediff.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff/#animatediffvideotovideocontrolnetpipeline

|

#animatediffvideotovideocontrolnetpipeline

|

.md

|

158_24

|

AnimateDiffPipelineOutput

Output class for AnimateDiff pipelines.

Args:

frames (`torch.Tensor`, `np.ndarray`, or List[List[PIL.Image.Image]]):

List of video outputs - It can be a nested list of length `batch_size,` with each sub-list containing

denoised

PIL image sequences of length `num_frames.` It can also be a NumPy array or Torch tensor of shape

`(batch_size, num_frames, channels, height, width)`

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/animatediff.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff/#animatediffpipelineoutput

|

#animatediffpipelineoutput

|

.md

|

158_25

|

<!--Copyright 2024 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/text_to_video_zero.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/text_to_video_zero/

|

.md

|

159_0

|

|

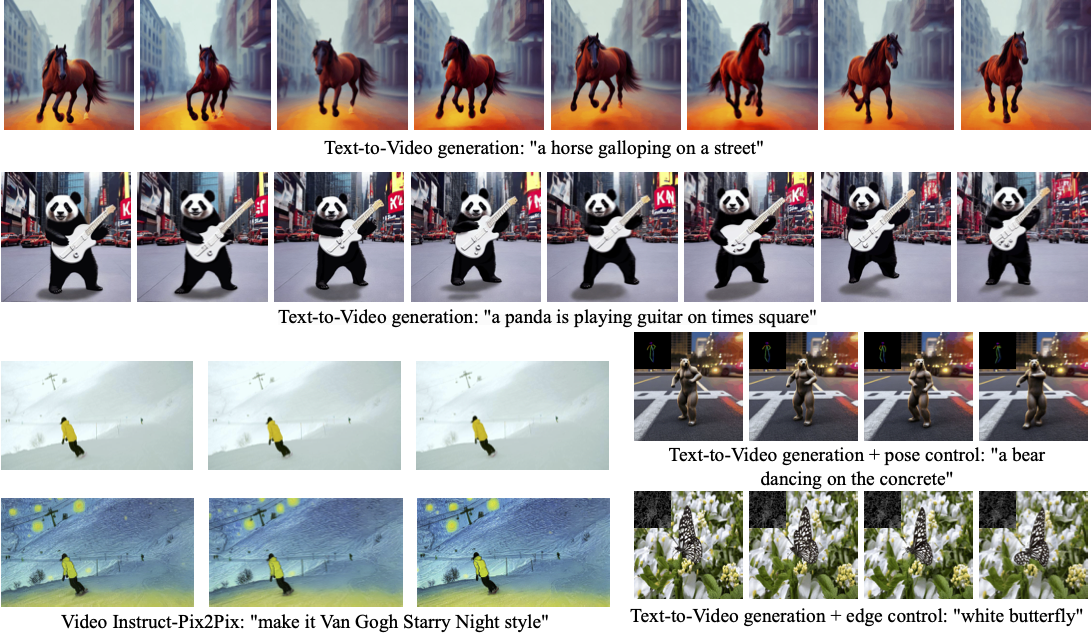

[Text2Video-Zero: Text-to-Image Diffusion Models are Zero-Shot Video Generators](https://huggingface.co/papers/2303.13439) is by Levon Khachatryan, Andranik Movsisyan, Vahram Tadevosyan, Roberto Henschel, [Zhangyang Wang](https://www.ece.utexas.edu/people/faculty/atlas-wang), Shant Navasardyan, [Humphrey Shi](https://www.humphreyshi.com).

Text2Video-Zero enables zero-shot video generation using either:

1. A textual prompt

2. A prompt combined with guidance from poses or edges

3. Video Instruct-Pix2Pix (instruction-guided video editing)

Results are temporally consistent and closely follow the guidance and textual prompts.

The abstract from the paper is:

*Recent text-to-video generation approaches rely on computationally heavy training and require large-scale video datasets. In this paper, we introduce a new task of zero-shot text-to-video generation and propose a low-cost approach (without any training or optimization) by leveraging the power of existing text-to-image synthesis methods (e.g., Stable Diffusion), making them suitable for the video domain.

Our key modifications include (i) enriching the latent codes of the generated frames with motion dynamics to keep the global scene and the background time consistent; and (ii) reprogramming frame-level self-attention using a new cross-frame attention of each frame on the first frame, to preserve the context, appearance, and identity of the foreground object.

Experiments show that this leads to low overhead, yet high-quality and remarkably consistent video generation. Moreover, our approach is not limited to text-to-video synthesis but is also applicable to other tasks such as conditional and content-specialized video generation, and Video Instruct-Pix2Pix, i.e., instruction-guided video editing.

As experiments show, our method performs comparably or sometimes better than recent approaches, despite not being trained on additional video data.*

You can find additional information about Text2Video-Zero on the [project page](https://text2video-zero.github.io/), [paper](https://arxiv.org/abs/2303.13439), and [original codebase](https://github.com/Picsart-AI-Research/Text2Video-Zero).

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/text_to_video_zero.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/text_to_video_zero/#text2video-zero

|

#text2video-zero

|

.md

|

159_1

|

To generate a video from prompt, run the following Python code:

```python

import torch

from diffusers import TextToVideoZeroPipeline

import imageio

model_id = "stable-diffusion-v1-5/stable-diffusion-v1-5"

pipe = TextToVideoZeroPipeline.from_pretrained(model_id, torch_dtype=torch.float16).to("cuda")

prompt = "A panda is playing guitar on times square"

result = pipe(prompt=prompt).images

result = [(r * 255).astype("uint8") for r in result]

imageio.mimsave("video.mp4", result, fps=4)

```

You can change these parameters in the pipeline call:

* Motion field strength (see the [paper](https://arxiv.org/abs/2303.13439), Sect. 3.3.1):

* `motion_field_strength_x` and `motion_field_strength_y`. Default: `motion_field_strength_x=12`, `motion_field_strength_y=12`

* `T` and `T'` (see the [paper](https://arxiv.org/abs/2303.13439), Sect. 3.3.1)

* `t0` and `t1` in the range `{0, ..., num_inference_steps}`. Default: `t0=45`, `t1=48`

* Video length:

* `video_length`, the number of frames video_length to be generated. Default: `video_length=8`

We can also generate longer videos by doing the processing in a chunk-by-chunk manner:

```python

import torch

from diffusers import TextToVideoZeroPipeline

import numpy as np

model_id = "stable-diffusion-v1-5/stable-diffusion-v1-5"

pipe = TextToVideoZeroPipeline.from_pretrained(model_id, torch_dtype=torch.float16).to("cuda")

seed = 0

video_length = 24 #24 ÷ 4fps = 6 seconds

chunk_size = 8

prompt = "A panda is playing guitar on times square"

# Generate the video chunk-by-chunk

result = []

chunk_ids = np.arange(0, video_length, chunk_size - 1)

generator = torch.Generator(device="cuda")

for i in range(len(chunk_ids)):

print(f"Processing chunk {i + 1} / {len(chunk_ids)}")

ch_start = chunk_ids[i]

ch_end = video_length if i == len(chunk_ids) - 1 else chunk_ids[i + 1]

# Attach the first frame for Cross Frame Attention

frame_ids = [0] + list(range(ch_start, ch_end))

# Fix the seed for the temporal consistency

generator.manual_seed(seed)

output = pipe(prompt=prompt, video_length=len(frame_ids), generator=generator, frame_ids=frame_ids)

result.append(output.images[1:])

# Concatenate chunks and save

result = np.concatenate(result)

result = [(r * 255).astype("uint8") for r in result]

imageio.mimsave("video.mp4", result, fps=4)

```

- #### SDXL Support

In order to use the SDXL model when generating a video from prompt, use the `TextToVideoZeroSDXLPipeline` pipeline:

```python

import torch

from diffusers import TextToVideoZeroSDXLPipeline

model_id = "stabilityai/stable-diffusion-xl-base-1.0"

pipe = TextToVideoZeroSDXLPipeline.from_pretrained(

model_id, torch_dtype=torch.float16, variant="fp16", use_safetensors=True

).to("cuda")

```

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/text_to_video_zero.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/text_to_video_zero/#text-to-video

|

#text-to-video

|

.md

|

159_2

|

To generate a video from prompt with additional pose control

1. Download a demo video

```python

from huggingface_hub import hf_hub_download

filename = "__assets__/poses_skeleton_gifs/dance1_corr.mp4"

repo_id = "PAIR/Text2Video-Zero"

video_path = hf_hub_download(repo_type="space", repo_id=repo_id, filename=filename)

```

2. Read video containing extracted pose images

```python

from PIL import Image

import imageio

reader = imageio.get_reader(video_path, "ffmpeg")

frame_count = 8

pose_images = [Image.fromarray(reader.get_data(i)) for i in range(frame_count)]

```

To extract pose from actual video, read [ControlNet documentation](controlnet).

3. Run `StableDiffusionControlNetPipeline` with our custom attention processor

```python

import torch

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

from diffusers.pipelines.text_to_video_synthesis.pipeline_text_to_video_zero import CrossFrameAttnProcessor

model_id = "stable-diffusion-v1-5/stable-diffusion-v1-5"

controlnet = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-openpose", torch_dtype=torch.float16)

pipe = StableDiffusionControlNetPipeline.from_pretrained(

model_id, controlnet=controlnet, torch_dtype=torch.float16

).to("cuda")

# Set the attention processor

pipe.unet.set_attn_processor(CrossFrameAttnProcessor(batch_size=2))

pipe.controlnet.set_attn_processor(CrossFrameAttnProcessor(batch_size=2))

# fix latents for all frames

latents = torch.randn((1, 4, 64, 64), device="cuda", dtype=torch.float16).repeat(len(pose_images), 1, 1, 1)

prompt = "Darth Vader dancing in a desert"

result = pipe(prompt=[prompt] * len(pose_images), image=pose_images, latents=latents).images

imageio.mimsave("video.mp4", result, fps=4)

```

- #### SDXL Support

Since our attention processor also works with SDXL, it can be utilized to generate a video from prompt using ControlNet models powered by SDXL:

```python

import torch

from diffusers import StableDiffusionXLControlNetPipeline, ControlNetModel

from diffusers.pipelines.text_to_video_synthesis.pipeline_text_to_video_zero import CrossFrameAttnProcessor

controlnet_model_id = 'thibaud/controlnet-openpose-sdxl-1.0'

model_id = 'stabilityai/stable-diffusion-xl-base-1.0'

controlnet = ControlNetModel.from_pretrained(controlnet_model_id, torch_dtype=torch.float16)

pipe = StableDiffusionControlNetPipeline.from_pretrained(

model_id, controlnet=controlnet, torch_dtype=torch.float16

).to('cuda')

# Set the attention processor

pipe.unet.set_attn_processor(CrossFrameAttnProcessor(batch_size=2))

pipe.controlnet.set_attn_processor(CrossFrameAttnProcessor(batch_size=2))

# fix latents for all frames

latents = torch.randn((1, 4, 128, 128), device="cuda", dtype=torch.float16).repeat(len(pose_images), 1, 1, 1)

prompt = "Darth Vader dancing in a desert"

result = pipe(prompt=[prompt] * len(pose_images), image=pose_images, latents=latents).images

imageio.mimsave("video.mp4", result, fps=4)

```

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/text_to_video_zero.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/text_to_video_zero/#text-to-video-with-pose-control

|

#text-to-video-with-pose-control

|

.md

|

159_3

|

To generate a video from prompt with additional Canny edge control, follow the same steps described above for pose-guided generation using [Canny edge ControlNet model](https://huggingface.co/lllyasviel/sd-controlnet-canny).

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/text_to_video_zero.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/text_to_video_zero/#text-to-video-with-edge-control

|

#text-to-video-with-edge-control

|

.md

|

159_4

|

To perform text-guided video editing (with [InstructPix2Pix](pix2pix)):

1. Download a demo video

```python

from huggingface_hub import hf_hub_download

filename = "__assets__/pix2pix video/camel.mp4"

repo_id = "PAIR/Text2Video-Zero"

video_path = hf_hub_download(repo_type="space", repo_id=repo_id, filename=filename)

```

2. Read video from path

```python

from PIL import Image

import imageio

reader = imageio.get_reader(video_path, "ffmpeg")

frame_count = 8

video = [Image.fromarray(reader.get_data(i)) for i in range(frame_count)]

```

3. Run `StableDiffusionInstructPix2PixPipeline` with our custom attention processor

```python

import torch

from diffusers import StableDiffusionInstructPix2PixPipeline

from diffusers.pipelines.text_to_video_synthesis.pipeline_text_to_video_zero import CrossFrameAttnProcessor

model_id = "timbrooks/instruct-pix2pix"

pipe = StableDiffusionInstructPix2PixPipeline.from_pretrained(model_id, torch_dtype=torch.float16).to("cuda")

pipe.unet.set_attn_processor(CrossFrameAttnProcessor(batch_size=3))

prompt = "make it Van Gogh Starry Night style"

result = pipe(prompt=[prompt] * len(video), image=video).images

imageio.mimsave("edited_video.mp4", result, fps=4)

```

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/text_to_video_zero.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/text_to_video_zero/#video-instruct-pix2pix

|

#video-instruct-pix2pix

|

.md

|

159_5

|

Methods **Text-To-Video**, **Text-To-Video with Pose Control** and **Text-To-Video with Edge Control**

can run with custom [DreamBooth](../../training/dreambooth) models, as shown below for

[Canny edge ControlNet model](https://huggingface.co/lllyasviel/sd-controlnet-canny) and

[Avatar style DreamBooth](https://huggingface.co/PAIR/text2video-zero-controlnet-canny-avatar) model:

1. Download a demo video

```python

from huggingface_hub import hf_hub_download

filename = "__assets__/canny_videos_mp4/girl_turning.mp4"

repo_id = "PAIR/Text2Video-Zero"

video_path = hf_hub_download(repo_type="space", repo_id=repo_id, filename=filename)

```

2. Read video from path

```python

from PIL import Image

import imageio

reader = imageio.get_reader(video_path, "ffmpeg")

frame_count = 8

canny_edges = [Image.fromarray(reader.get_data(i)) for i in range(frame_count)]

```

3. Run `StableDiffusionControlNetPipeline` with custom trained DreamBooth model

```python

import torch

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

from diffusers.pipelines.text_to_video_synthesis.pipeline_text_to_video_zero import CrossFrameAttnProcessor

# set model id to custom model

model_id = "PAIR/text2video-zero-controlnet-canny-avatar"

controlnet = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-canny", torch_dtype=torch.float16)

pipe = StableDiffusionControlNetPipeline.from_pretrained(

model_id, controlnet=controlnet, torch_dtype=torch.float16

).to("cuda")

# Set the attention processor

pipe.unet.set_attn_processor(CrossFrameAttnProcessor(batch_size=2))

pipe.controlnet.set_attn_processor(CrossFrameAttnProcessor(batch_size=2))

# fix latents for all frames

latents = torch.randn((1, 4, 64, 64), device="cuda", dtype=torch.float16).repeat(len(canny_edges), 1, 1, 1)

prompt = "oil painting of a beautiful girl avatar style"

result = pipe(prompt=[prompt] * len(canny_edges), image=canny_edges, latents=latents).images

imageio.mimsave("video.mp4", result, fps=4)

```

You can filter out some available DreamBooth-trained models with [this link](https://huggingface.co/models?search=dreambooth).

<Tip>

Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

</Tip>

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/text_to_video_zero.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/text_to_video_zero/#dreambooth-specialization

|

#dreambooth-specialization

|

.md

|

159_6

|

TextToVideoZeroPipeline

Pipeline for zero-shot text-to-video generation using Stable Diffusion.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

tokenizer (`CLIPTokenizer`):

A [`~transformers.CLIPTokenizer`] to tokenize text.

unet ([`UNet2DConditionModel`]):

A [`UNet3DConditionModel`] to denoise the encoded video latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

safety_checker ([`StableDiffusionSafetyChecker`]):

Classification module that estimates whether generated images could be considered offensive or harmful.

Please refer to the [model card](https://huggingface.co/stable-diffusion-v1-5/stable-diffusion-v1-5) for

more details about a model's potential harms.

feature_extractor ([`CLIPImageProcessor`]):

A [`CLIPImageProcessor`] to extract features from generated images; used as inputs to the `safety_checker`.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/text_to_video_zero.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/text_to_video_zero/#texttovideozeropipeline

|

#texttovideozeropipeline

|

.md

|

159_7

|

TextToVideoZeroSDXLPipeline

Pipeline for zero-shot text-to-video generation using Stable Diffusion XL.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModel`]):

Frozen text-encoder. Stable Diffusion XL uses the text portion of

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModel), specifically

the [clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14) variant.

text_encoder_2 ([` CLIPTextModelWithProjection`]):

Second frozen text-encoder. Stable Diffusion XL uses the text and pool portion of

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModelWithProjection),

specifically the

[laion/CLIP-ViT-bigG-14-laion2B-39B-b160k](https://huggingface.co/laion/CLIP-ViT-bigG-14-laion2B-39B-b160k)

variant.

tokenizer (`CLIPTokenizer`):

Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

tokenizer_2 (`CLIPTokenizer`):

Second Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

unet ([`UNet2DConditionModel`]): Conditional U-Net architecture to denoise the encoded image latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/text_to_video_zero.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/text_to_video_zero/#texttovideozerosdxlpipeline

|

#texttovideozerosdxlpipeline

|

.md

|

159_8

|

TextToVideoPipelineOutput

Output class for zero-shot text-to-video pipeline.

Args:

images (`[List[PIL.Image.Image]`, `np.ndarray`]):

List of denoised PIL images of length `batch_size` or NumPy array of shape `(batch_size, height, width,

num_channels)`.

nsfw_content_detected (`[List[bool]]`):

List indicating whether the corresponding generated image contains "not-safe-for-work" (nsfw) content or

`None` if safety checking could not be performed.

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/text_to_video_zero.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/text_to_video_zero/#texttovideopipelineoutput

|

#texttovideopipelineoutput

|

.md

|

159_9

|

<!--Copyright 2024 The HuggingFace Team and Tencent Hunyuan Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/controlnet_hunyuandit.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/controlnet_hunyuandit/

|

.md

|

160_0

|

|

HunyuanDiTControlNetPipeline is an implementation of ControlNet for [Hunyuan-DiT](https://arxiv.org/abs/2405.08748).

ControlNet was introduced in [Adding Conditional Control to Text-to-Image Diffusion Models](https://huggingface.co/papers/2302.05543) by Lvmin Zhang, Anyi Rao, and Maneesh Agrawala.

With a ControlNet model, you can provide an additional control image to condition and control Hunyuan-DiT generation. For example, if you provide a depth map, the ControlNet model generates an image that'll preserve the spatial information from the depth map. It is a more flexible and accurate way to control the image generation process.

The abstract from the paper is:

*We present ControlNet, a neural network architecture to add spatial conditioning controls to large, pretrained text-to-image diffusion models. ControlNet locks the production-ready large diffusion models, and reuses their deep and robust encoding layers pretrained with billions of images as a strong backbone to learn a diverse set of conditional controls. The neural architecture is connected with "zero convolutions" (zero-initialized convolution layers) that progressively grow the parameters from zero and ensure that no harmful noise could affect the finetuning. We test various conditioning controls, eg, edges, depth, segmentation, human pose, etc, with Stable Diffusion, using single or multiple conditions, with or without prompts. We show that the training of ControlNets is robust with small (<50k) and large (>1m) datasets. Extensive results show that ControlNet may facilitate wider applications to control image diffusion models.*

This code is implemented by Tencent Hunyuan Team. You can find pre-trained checkpoints for Hunyuan-DiT ControlNets on [Tencent Hunyuan](https://huggingface.co/Tencent-Hunyuan).

<Tip>

Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

</Tip>

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/controlnet_hunyuandit.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/controlnet_hunyuandit/#controlnet-with-hunyuan-dit

|

#controlnet-with-hunyuan-dit

|

.md

|

160_1

|

HunyuanDiTControlNetPipeline

Pipeline for English/Chinese-to-image generation using HunyuanDiT.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods the

library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.)

HunyuanDiT uses two text encoders: [mT5](https://huggingface.co/google/mt5-base) and [bilingual CLIP](fine-tuned by

ourselves)

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations. We use

`sdxl-vae-fp16-fix`.

text_encoder (Optional[`~transformers.BertModel`, `~transformers.CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

HunyuanDiT uses a fine-tuned [bilingual CLIP].

tokenizer (Optional[`~transformers.BertTokenizer`, `~transformers.CLIPTokenizer`]):

A `BertTokenizer` or `CLIPTokenizer` to tokenize text.

transformer ([`HunyuanDiT2DModel`]):

The HunyuanDiT model designed by Tencent Hunyuan.

text_encoder_2 (`T5EncoderModel`):

The mT5 embedder. Specifically, it is 't5-v1_1-xxl'.

tokenizer_2 (`MT5Tokenizer`):

The tokenizer for the mT5 embedder.

scheduler ([`DDPMScheduler`]):

A scheduler to be used in combination with HunyuanDiT to denoise the encoded image latents.

controlnet ([`HunyuanDiT2DControlNetModel`] or `List[HunyuanDiT2DControlNetModel]` or [`HunyuanDiT2DControlNetModel`]):

Provides additional conditioning to the `unet` during the denoising process. If you set multiple

ControlNets as a list, the outputs from each ControlNet are added together to create one combined

additional conditioning.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/controlnet_hunyuandit.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/controlnet_hunyuandit/#hunyuanditcontrolnetpipeline

|

#hunyuanditcontrolnetpipeline

|

.md

|

160_2

|

<!--Copyright 2024 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/kandinsky3.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/kandinsky3/

|

.md

|

161_0

|

|

Kandinsky 3 is created by [Vladimir Arkhipkin](https://github.com/oriBetelgeuse),[Anastasia Maltseva](https://github.com/NastyaMittseva),[Igor Pavlov](https://github.com/boomb0om),[Andrei Filatov](https://github.com/anvilarth),[Arseniy Shakhmatov](https://github.com/cene555),[Andrey Kuznetsov](https://github.com/kuznetsoffandrey),[Denis Dimitrov](https://github.com/denndimitrov), [Zein Shaheen](https://github.com/zeinsh)

The description from it's GitHub page:

*Kandinsky 3.0 is an open-source text-to-image diffusion model built upon the Kandinsky2-x model family. In comparison to its predecessors, enhancements have been made to the text understanding and visual quality of the model, achieved by increasing the size of the text encoder and Diffusion U-Net models, respectively.*

Its architecture includes 3 main components:

1. [FLAN-UL2](https://huggingface.co/google/flan-ul2), which is an encoder decoder model based on the T5 architecture.

2. New U-Net architecture featuring BigGAN-deep blocks doubles depth while maintaining the same number of parameters.

3. Sber-MoVQGAN is a decoder proven to have superior results in image restoration.

The original codebase can be found at [ai-forever/Kandinsky-3](https://github.com/ai-forever/Kandinsky-3).

<Tip>

Check out the [Kandinsky Community](https://huggingface.co/kandinsky-community) organization on the Hub for the official model checkpoints for tasks like text-to-image, image-to-image, and inpainting.

</Tip>

<Tip>

Make sure to check out the schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

</Tip>

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/kandinsky3.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/kandinsky3/#kandinsky-3

|

#kandinsky-3

|

.md

|

161_1

|

Kandinsky3Pipeline

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/kandinsky3.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/kandinsky3/#kandinsky3pipeline

|

#kandinsky3pipeline

|

.md

|

161_2

|

Kandinsky3Img2ImgPipeline

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/kandinsky3.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/kandinsky3/#kandinsky3img2imgpipeline

|

#kandinsky3img2imgpipeline

|

.md

|

161_3

|

<!--Copyright 2024 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/controlnet_sd3.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/controlnet_sd3/

|

.md

|

162_0

|

|

StableDiffusion3ControlNetPipeline is an implementation of ControlNet for Stable Diffusion 3.

ControlNet was introduced in [Adding Conditional Control to Text-to-Image Diffusion Models](https://huggingface.co/papers/2302.05543) by Lvmin Zhang, Anyi Rao, and Maneesh Agrawala.

With a ControlNet model, you can provide an additional control image to condition and control Stable Diffusion generation. For example, if you provide a depth map, the ControlNet model generates an image that'll preserve the spatial information from the depth map. It is a more flexible and accurate way to control the image generation process.

The abstract from the paper is:

*We present ControlNet, a neural network architecture to add spatial conditioning controls to large, pretrained text-to-image diffusion models. ControlNet locks the production-ready large diffusion models, and reuses their deep and robust encoding layers pretrained with billions of images as a strong backbone to learn a diverse set of conditional controls. The neural architecture is connected with "zero convolutions" (zero-initialized convolution layers) that progressively grow the parameters from zero and ensure that no harmful noise could affect the finetuning. We test various conditioning controls, eg, edges, depth, segmentation, human pose, etc, with Stable Diffusion, using single or multiple conditions, with or without prompts. We show that the training of ControlNets is robust with small (<50k) and large (>1m) datasets. Extensive results show that ControlNet may facilitate wider applications to control image diffusion models.*

This controlnet code is mainly implemented by [The InstantX Team](https://huggingface.co/InstantX). The inpainting-related code was developed by [The Alimama Creative Team](https://huggingface.co/alimama-creative). You can find pre-trained checkpoints for SD3-ControlNet in the table below:

| ControlNet type | Developer | Link |

| -------- | ---------- | ---- |

| Canny | [The InstantX Team](https://huggingface.co/InstantX) | [Link](https://huggingface.co/InstantX/SD3-Controlnet-Canny) |

| Depth | [The InstantX Team](https://huggingface.co/InstantX) | [Link](https://huggingface.co/InstantX/SD3-Controlnet-Depth) |

| Pose | [The InstantX Team](https://huggingface.co/InstantX) | [Link](https://huggingface.co/InstantX/SD3-Controlnet-Pose) |

| Tile | [The InstantX Team](https://huggingface.co/InstantX) | [Link](https://huggingface.co/InstantX/SD3-Controlnet-Tile) |

| Inpainting | [The AlimamaCreative Team](https://huggingface.co/alimama-creative) | [link](https://huggingface.co/alimama-creative/SD3-Controlnet-Inpainting) |

<Tip>

Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

</Tip>

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/controlnet_sd3.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/controlnet_sd3/#controlnet-with-stable-diffusion-3

|

#controlnet-with-stable-diffusion-3

|

.md

|

162_1

|

StableDiffusion3ControlNetPipeline

Args:

transformer ([`SD3Transformer2DModel`]):

Conditional Transformer (MMDiT) architecture to denoise the encoded image latents.

scheduler ([`FlowMatchEulerDiscreteScheduler`]):

A scheduler to be used in combination with `transformer` to denoise the encoded image latents.

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModelWithProjection`]):

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModelWithProjection),

specifically the [clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14) variant,

with an additional added projection layer that is initialized with a diagonal matrix with the `hidden_size`

as its dimension.

text_encoder_2 ([`CLIPTextModelWithProjection`]):

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModelWithProjection),

specifically the

[laion/CLIP-ViT-bigG-14-laion2B-39B-b160k](https://huggingface.co/laion/CLIP-ViT-bigG-14-laion2B-39B-b160k)

variant.

text_encoder_3 ([`T5EncoderModel`]):

Frozen text-encoder. Stable Diffusion 3 uses

[T5](https://huggingface.co/docs/transformers/model_doc/t5#transformers.T5EncoderModel), specifically the

[t5-v1_1-xxl](https://huggingface.co/google/t5-v1_1-xxl) variant.

tokenizer (`CLIPTokenizer`):

Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

tokenizer_2 (`CLIPTokenizer`):

Second Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

tokenizer_3 (`T5TokenizerFast`):

Tokenizer of class

[T5Tokenizer](https://huggingface.co/docs/transformers/model_doc/t5#transformers.T5Tokenizer).

controlnet ([`SD3ControlNetModel`] or `List[SD3ControlNetModel]` or [`SD3MultiControlNetModel`]):

Provides additional conditioning to the `unet` during the denoising process. If you set multiple

ControlNets as a list, the outputs from each ControlNet are added together to create one combined

additional conditioning.

image_encoder (`PreTrainedModel`, *optional*):

Pre-trained Vision Model for IP Adapter.

feature_extractor (`BaseImageProcessor`, *optional*):

Image processor for IP Adapter.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/controlnet_sd3.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/controlnet_sd3/#stablediffusion3controlnetpipeline

|

#stablediffusion3controlnetpipeline

|

.md

|

162_2

|

StableDiffusion3ControlNetInpaintingPipeline

Args:

transformer ([`SD3Transformer2DModel`]):

Conditional Transformer (MMDiT) architecture to denoise the encoded image latents.

scheduler ([`FlowMatchEulerDiscreteScheduler`]):

A scheduler to be used in combination with `transformer` to denoise the encoded image latents.

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModelWithProjection`]):

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModelWithProjection),

specifically the [clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14) variant,

with an additional added projection layer that is initialized with a diagonal matrix with the `hidden_size`

as its dimension.

text_encoder_2 ([`CLIPTextModelWithProjection`]):

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModelWithProjection),

specifically the

[laion/CLIP-ViT-bigG-14-laion2B-39B-b160k](https://huggingface.co/laion/CLIP-ViT-bigG-14-laion2B-39B-b160k)

variant.

text_encoder_3 ([`T5EncoderModel`]):

Frozen text-encoder. Stable Diffusion 3 uses

[T5](https://huggingface.co/docs/transformers/model_doc/t5#transformers.T5EncoderModel), specifically the

[t5-v1_1-xxl](https://huggingface.co/google/t5-v1_1-xxl) variant.

tokenizer (`CLIPTokenizer`):

Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

tokenizer_2 (`CLIPTokenizer`):

Second Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

tokenizer_3 (`T5TokenizerFast`):

Tokenizer of class

[T5Tokenizer](https://huggingface.co/docs/transformers/model_doc/t5#transformers.T5Tokenizer).

controlnet ([`SD3ControlNetModel`] or `List[SD3ControlNetModel]` or [`SD3MultiControlNetModel`]):

Provides additional conditioning to the `transformer` during the denoising process. If you set multiple

ControlNets as a list, the outputs from each ControlNet are added together to create one combined

additional conditioning.

image_encoder (`PreTrainedModel`, *optional*):

Pre-trained Vision Model for IP Adapter.

feature_extractor (`BaseImageProcessor`, *optional*):

Image processor for IP Adapter.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/controlnet_sd3.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/controlnet_sd3/#stablediffusion3controlnetinpaintingpipeline

|

#stablediffusion3controlnetinpaintingpipeline

|

.md

|

162_3

|

StableDiffusion3PipelineOutput

Output class for Stable Diffusion pipelines.

Args:

images (`List[PIL.Image.Image]` or `np.ndarray`)

List of denoised PIL images of length `batch_size` or numpy array of shape `(batch_size, height, width,

num_channels)`. PIL images or numpy array present the denoised images of the diffusion pipeline.

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/controlnet_sd3.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/controlnet_sd3/#stablediffusion3pipelineoutput

|

#stablediffusion3pipelineoutput

|

.md

|

162_4

|

<!--Copyright 2024 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/auto_pipeline.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/auto_pipeline/

|

.md

|

163_0

|

|

The `AutoPipeline` is designed to make it easy to load a checkpoint for a task without needing to know the specific pipeline class. Based on the task, the `AutoPipeline` automatically retrieves the correct pipeline class from the checkpoint `model_index.json` file.

> [!TIP]

> Check out the [AutoPipeline](../../tutorials/autopipeline) tutorial to learn how to use this API!

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/auto_pipeline.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/auto_pipeline/#autopipeline

|

#autopipeline

|

.md

|

163_1

|

AutoPipelineForText2Image

[`AutoPipelineForText2Image`] is a generic pipeline class that instantiates a text-to-image pipeline class. The

specific underlying pipeline class is automatically selected from either the

[`~AutoPipelineForText2Image.from_pretrained`] or [`~AutoPipelineForText2Image.from_pipe`] methods.

This class cannot be instantiated using `__init__()` (throws an error).

Class attributes:

- **config_name** (`str`) -- The configuration filename that stores the class and module names of all the

diffusion pipeline's components.

- all

- from_pretrained

- from_pipe

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/auto_pipeline.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/auto_pipeline/#autopipelinefortext2image

|

#autopipelinefortext2image

|

.md

|

163_2

|

AutoPipelineForImage2Image

[`AutoPipelineForImage2Image`] is a generic pipeline class that instantiates an image-to-image pipeline class. The

specific underlying pipeline class is automatically selected from either the

[`~AutoPipelineForImage2Image.from_pretrained`] or [`~AutoPipelineForImage2Image.from_pipe`] methods.

This class cannot be instantiated using `__init__()` (throws an error).

Class attributes:

- **config_name** (`str`) -- The configuration filename that stores the class and module names of all the

diffusion pipeline's components.

- all

- from_pretrained

- from_pipe

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/auto_pipeline.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/auto_pipeline/#autopipelineforimage2image

|

#autopipelineforimage2image

|

.md

|

163_3

|

AutoPipelineForInpainting

[`AutoPipelineForInpainting`] is a generic pipeline class that instantiates an inpainting pipeline class. The

specific underlying pipeline class is automatically selected from either the

[`~AutoPipelineForInpainting.from_pretrained`] or [`~AutoPipelineForInpainting.from_pipe`] methods.

This class cannot be instantiated using `__init__()` (throws an error).

Class attributes:

- **config_name** (`str`) -- The configuration filename that stores the class and module names of all the

diffusion pipeline's components.

- all

- from_pretrained

- from_pipe

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/auto_pipeline.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/auto_pipeline/#autopipelineforinpainting

|

#autopipelineforinpainting

|

.md

|

163_4

|

<!--Copyright 2024 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with