| text | source | url | source_section | file_type | id |

|---|---|---|---|---|---|

Use [`torch.compile`](https://huggingface.co/docs/diffusers/main/en/tutorials/fast_diffusion#torchcompile) to reduce the inference latency.

First, load the pipeline:

```python

from diffusers import HunyuanDiTPipeline

import torch

pipeline = HunyuanDiTPipeline.from_pretrained(
    "Tencent-Hunyuan/HunyuanDiT-Diffusers", torch_dtype=torch.float16
).to("cuda")

```

Then change the memory layout of the pipeline's `transformer` and `vae` components to `torch.channels_last`:

```python

pipeline.transformer.to(memory_format=torch.channels_last)

pipeline.vae.to(memory_format=torch.channels_last)

```

Finally, compile the components and run inference:

```python

pipeline.transformer = torch.compile(pipeline.transformer, mode="max-autotune", fullgraph=True)

pipeline.vae.decode = torch.compile(pipeline.vae.decode, mode="max-autotune", fullgraph=True)

image = pipeline(prompt="一个宇航员在骑马").images[0]

```

The [benchmark](https://gist.github.com/sayakpaul/29d3a14905cfcbf611fe71ebd22e9b23) results on an 80GB A100 machine are:

```bash

With torch.compile(): Average inference time: 12.470 seconds.

Without torch.compile(): Average inference time: 20.570 seconds.

```
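To put the two averages in perspective, the short sketch below derives the speedup factor and the relative latency reduction from the numbers reported above. It is plain arithmetic on those figures; the helper name `speedup_summary` is illustrative and not part of Diffusers.

```python
def speedup_summary(baseline_s: float, compiled_s: float) -> tuple[float, float]:
    """Return (speedup factor, fractional latency reduction)."""
    speedup = baseline_s / compiled_s
    reduction = (baseline_s - compiled_s) / baseline_s
    return speedup, reduction


# Averages reported in the benchmark above (80GB A100).
speedup, reduction = speedup_summary(baseline_s=20.570, compiled_s=12.470)
print(f"speedup: {speedup:.2f}x, latency reduction: {reduction:.1%}")
# prints "speedup: 1.65x, latency reduction: 39.4%"
```

Keep in mind that `torch.compile` compiles on first use, so the first call is typically much slower than later ones; averages like these are usually taken after a warm-up run.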

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/hunyuandit.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/hunyuandit/#inference

|

#inference

|

.md

|

143_3

|

By loading the T5 text encoder in 8-bit, you can run the pipeline in just under 6 GB of GPU VRAM. Refer to [this script](https://gist.github.com/sayakpaul/3154605f6af05b98a41081aaba5ca43e) for details.

Furthermore, you can use the [`~HunyuanDiT2DModel.enable_forward_chunking`] method to reduce memory usage. Feed-forward chunking runs the feed-forward layers in a transformer block in a loop instead of all at once. This gives you a trade-off between memory consumption and inference runtime.

```diff

+ pipeline.transformer.enable_forward_chunking(chunk_size=1, dim=1)

```
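The idea behind feed-forward chunking can be illustrated without any deep learning library. The sketch below is a simplified stand-in, not the Diffusers implementation: `feed_forward` and `chunked_feed_forward` are hypothetical helpers operating on plain Python lists. Because the feed-forward function treats each row independently, processing the rows in small chunks gives the same result as processing them all at once, while only one chunk of intermediate values exists at a time.

```python
def feed_forward(rows):
    """Stand-in for a feed-forward layer: acts on each row independently."""
    return [[2.0 * x + 1.0 for x in row] for row in rows]


def chunked_feed_forward(rows, chunk_size):
    """Apply feed_forward to `chunk_size` rows at a time and concatenate the results."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be >= 1")
    out = []
    for start in range(0, len(rows), chunk_size):
        out.extend(feed_forward(rows[start:start + chunk_size]))
    return out


tokens = [[float(i), float(i + 1)] for i in range(6)]  # 6 "tokens", 2 features each
full = feed_forward(tokens)
chunked = chunked_feed_forward(tokens, chunk_size=1)
print(full == chunked)  # True: same output, computed one row at a time
```

Smaller chunks mean more loop iterations (slower) but a smaller peak working set, which is the trade-off described above.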

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/hunyuandit.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/hunyuandit/#memory-optimization

|

#memory-optimization

|

.md

|

143_4

|

HunyuanDiTPipeline

Pipeline for English/Chinese-to-image generation using HunyuanDiT.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods the

library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.)

HunyuanDiT uses two text encoders: [mT5](https://huggingface.co/google/mt5-base) and a bilingual CLIP (fine-tuned by the HunyuanDiT authors).

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations. We use

`sdxl-vae-fp16-fix`.

text_encoder (Optional[`~transformers.BertModel`, `~transformers.CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

HunyuanDiT uses a fine-tuned bilingual CLIP.

tokenizer (Optional[`~transformers.BertTokenizer`, `~transformers.CLIPTokenizer`]):

A `BertTokenizer` or `CLIPTokenizer` to tokenize text.

transformer ([`HunyuanDiT2DModel`]):

The HunyuanDiT model designed by Tencent Hunyuan.

text_encoder_2 (`T5EncoderModel`):

The mT5 embedder. Specifically, it is 't5-v1_1-xxl'.

tokenizer_2 (`MT5Tokenizer`):

The tokenizer for the mT5 embedder.

scheduler ([`DDPMScheduler`]):

A scheduler to be used in combination with HunyuanDiT to denoise the encoded image latents.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/hunyuandit.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/hunyuandit/#hunyuanditpipeline

|

#hunyuanditpipeline

|

.md

|

143_5

|

<!--Copyright 2024 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pixart_sigma.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pixart_sigma/

|

.md

|

144_0

|

|

[PixArt-Σ: Weak-to-Strong Training of Diffusion Transformer for 4K Text-to-Image Generation](https://huggingface.co/papers/2403.04692) is by Junsong Chen, Jincheng Yu, Chongjian Ge, Lewei Yao, Enze Xie, Yue Wu, Zhongdao Wang, James Kwok, Ping Luo, Huchuan Lu, and Zhenguo Li.

The abstract from the paper is:

*In this paper, we introduce PixArt-Σ, a Diffusion Transformer model (DiT) capable of directly generating images at 4K resolution. PixArt-Σ represents a significant advancement over its predecessor, PixArt-α, offering images of markedly higher fidelity and improved alignment with text prompts. A key feature of PixArt-Σ is its training efficiency. Leveraging the foundational pre-training of PixArt-α, it evolves from the ‘weaker’ baseline to a ‘stronger’ model via incorporating higher quality data, a process we term “weak-to-strong training”. The advancements in PixArt-Σ are twofold: (1) High-Quality Training Data: PixArt-Σ incorporates superior-quality image data, paired with more precise and detailed image captions. (2) Efficient Token Compression: we propose a novel attention module within the DiT framework that compresses both keys and values, significantly improving efficiency and facilitating ultra-high-resolution image generation. Thanks to these improvements, PixArt-Σ achieves superior image quality and user prompt adherence capabilities with significantly smaller model size (0.6B parameters) than existing text-to-image diffusion models, such as SDXL (2.6B parameters) and SD Cascade (5.1B parameters). Moreover, PixArt-Σ’s capability to generate 4K images supports the creation of high-resolution posters and wallpapers, efficiently bolstering the production of high-quality visual content in industries such as film and gaming.*

You can find the original codebase at [PixArt-alpha/PixArt-sigma](https://github.com/PixArt-alpha/PixArt-sigma) and all the available checkpoints at [PixArt-alpha](https://huggingface.co/PixArt-alpha).

Some notes about this pipeline:

* It uses a Transformer backbone (instead of a UNet) for denoising. As such, its architecture is similar to [DiT](https://hf.co/docs/transformers/model_doc/dit).

* It was trained using text conditions computed from T5. This aspect makes the pipeline better at following complex text prompts with intricate details.

* It is good at producing high-resolution images at different aspect ratios. To get the best results, the authors recommend some size brackets which can be found [here](https://github.com/PixArt-alpha/PixArt-sigma/blob/master/diffusion/data/datasets/utils.py).

* It rivals the quality of state-of-the-art text-to-image generation systems (as of this writing) such as PixArt-α, Stable Diffusion XL, Playground V2.0 and DALL-E 3, while being more efficient than them.

* It can generate very high-resolution images, such as 2048px or even 4K.

* It shows that text-to-image models can grow from a weak model to a stronger one through several improvements (VAEs, datasets, and so on).

<Tip>

Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

</Tip>

<Tip>

You can further improve generation quality by passing the generated image from [`PixArtSigmaPipeline`] to the [SDXL refiner](../../using-diffusers/sdxl#base-to-refiner-model) model.

</Tip>

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pixart_sigma.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pixart_sigma/#pixart-σ

|

#pixart-σ

|

.md

|

144_1

|

Run the [`PixArtSigmaPipeline`] with under 8GB GPU VRAM by loading the text encoder in 8-bit precision. Let's walk through a full-fledged example.

First, install the [bitsandbytes](https://github.com/TimDettmers/bitsandbytes) library:

```bash

pip install -U bitsandbytes

```

Then load the text encoder in 8-bit:

```python

from transformers import T5EncoderModel

from diffusers import PixArtSigmaPipeline

import torch

text_encoder = T5EncoderModel.from_pretrained(
    "PixArt-alpha/PixArt-Sigma-XL-2-1024-MS",
    subfolder="text_encoder",
    load_in_8bit=True,
    device_map="auto",
)

pipe = PixArtSigmaPipeline.from_pretrained(
    "PixArt-alpha/PixArt-Sigma-XL-2-1024-MS",
    text_encoder=text_encoder,
    transformer=None,
    device_map="balanced"
)

```

Now, use the `pipe` to encode a prompt:

```python

with torch.no_grad():
    prompt = "cute cat"
    prompt_embeds, prompt_attention_mask, negative_embeds, negative_prompt_attention_mask = pipe.encode_prompt(prompt)

```

Now that the text embeddings have been computed, remove the `text_encoder` and `pipe` from memory and free up some GPU VRAM:

```python

import gc

def flush():
    # Run the garbage collector, then release cached GPU memory.
    gc.collect()
    torch.cuda.empty_cache()

del text_encoder
del pipe
flush()

```

Then compute the latents with the prompt embeddings as inputs:

```python

pipe = PixArtSigmaPipeline.from_pretrained(
    "PixArt-alpha/PixArt-Sigma-XL-2-1024-MS",
    text_encoder=None,
    torch_dtype=torch.float16,
).to("cuda")

latents = pipe(
    negative_prompt=None,
    prompt_embeds=prompt_embeds,
    negative_prompt_embeds=negative_embeds,
    prompt_attention_mask=prompt_attention_mask,
    negative_prompt_attention_mask=negative_prompt_attention_mask,
    num_images_per_prompt=1,
    output_type="latent",
).images

del pipe.transformer
flush()

```

<Tip>

Notice that while initializing `pipe`, you're setting `text_encoder` to `None` so that it's not loaded.

</Tip>

Once the latents are computed, pass them to the VAE to decode into a real image:

```python

with torch.no_grad():
    image = pipe.vae.decode(latents / pipe.vae.config.scaling_factor, return_dict=False)[0]
image = pipe.image_processor.postprocess(image, output_type="pil")[0]
image.save("cat.png")

```

By deleting components you aren't using and flushing the GPU VRAM, you should be able to run [`PixArtSigmaPipeline`] with under 8GB GPU VRAM.

If you want a report of your memory usage, run this [script](https://gist.github.com/sayakpaul/3ae0f847001d342af27018a96f467e4e).

<Tip warning={true}>

Text embeddings computed in 8-bit can impact the quality of the generated images because of the information loss in the representation space caused by the reduced precision. It's recommended to compare the outputs with and without 8-bit.

</Tip>

While loading the `text_encoder`, you set `load_in_8bit` to `True`. You could also specify `load_in_4bit` to bring your memory requirements down even further to under 7GB.
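As a rough way to reason about why lower precision helps, the sketch below estimates the memory taken by model weights alone at different precisions. It is a back-of-the-envelope calculation, not a measurement: the helper `weight_memory_gib` and the parameter count used in the example are hypothetical, and real usage also includes activations, quantization metadata, and framework overhead.

```python
# Approximate storage cost per parameter, in bytes.
BYTES_PER_PARAM = {"float32": 4.0, "float16": 2.0, "int8": 1.0, "4bit": 0.5}


def weight_memory_gib(num_params: int, precision: str) -> float:
    """Estimate the memory (in GiB) needed to hold only the weights."""
    return num_params * BYTES_PER_PARAM[precision] / 1024**3


# Hypothetical parameter count, chosen only to illustrate the scaling.
example_params = 1_000_000_000
for precision in BYTES_PER_PARAM:
    print(f"{precision:>8}: {weight_memory_gib(example_params, precision):.2f} GiB")
```

Going from 8-bit to 4-bit halves the weight storage of the quantized component, which is consistent with the further reduction mentioned above, although the other pipeline components are unaffected.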

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pixart_sigma.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pixart_sigma/#inference-with-under-8gb-gpu-vram

|

#inference-with-under-8gb-gpu-vram

|

.md

|

144_2

|

PixArtSigmaPipeline

Pipeline for text-to-image generation using PixArt-Sigma.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pixart_sigma.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pixart_sigma/#pixartsigmapipeline

|

#pixartsigmapipeline

|

.md

|

144_3

|

<!--Copyright 2024 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/

|

.md

|

145_0

|

|

[Perturbed-Attention Guidance (PAG)](https://ku-cvlab.github.io/Perturbed-Attention-Guidance/) is a new diffusion sampling guidance that improves sample quality across both unconditional and conditional settings, achieving this without requiring further training or the integration of external modules.

PAG was introduced in [Self-Rectifying Diffusion Sampling with Perturbed-Attention Guidance](https://huggingface.co/papers/2403.17377) by Donghoon Ahn, Hyoungwon Cho, Jaewon Min, Wooseok Jang, Jungwoo Kim, SeonHwa Kim, Hyun Hee Park, Kyong Hwan Jin and Seungryong Kim.

The abstract from the paper is:

*Recent studies have demonstrated that diffusion models are capable of generating high-quality samples, but their quality heavily depends on sampling guidance techniques, such as classifier guidance (CG) and classifier-free guidance (CFG). These techniques are often not applicable in unconditional generation or in various downstream tasks such as image restoration. In this paper, we propose a novel sampling guidance, called Perturbed-Attention Guidance (PAG), which improves diffusion sample quality across both unconditional and conditional settings, achieving this without requiring additional training or the integration of external modules. PAG is designed to progressively enhance the structure of samples throughout the denoising process. It involves generating intermediate samples with degraded structure by substituting selected self-attention maps in diffusion U-Net with an identity matrix, by considering the self-attention mechanisms' ability to capture structural information, and guiding the denoising process away from these degraded samples. In both ADM and Stable Diffusion, PAG surprisingly improves sample quality in conditional and even unconditional scenarios. Moreover, PAG significantly improves the baseline performance in various downstream tasks where existing guidances such as CG or CFG cannot be fully utilized, including ControlNet with empty prompts and image restoration such as inpainting and deblurring.*

PAG can be used by specifying the `pag_applied_layers` as a parameter when instantiating a PAG pipeline. It can be a single string or a list of strings. Each string can be a unique layer identifier or a regular expression to identify one or more layers.

- Full identifier as a normal string: `down_blocks.2.attentions.0.transformer_blocks.0.attn1.processor`

- Full identifier as a RegEx: `down_blocks.2.(attentions|motion_modules).0.transformer_blocks.0.attn1.processor`

- Partial identifier as a RegEx: `down_blocks.2`, or `attn1`

- List of identifiers (can be a combination of strings and RegEx): `["blocks.1", "blocks.(14|20)", r"down_blocks\.(2,3)"]`

<Tip warning={true}>

Because RegEx is supported for matching layer identifiers, it is crucial to use it correctly; otherwise, you may see unexpected behavior. The recommended way to use PAG is to specify layers as `blocks.{layer_index}` and `blocks.({layer_index_1|layer_index_2|...})`. Using it in any other way, while possible, may bypass our basic validation checks and give you unexpected results.

</Tip>
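To see how such identifiers behave as regular expressions, the sketch below matches a few patterns against a list of layer names with Python's `re.search`. This is only an illustration under assumptions: the layer names are made up for the example, `matching_layers` is a hypothetical helper, and the library may apply extra validation on top of plain regex matching.

```python
import re

# Hypothetical layer names, for illustration only.
layer_names = [
    "down_blocks.2.attentions.0.transformer_blocks.0.attn1.processor",
    "down_blocks.2.motion_modules.0.transformer_blocks.0.attn1.processor",
    "down_blocks.3.attentions.0.transformer_blocks.0.attn1.processor",
    "mid_block.attentions.0.transformer_blocks.0.attn1.processor",
]


def matching_layers(identifiers, names):
    """Return the names matched by a single identifier or a list of identifiers."""
    if isinstance(identifiers, str):
        identifiers = [identifiers]
    return [name for name in names if any(re.search(p, name) for p in identifiers)]


# Full identifier written as a RegEx with an alternation group.
full = matching_layers(
    r"down_blocks.2.(attentions|motion_modules).0.transformer_blocks.0.attn1.processor",
    layer_names,
)
# Partial identifier: matches every layer under down_blocks.2.
partial = matching_layers("down_blocks.2", layer_names)
# A comma inside a group is a literal comma, so this matches nothing here.
comma = matching_layers(r"down_blocks\.(2,3)", layer_names)
# Use "|" to express "2 or 3".
alternation = matching_layers(r"down_blocks\.(2|3)", layer_names)

print(len(full), len(partial), len(comma), len(alternation))  # 2 2 0 3
```

Note that an unescaped `.` matches any character, so short patterns can match more layers than intended. Checking a pattern against your model's layer names before running the pipeline is a cheap way to avoid surprises.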

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#perturbed-attention-guidance

|

#perturbed-attention-guidance

|

.md

|

145_1

|

AnimateDiffPAGPipeline

Pipeline for text-to-video generation using

[AnimateDiff](https://huggingface.co/docs/diffusers/en/api/pipelines/animatediff) and [Perturbed Attention

Guidance](https://huggingface.co/docs/diffusers/en/using-diffusers/pag).

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.StableDiffusionLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

tokenizer (`CLIPTokenizer`):

A [`~transformers.CLIPTokenizer`] to tokenize text.

unet ([`UNet2DConditionModel`]):

A [`UNet2DConditionModel`] used to create a UNetMotionModel to denoise the encoded video latents.

motion_adapter ([`MotionAdapter`]):

A [`MotionAdapter`] to be used in combination with `unet` to denoise the encoded video latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#animatediffpagpipeline

|

#animatediffpagpipeline

|

.md

|

145_2

|

HunyuanDiTPAGPipeline

Pipeline for English/Chinese-to-image generation using HunyuanDiT and [Perturbed Attention

Guidance](https://huggingface.co/docs/diffusers/en/using-diffusers/pag).

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods the

library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.)

HunyuanDiT uses two text encoders: [mT5](https://huggingface.co/google/mt5-base) and a bilingual CLIP (fine-tuned by the HunyuanDiT authors).

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations. We use

`sdxl-vae-fp16-fix`.

text_encoder (Optional[`~transformers.BertModel`, `~transformers.CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

HunyuanDiT uses a fine-tuned bilingual CLIP.

tokenizer (Optional[`~transformers.BertTokenizer`, `~transformers.CLIPTokenizer`]):

A `BertTokenizer` or `CLIPTokenizer` to tokenize text.

transformer ([`HunyuanDiT2DModel`]):

The HunyuanDiT model designed by Tencent Hunyuan.

text_encoder_2 (`T5EncoderModel`):

The mT5 embedder. Specifically, it is 't5-v1_1-xxl'.

tokenizer_2 (`MT5Tokenizer`):

The tokenizer for the mT5 embedder.

scheduler ([`DDPMScheduler`]):

A scheduler to be used in combination with HunyuanDiT to denoise the encoded image latents.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#hunyuanditpagpipeline

|

#hunyuanditpagpipeline

|

.md

|

145_3

|

KolorsPAGPipeline

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#kolorspagpipeline

|

#kolorspagpipeline

|

.md

|

145_4

|

StableDiffusionPAGInpaintPipeline

Pipeline for text-guided image inpainting using Stable Diffusion.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.StableDiffusionLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.FromSingleFileMixin.from_single_file`] for loading `.ckpt` files

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) model to encode and decode images to and from latent representations.

text_encoder ([`~transformers.CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

tokenizer ([`~transformers.CLIPTokenizer`]):

A `CLIPTokenizer` to tokenize text.

unet ([`UNet2DConditionModel`]):

A `UNet2DConditionModel` to denoise the encoded image latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

safety_checker ([`StableDiffusionSafetyChecker`]):

Classification module that estimates whether generated images could be considered offensive or harmful.

Please refer to the [model card](https://huggingface.co/runwayml/stable-diffusion-v1-5) for more details

about a model's potential harms.

feature_extractor ([`~transformers.CLIPImageProcessor`]):

A `CLIPImageProcessor` to extract features from generated images; used as inputs to the `safety_checker`.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#stablediffusionpaginpaintpipeline

|

#stablediffusionpaginpaintpipeline

|

.md

|

145_5

|

StableDiffusionPAGPipeline

Pipeline for text-to-image generation using Stable Diffusion.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.StableDiffusionLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.FromSingleFileMixin.from_single_file`] for loading `.ckpt` files

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) model to encode and decode images to and from latent representations.

text_encoder ([`~transformers.CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

tokenizer ([`~transformers.CLIPTokenizer`]):

A `CLIPTokenizer` to tokenize text.

unet ([`UNet2DConditionModel`]):

A `UNet2DConditionModel` to denoise the encoded image latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

safety_checker ([`StableDiffusionSafetyChecker`]):

Classification module that estimates whether generated images could be considered offensive or harmful.

Please refer to the [model card](https://huggingface.co/runwayml/stable-diffusion-v1-5) for more details

about a model's potential harms.

feature_extractor ([`~transformers.CLIPImageProcessor`]):

A `CLIPImageProcessor` to extract features from generated images; used as inputs to the `safety_checker`.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#stablediffusionpagpipeline

|

#stablediffusionpagpipeline

|

.md

|

145_6

|

StableDiffusionPAGImg2ImgPipeline

Pipeline for text-guided image-to-image generation using Stable Diffusion.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.StableDiffusionLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.FromSingleFileMixin.from_single_file`] for loading `.ckpt` files

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) model to encode and decode images to and from latent representations.

text_encoder ([`~transformers.CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

tokenizer ([`~transformers.CLIPTokenizer`]):

A `CLIPTokenizer` to tokenize text.

unet ([`UNet2DConditionModel`]):

A `UNet2DConditionModel` to denoise the encoded image latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

safety_checker ([`StableDiffusionSafetyChecker`]):

Classification module that estimates whether generated images could be considered offensive or harmful.

Please refer to the [model card](https://huggingface.co/runwayml/stable-diffusion-v1-5) for more details

about a model's potential harms.

feature_extractor ([`~transformers.CLIPImageProcessor`]):

A `CLIPImageProcessor` to extract features from generated images; used as inputs to the `safety_checker`.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#stablediffusionpagimg2imgpipeline

|

#stablediffusionpagimg2imgpipeline

|

.md

|

145_7

|

StableDiffusionControlNetPAGPipeline

Pipeline for text-to-image generation using Stable Diffusion with ControlNet guidance.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.StableDiffusionLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.FromSingleFileMixin.from_single_file`] for loading `.ckpt` files

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) model to encode and decode images to and from latent representations.

text_encoder ([`~transformers.CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

tokenizer ([`~transformers.CLIPTokenizer`]):

A `CLIPTokenizer` to tokenize text.

unet ([`UNet2DConditionModel`]):

A `UNet2DConditionModel` to denoise the encoded image latents.

controlnet ([`ControlNetModel`] or `List[ControlNetModel]`):

Provides additional conditioning to the `unet` during the denoising process. If you set multiple

ControlNets as a list, the outputs from each ControlNet are added together to create one combined

additional conditioning.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

safety_checker ([`StableDiffusionSafetyChecker`]):

Classification module that estimates whether generated images could be considered offensive or harmful.

Please refer to the [model card](https://huggingface.co/runwayml/stable-diffusion-v1-5) for more details

about a model's potential harms.

feature_extractor ([`~transformers.CLIPImageProcessor`]):

A `CLIPImageProcessor` to extract features from generated images; used as inputs to the `safety_checker`.
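The `controlnet` entry above says that the outputs of multiple ControlNets are added together into one combined conditioning. A minimal sketch of that idea, using plain Python lists in place of tensors, might look as follows. The helper `combine_controlnet_outputs` and the sample values are hypothetical; the real pipeline works on tensors and may also apply per-ControlNet conditioning scales.

```python
def combine_controlnet_outputs(outputs):
    """Element-wise sum of the residuals produced by each ControlNet."""
    if not outputs:
        raise ValueError("at least one ControlNet output is required")
    combined = list(outputs[0])
    for residuals in outputs[1:]:
        if len(residuals) != len(combined):
            raise ValueError("all ControlNet outputs must have the same length")
        combined = [a + b for a, b in zip(combined, residuals)]
    return combined


# Made-up residual values standing in for two ControlNets' outputs.
first_controlnet = [0.5, -1.0, 2.0]
second_controlnet = [1.5, 0.25, -2.0]
combined = combine_controlnet_outputs([first_controlnet, second_controlnet])
print(combined)  # [2.0, -0.75, 0.0]
```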

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#stablediffusioncontrolnetpagpipeline

|

#stablediffusioncontrolnetpagpipeline

|

.md

|

145_8

|

StableDiffusionControlNetPAGInpaintPipeline

Pipeline for image inpainting using Stable Diffusion with ControlNet guidance.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.StableDiffusionLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.FromSingleFileMixin.from_single_file`] for loading `.ckpt` files

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

<Tip>

This pipeline can be used with checkpoints that have been specifically fine-tuned for inpainting

([runwayml/stable-diffusion-inpainting](https://huggingface.co/runwayml/stable-diffusion-inpainting)) as well as

default text-to-image Stable Diffusion checkpoints

([runwayml/stable-diffusion-v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5)). Default text-to-image

Stable Diffusion checkpoints might be preferable for ControlNets that have been fine-tuned on those, such as

[lllyasviel/control_v11p_sd15_inpaint](https://huggingface.co/lllyasviel/control_v11p_sd15_inpaint).

</Tip>

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) model to encode and decode images to and from latent representations.

text_encoder ([`~transformers.CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

tokenizer ([`~transformers.CLIPTokenizer`]):

A `CLIPTokenizer` to tokenize text.

unet ([`UNet2DConditionModel`]):

A `UNet2DConditionModel` to denoise the encoded image latents.

controlnet ([`ControlNetModel`] or `List[ControlNetModel]`):

Provides additional conditioning to the `unet` during the denoising process. If you set multiple

ControlNets as a list, the outputs from each ControlNet are added together to create one combined

additional conditioning.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

safety_checker ([`StableDiffusionSafetyChecker`]):

Classification module that estimates whether generated images could be considered offensive or harmful.

Please refer to the [model card](https://huggingface.co/runwayml/stable-diffusion-v1-5) for more details

about a model's potential harms.

feature_extractor ([`~transformers.CLIPImageProcessor`]):

A `CLIPImageProcessor` to extract features from generated images; used as inputs to the `safety_checker`.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#stablediffusioncontrolnetpaginpaintpipeline

|

#stablediffusioncontrolnetpaginpaintpipeline

|

.md

|

145_9

|

StableDiffusionXLPAGPipeline

Pipeline for text-to-image generation using Stable Diffusion XL.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods the

library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.)

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.FromSingleFileMixin.from_single_file`] for loading `.ckpt` files

- [`~loaders.StableDiffusionXLLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionXLLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModel`]):

Frozen text-encoder. Stable Diffusion XL uses the text portion of

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModel), specifically

the [clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14) variant.

text_encoder_2 ([`CLIPTextModelWithProjection`]):

Second frozen text-encoder. Stable Diffusion XL uses the text and pool portion of

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModelWithProjection),

specifically the

[laion/CLIP-ViT-bigG-14-laion2B-39B-b160k](https://huggingface.co/laion/CLIP-ViT-bigG-14-laion2B-39B-b160k)

variant.

tokenizer (`CLIPTokenizer`):

Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

tokenizer_2 (`CLIPTokenizer`):

Second Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

unet ([`UNet2DConditionModel`]): Conditional U-Net architecture to denoise the encoded image latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

force_zeros_for_empty_prompt (`bool`, *optional*, defaults to `True`):

Whether the negative prompt embeddings shall be forced to always be set to 0. Also see the config of

`stabilityai/stable-diffusion-xl-base-1-0`.

add_watermarker (`bool`, *optional*):

Whether to use the [invisible_watermark library](https://github.com/ShieldMnt/invisible-watermark/) to

watermark output images. If not defined, it will default to True if the package is installed, otherwise no

watermarker will be used.

- all

- __call__
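
The default described for `add_watermarker` can be sketched as a small resolver. This is a minimal illustration, not the pipeline's actual code: the helper name `resolve_add_watermarker` is hypothetical, and it assumes the invisible-watermark package is importable under the module name `imwatermark`.

```python
import importlib.util
from typing import Optional


def resolve_add_watermarker(
    add_watermarker: Optional[bool] = None,
    module_name: str = "imwatermark",  # assumed import name of invisible-watermark
) -> bool:
    """Decide whether a watermarker should be used (hypothetical helper)."""
    if add_watermarker is not None:
        # An explicit choice always wins.
        return add_watermarker
    # Not defined: enable watermarking only if the package can be found.
    return importlib.util.find_spec(module_name) is not None


# Explicitly disabling the watermarker never depends on what is installed.
print(resolve_add_watermarker(False))
```

Passing `add_watermarker=False` when constructing the pipeline is therefore the way to opt out even when the package is installed.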

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#stablediffusionxlpagpipeline

|

#stablediffusionxlpagpipeline

|

.md

|

145_10

|

StableDiffusionXLPAGImg2ImgPipeline

Pipeline for image-to-image generation using Stable Diffusion XL.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods the

library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.)

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.FromSingleFileMixin.from_single_file`] for loading `.ckpt` files

- [`~loaders.StableDiffusionXLLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionXLLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModel`]):

Frozen text-encoder. Stable Diffusion XL uses the text portion of

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModel), specifically

the [clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14) variant.

text_encoder_2 ([`CLIPTextModelWithProjection`]):

Second frozen text-encoder. Stable Diffusion XL uses the text and pool portion of

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModelWithProjection),

specifically the

[laion/CLIP-ViT-bigG-14-laion2B-39B-b160k](https://huggingface.co/laion/CLIP-ViT-bigG-14-laion2B-39B-b160k)

variant.

tokenizer (`CLIPTokenizer`):

Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

tokenizer_2 (`CLIPTokenizer`):

Second Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

unet ([`UNet2DConditionModel`]): Conditional U-Net architecture to denoise the encoded image latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

requires_aesthetics_score (`bool`, *optional*, defaults to `False`):

Whether the `unet` requires an `aesthetic_score` condition to be passed during inference. Also see the

config of `stabilityai/stable-diffusion-xl-refiner-1-0`.

force_zeros_for_empty_prompt (`bool`, *optional*, defaults to `True`):

Whether the negative prompt embeddings shall be forced to always be set to 0. Also see the config of

`stabilityai/stable-diffusion-xl-base-1-0`.

add_watermarker (`bool`, *optional*):

Whether to use the [invisible_watermark library](https://github.com/ShieldMnt/invisible-watermark/) to

watermark output images. If not defined, it will default to True if the package is installed, otherwise no

watermarker will be used.

- all

- __call__
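
The effect of `force_zeros_for_empty_prompt` can be illustrated with a toy sketch. It assumes that the zeroing applies when no negative prompt is supplied; the function names, the list-based embeddings, and `toy_encode` are hypothetical stand-ins and not the pipeline's implementation.

```python
from typing import Callable, List, Optional

Embedding = List[float]


def negative_prompt_embeddings(
    prompt_embeds: Embedding,
    negative_prompt: Optional[str],
    force_zeros_for_empty_prompt: bool,
    encode: Callable[[str], Embedding],
) -> Embedding:
    """Pick the negative embeddings (hypothetical helper)."""
    if negative_prompt is None and force_zeros_for_empty_prompt:
        # Assumption: without a negative prompt, use all-zero embeddings
        # with the same length as the positive prompt embeddings.
        return [0.0] * len(prompt_embeds)
    # Otherwise encode the negative prompt (or the empty string).
    return encode(negative_prompt or "")


def toy_encode(text: str) -> Embedding:
    # Stand-in for a text encoder; NOT a real model.
    return [float(len(text)), 1.0, 1.0]


zeros = negative_prompt_embeddings([0.3, 0.2, 0.1], None, True, toy_encode)
encoded = negative_prompt_embeddings([0.3, 0.2, 0.1], "blurry", True, toy_encode)
```

In this sketch an explicit negative prompt is always encoded, so the flag only matters for the empty case.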

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#stablediffusionxlpagimg2imgpipeline

|

#stablediffusionxlpagimg2imgpipeline

|

.md

|

145_11

|

StableDiffusionXLPAGInpaintPipeline

Pipeline for text-guided image inpainting using Stable Diffusion XL.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods the

library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.)

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.FromSingleFileMixin.from_single_file`] for loading `.ckpt` files

- [`~loaders.StableDiffusionXLLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionXLLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModel`]):

Frozen text-encoder. Stable Diffusion XL uses the text portion of

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModel), specifically

the [clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14) variant.

text_encoder_2 ([`CLIPTextModelWithProjection`]):

Second frozen text-encoder. Stable Diffusion XL uses the text and pool portion of

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModelWithProjection),

specifically the

[laion/CLIP-ViT-bigG-14-laion2B-39B-b160k](https://huggingface.co/laion/CLIP-ViT-bigG-14-laion2B-39B-b160k)

variant.

tokenizer (`CLIPTokenizer`):

Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

tokenizer_2 (`CLIPTokenizer`):

Second Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

unet ([`UNet2DConditionModel`]): Conditional U-Net architecture to denoise the encoded image latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

requires_aesthetics_score (`bool`, *optional*, defaults to `False`):

Whether the `unet` requires an `aesthetic_score` condition to be passed during inference. Also see the config

of `stabilityai/stable-diffusion-xl-refiner-1-0`.

force_zeros_for_empty_prompt (`bool`, *optional*, defaults to `True`):

Whether the negative prompt embeddings shall be forced to always be set to 0. Also see the config of

`stabilityai/stable-diffusion-xl-base-1-0`.

add_watermarker (`bool`, *optional*):

Whether to use the [invisible_watermark library](https://github.com/ShieldMnt/invisible-watermark/) to

watermark output images. If not defined, it will default to True if the package is installed, otherwise no

watermarker will be used.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#stablediffusionxlpaginpaintpipeline

|

#stablediffusionxlpaginpaintpipeline

|

.md

|

145_12

|

StableDiffusionXLControlNetPAGPipeline

Pipeline for text-to-image generation using Stable Diffusion XL with ControlNet guidance.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.StableDiffusionXLLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionXLLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.FromSingleFileMixin.from_single_file`] for loading `.ckpt` files

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) model to encode and decode images to and from latent representations.

text_encoder ([`~transformers.CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

text_encoder_2 ([`~transformers.CLIPTextModelWithProjection`]):

Second frozen text-encoder

([laion/CLIP-ViT-bigG-14-laion2B-39B-b160k](https://huggingface.co/laion/CLIP-ViT-bigG-14-laion2B-39B-b160k)).

tokenizer ([`~transformers.CLIPTokenizer`]):

A `CLIPTokenizer` to tokenize text.

tokenizer_2 ([`~transformers.CLIPTokenizer`]):

A `CLIPTokenizer` to tokenize text.

unet ([`UNet2DConditionModel`]):

A `UNet2DConditionModel` to denoise the encoded image latents.

controlnet ([`ControlNetModel`] or `List[ControlNetModel]`):

Provides additional conditioning to the `unet` during the denoising process. If you set multiple

ControlNets as a list, the outputs from each ControlNet are added together to create one combined

additional conditioning.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

force_zeros_for_empty_prompt (`bool`, *optional*, defaults to `True`):

Whether the negative prompt embeddings should always be set to 0. Also see the config of

`stabilityai/stable-diffusion-xl-base-1-0`.

add_watermarker (`bool`, *optional*):

Whether to use the [invisible_watermark](https://github.com/ShieldMnt/invisible-watermark/) library to

watermark output images. If not defined, it defaults to `True` if the package is installed; otherwise no

watermarker is used.

- all

- __call__
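
The statement that outputs from multiple ControlNets are added together can be sketched with plain Python lists standing in for tensors. This is only an illustration of the elementwise sum; the function name `combine_controlnet_outputs` and the example values are hypothetical, and the real pipeline operates on tensors rather than lists.

```python
from typing import List, Sequence


def combine_controlnet_outputs(outputs: Sequence[Sequence[float]]) -> List[float]:
    """Sum per-ControlNet outputs elementwise into one conditioning signal."""
    if not outputs:
        raise ValueError("at least one ControlNet output is required")
    combined = list(outputs[0])
    for residual in outputs[1:]:
        if len(residual) != len(combined):
            raise ValueError("all ControlNet outputs must have the same shape")
        # Add this ControlNet's contribution to the running total.
        combined = [a + b for a, b in zip(combined, residual)]
    return combined


# Two toy outputs, e.g. from an edge-based and a depth-based ControlNet.
first_output = [1.0, 2.0, 3.0]
second_output = [0.5, 0.5, 0.5]
combined = combine_controlnet_outputs([first_output, second_output])
```

Because the contributions are summed, each ControlNet influences the same conditioning signal rather than being applied one after another.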

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#stablediffusionxlcontrolnetpagpipeline

|

#stablediffusionxlcontrolnetpagpipeline

|

.md

|

145_13

|

StableDiffusionXLControlNetPAGImg2ImgPipeline

Pipeline for image-to-image generation using Stable Diffusion XL with ControlNet guidance.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods the

library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.)

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.StableDiffusionXLLoraLoaderMixin.load_lora_weights`] for loading LoRA weights

- [`~loaders.StableDiffusionXLLoraLoaderMixin.save_lora_weights`] for saving LoRA weights

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModel`]):

Frozen text-encoder. Stable Diffusion XL uses the text portion of

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModel), specifically

the [clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14) variant.

text_encoder_2 ([`CLIPTextModelWithProjection`]):

Second frozen text-encoder. Stable Diffusion XL uses the text and pool portion of

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModelWithProjection),

specifically the

[laion/CLIP-ViT-bigG-14-laion2B-39B-b160k](https://huggingface.co/laion/CLIP-ViT-bigG-14-laion2B-39B-b160k)

variant.

tokenizer (`CLIPTokenizer`):

Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

tokenizer_2 (`CLIPTokenizer`):

Second Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

unet ([`UNet2DConditionModel`]): Conditional U-Net architecture to denoise the encoded image latents.

controlnet ([`ControlNetModel`] or `List[ControlNetModel]`):

Provides additional conditioning to the `unet` during the denoising process. If you set multiple ControlNets

as a list, the outputs from each ControlNet are added together to create one combined additional

conditioning.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

requires_aesthetics_score (`bool`, *optional*, defaults to `False`):

Whether the `unet` requires an `aesthetic_score` condition to be passed during inference. Also see the

config of `stabilityai/stable-diffusion-xl-refiner-1-0`.

force_zeros_for_empty_prompt (`bool`, *optional*, defaults to `True`):

Whether the negative prompt embeddings shall be forced to always be set to 0. Also see the config of

`stabilityai/stable-diffusion-xl-base-1-0`.

add_watermarker (`bool`, *optional*):

Whether to use the [invisible_watermark library](https://github.com/ShieldMnt/invisible-watermark/) to

watermark output images. If not defined, it will default to True if the package is installed, otherwise no

watermarker will be used.

feature_extractor ([`~transformers.CLIPImageProcessor`]):

A `CLIPImageProcessor` to extract features from generated images; used as inputs to the `safety_checker`.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#stablediffusionxlcontrolnetpagimg2imgpipeline

|

#stablediffusionxlcontrolnetpagimg2imgpipeline

|

.md

|

145_14

|

StableDiffusion3PAGPipeline

[PAG pipeline](https://huggingface.co/docs/diffusers/main/en/using-diffusers/pag) for text-to-image generation

using Stable Diffusion 3.

Args:

transformer ([`SD3Transformer2DModel`]):

Conditional Transformer (MMDiT) architecture to denoise the encoded image latents.

scheduler ([`FlowMatchEulerDiscreteScheduler`]):

A scheduler to be used in combination with `transformer` to denoise the encoded image latents.

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModelWithProjection`]):

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModelWithProjection),

specifically the [clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14) variant,

with an additional projection layer that is initialized with a diagonal matrix with the `hidden_size`

as its dimension.

text_encoder_2 ([`CLIPTextModelWithProjection`]):

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModelWithProjection),

specifically the

[laion/CLIP-ViT-bigG-14-laion2B-39B-b160k](https://huggingface.co/laion/CLIP-ViT-bigG-14-laion2B-39B-b160k)

variant.

text_encoder_3 ([`T5EncoderModel`]):

Frozen text-encoder. Stable Diffusion 3 uses

[T5](https://huggingface.co/docs/transformers/model_doc/t5#transformers.T5EncoderModel), specifically the

[t5-v1_1-xxl](https://huggingface.co/google/t5-v1_1-xxl) variant.

tokenizer (`CLIPTokenizer`):

Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

tokenizer_2 (`CLIPTokenizer`):

Second Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

tokenizer_3 (`T5TokenizerFast`):

Tokenizer of class

[T5Tokenizer](https://huggingface.co/docs/transformers/model_doc/t5#transformers.T5Tokenizer).

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#stablediffusion3pagpipeline

|

#stablediffusion3pagpipeline

|

.md

|

145_15

|

StableDiffusion3PAGImg2ImgPipeline

[PAG pipeline](https://huggingface.co/docs/diffusers/main/en/using-diffusers/pag) for image-to-image generation

using Stable Diffusion 3.

Args:

transformer ([`SD3Transformer2DModel`]):

Conditional Transformer (MMDiT) architecture to denoise the encoded image latents.

scheduler ([`FlowMatchEulerDiscreteScheduler`]):

A scheduler to be used in combination with `transformer` to denoise the encoded image latents.

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) Model to encode and decode images to and from latent representations.

text_encoder ([`CLIPTextModelWithProjection`]):

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModelWithProjection),

specifically the [clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14) variant,

with an additional projection layer that is initialized with a diagonal matrix with the `hidden_size`

as its dimension.

text_encoder_2 ([`CLIPTextModelWithProjection`]):

[CLIP](https://huggingface.co/docs/transformers/model_doc/clip#transformers.CLIPTextModelWithProjection),

specifically the

[laion/CLIP-ViT-bigG-14-laion2B-39B-b160k](https://huggingface.co/laion/CLIP-ViT-bigG-14-laion2B-39B-b160k)

variant.

text_encoder_3 ([`T5EncoderModel`]):

Frozen text-encoder. Stable Diffusion 3 uses

[T5](https://huggingface.co/docs/transformers/model_doc/t5#transformers.T5EncoderModel), specifically the

[t5-v1_1-xxl](https://huggingface.co/google/t5-v1_1-xxl) variant.

tokenizer (`CLIPTokenizer`):

Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

tokenizer_2 (`CLIPTokenizer`):

Second Tokenizer of class

[CLIPTokenizer](https://huggingface.co/docs/transformers/v4.21.0/en/model_doc/clip#transformers.CLIPTokenizer).

tokenizer_3 (`T5TokenizerFast`):

Tokenizer of class

[T5Tokenizer](https://huggingface.co/docs/transformers/model_doc/t5#transformers.T5Tokenizer).

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#stablediffusion3pagimg2imgpipeline

|

#stablediffusion3pagimg2imgpipeline

|

.md

|

145_16

|

PixArtSigmaPAGPipeline

[PAG pipeline](https://huggingface.co/docs/diffusers/main/en/using-diffusers/pag) for text-to-image generation

using PixArt-Sigma.

- all

- __call__

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/pag.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/pag/#pixartsigmapagpipeline

|

#pixartsigmapagpipeline

|

.md

|

145_17

|

<!--Copyright 2024 The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/self_attention_guidance.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/self_attention_guidance/

|

.md

|

146_0

|

|

[Improving Sample Quality of Diffusion Models Using Self-Attention Guidance](https://huggingface.co/papers/2210.00939) is by Susung Hong et al.

The abstract from the paper is:

*Denoising diffusion models (DDMs) have attracted attention for their exceptional generation quality and diversity. This success is largely attributed to the use of class- or text-conditional diffusion guidance methods, such as classifier and classifier-free guidance. In this paper, we present a more comprehensive perspective that goes beyond the traditional guidance methods. From this generalized perspective, we introduce novel condition- and training-free strategies to enhance the quality of generated images. As a simple solution, blur guidance improves the suitability of intermediate samples for their fine-scale information and structures, enabling diffusion models to generate higher quality samples with a moderate guidance scale. Improving upon this, Self-Attention Guidance (SAG) uses the intermediate self-attention maps of diffusion models to enhance their stability and efficacy. Specifically, SAG adversarially blurs only the regions that diffusion models attend to at each iteration and guides them accordingly. Our experimental results show that our SAG improves the performance of various diffusion models, including ADM, IDDPM, Stable Diffusion, and DiT. Moreover, combining SAG with conventional guidance methods leads to further improvement.*

You can find additional information about Self-Attention Guidance on the [project page](https://ku-cvlab.github.io/Self-Attention-Guidance), [original codebase](https://github.com/KU-CVLAB/Self-Attention-Guidance), and try it out in a [demo](https://huggingface.co/spaces/susunghong/Self-Attention-Guidance) or [notebook](https://colab.research.google.com/github/SusungHong/Self-Attention-Guidance/blob/main/SAG_Stable.ipynb).

<Tip>

Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

</Tip>

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/self_attention_guidance.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/self_attention_guidance/#self-attention-guidance

|

#self-attention-guidance

|

.md

|

146_1

|

StableDiffusionSAGPipeline

Pipeline for text-to-image generation using Stable Diffusion.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods

implemented for all pipelines (downloading, saving, running on a particular device, etc.).

The pipeline also inherits the following loading methods:

- [`~loaders.TextualInversionLoaderMixin.load_textual_inversion`] for loading textual inversion embeddings

- [`~loaders.IPAdapterMixin.load_ip_adapter`] for loading IP Adapters

Args:

vae ([`AutoencoderKL`]):

Variational Auto-Encoder (VAE) model to encode and decode images to and from latent representations.

text_encoder ([`~transformers.CLIPTextModel`]):

Frozen text-encoder ([clip-vit-large-patch14](https://huggingface.co/openai/clip-vit-large-patch14)).

tokenizer ([`~transformers.CLIPTokenizer`]):

A `CLIPTokenizer` to tokenize text.

unet ([`UNet2DConditionModel`]):

A `UNet2DConditionModel` to denoise the encoded image latents.

scheduler ([`SchedulerMixin`]):

A scheduler to be used in combination with `unet` to denoise the encoded image latents. Can be one of

[`DDIMScheduler`], [`LMSDiscreteScheduler`], or [`PNDMScheduler`].

safety_checker ([`StableDiffusionSafetyChecker`]):

Classification module that estimates whether generated images could be considered offensive or harmful.

Please refer to the [model card](https://huggingface.co/stable-diffusion-v1-5/stable-diffusion-v1-5) for

more details about a model's potential harms.

feature_extractor ([`~transformers.CLIPImageProcessor`]):

A `CLIPImageProcessor` to extract features from generated images; used as inputs to the `safety_checker`.

- __call__

- all

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/self_attention_guidance.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/self_attention_guidance/#stablediffusionsagpipeline

|

#stablediffusionsagpipeline

|

.md

|

146_2

|

StableDiffusionPipelineOutput

Output class for Stable Diffusion pipelines.

Args:

images (`List[PIL.Image.Image]` or `np.ndarray`)

List of denoised PIL images of length `batch_size` or NumPy array of shape `(batch_size, height, width,

num_channels)`.

nsfw_content_detected (`List[bool]`)

List indicating whether the corresponding generated image contains "not-safe-for-work" (nsfw) content or

`None` if safety checking could not be performed.
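
As an illustration of how the two fields relate, the sketch below models the output with a small dataclass and filters out flagged images. The class `OutputSketch` and the helper `keep_unflagged` are hypothetical and only mirror the field names documented above; they are not the library's classes.

```python
from dataclasses import dataclass
from typing import Any, List, Optional


@dataclass
class OutputSketch:
    """Toy stand-in mirroring the documented fields."""

    images: List[Any]
    nsfw_content_detected: Optional[List[bool]]


def keep_unflagged(output: OutputSketch) -> List[Any]:
    """Return only the images that were not flagged by the safety checker."""
    if output.nsfw_content_detected is None:
        # Safety checking could not be performed, so nothing is filtered.
        return list(output.images)
    return [
        image
        for image, flagged in zip(output.images, output.nsfw_content_detected)
        if not flagged
    ]


checked = OutputSketch(images=["img_0", "img_1"], nsfw_content_detected=[False, True])
unchecked = OutputSketch(images=["img_0", "img_1"], nsfw_content_detected=None)
```

The two lists are aligned by index, so the flag at position `i` refers to the image at position `i`.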

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/self_attention_guidance.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/self_attention_guidance/#stablediffusionoutput

|

#stablediffusionoutput

|

.md

|

146_3

|

<!--Copyright 2024 Marigold authors and The HuggingFace Team. All rights reserved.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on

an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the

specific language governing permissions and limitations under the License.

-->

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/marigold.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/marigold/

|

.md

|

147_0

|

|

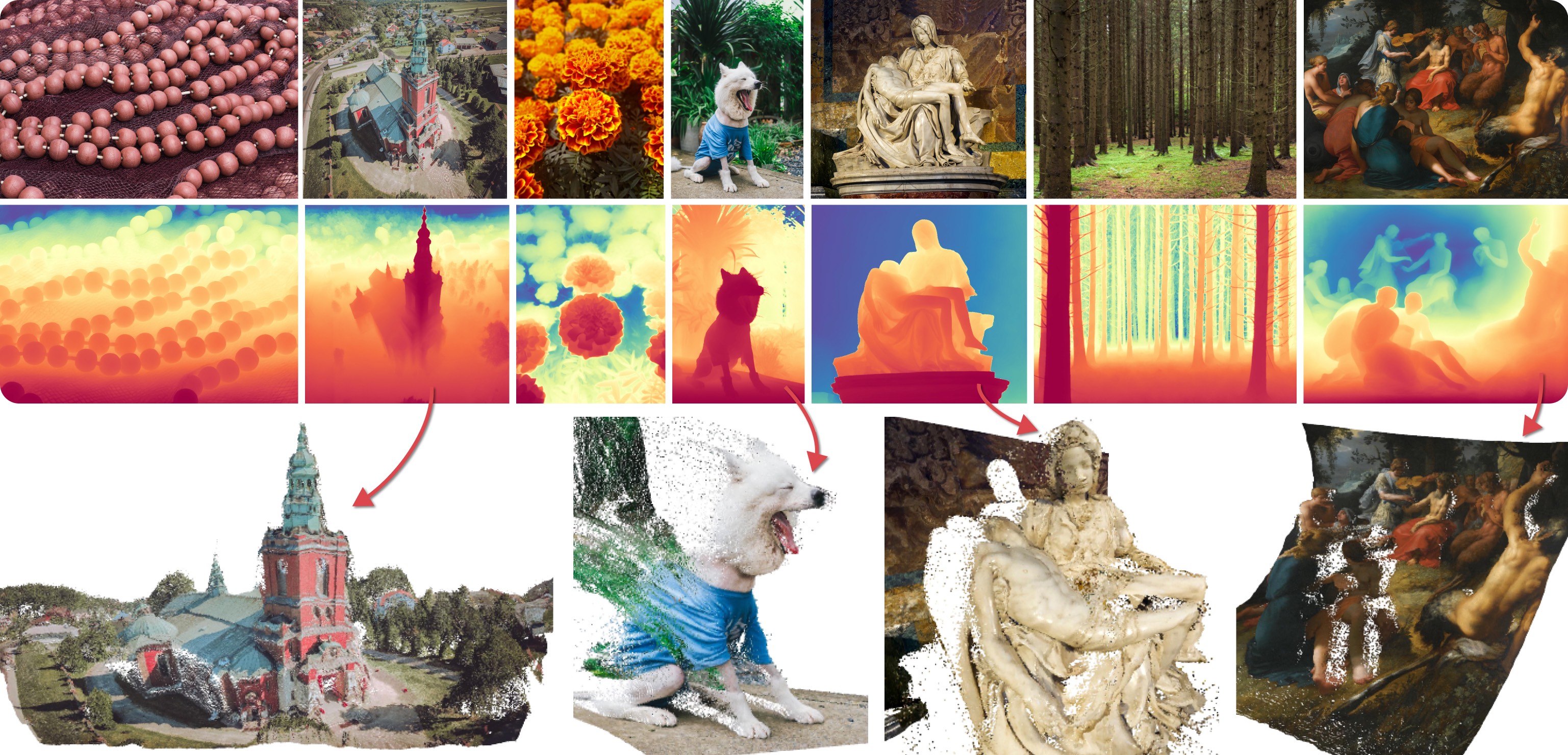

Marigold was proposed in [Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation](https://huggingface.co/papers/2312.02145), a CVPR 2024 Oral paper by [Bingxin Ke](http://www.kebingxin.com/), [Anton Obukhov](https://www.obukhov.ai/), [Shengyu Huang](https://shengyuh.github.io/), [Nando Metzger](https://nandometzger.github.io/), [Rodrigo Caye Daudt](https://rcdaudt.github.io/), and [Konrad Schindler](https://scholar.google.com/citations?user=FZuNgqIAAAAJ&hl=en).

The idea is to repurpose the rich generative prior of Text-to-Image Latent Diffusion Models (LDMs) for traditional computer vision tasks.

Initially, this idea was explored to fine-tune Stable Diffusion for Monocular Depth Estimation, as shown in the teaser above.

Later,

- [Tianfu Wang](https://tianfwang.github.io/) trained the first Latent Consistency Model (LCM) of Marigold, which unlocked fast single-step inference;

- [Kevin Qu](https://www.linkedin.com/in/kevin-qu-b3417621b/?locale=en_US) extended the approach to Surface Normals Estimation;

- [Anton Obukhov](https://www.obukhov.ai/) contributed the pipelines and documentation into diffusers (enabled and supported by [YiYi Xu](https://yiyixuxu.github.io/) and [Sayak Paul](https://sayak.dev/)).

The abstract from the paper is:

*Monocular depth estimation is a fundamental computer vision task. Recovering 3D depth from a single image is geometrically ill-posed and requires scene understanding, so it is not surprising that the rise of deep learning has led to a breakthrough. The impressive progress of monocular depth estimators has mirrored the growth in model capacity, from relatively modest CNNs to large Transformer architectures. Still, monocular depth estimators tend to struggle when presented with images with unfamiliar content and layout, since their knowledge of the visual world is restricted by the data seen during training, and challenged by zero-shot generalization to new domains. This motivates us to explore whether the extensive priors captured in recent generative diffusion models can enable better, more generalizable depth estimation. We introduce Marigold, a method for affine-invariant monocular depth estimation that is derived from Stable Diffusion and retains its rich prior knowledge. The estimator can be fine-tuned in a couple of days on a single GPU using only synthetic training data. It delivers state-of-the-art performance across a wide range of datasets, including over 20% performance gains in specific cases. Project page: https://marigoldmonodepth.github.io.*

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/marigold.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/marigold/#marigold-pipelines-for-computer-vision-tasks

|

#marigold-pipelines-for-computer-vision-tasks

|

.md

|

147_1

|

Each pipeline supports one computer vision task: it takes an RGB image as input and produces a *prediction* of the modality of interest, such as a depth map of the input image.

Currently, the following tasks are implemented:

| Pipeline | Predicted Modalities | Demos |
|---|---|:---:|
| [MarigoldDepthPipeline](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/marigold/pipeline_marigold_depth.py) | [Depth](https://en.wikipedia.org/wiki/Depth_map), [Disparity](https://en.wikipedia.org/wiki/Binocular_disparity) | [Fast Demo (LCM)](https://huggingface.co/spaces/prs-eth/marigold-lcm), [Slow Original Demo (DDIM)](https://huggingface.co/spaces/prs-eth/marigold) |
| [MarigoldNormalsPipeline](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/marigold/pipeline_marigold_normals.py) | [Surface normals](https://en.wikipedia.org/wiki/Normal_mapping) | [Fast Demo (LCM)](https://huggingface.co/spaces/prs-eth/marigold-normals-lcm) |
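As a minimal sketch of how one of these pipelines might be called, the helper below loads the depth pipeline, runs it on a single image, and returns the raw prediction together with a colorized visualization. The helper name `estimate_depth` is hypothetical, and the checkpoint name `prs-eth/marigold-depth-lcm-v1-0`, the `fp16` variant, and the `cuda` device are assumptions that may need adjusting for your setup. Imports are deferred so that defining the function does not itself require a GPU or a model download.

```python
def estimate_depth(image_path_or_url,
                   checkpoint="prs-eth/marigold-depth-lcm-v1-0",  # assumed checkpoint name
                   device="cuda"):                                # assumed device
    """Hypothetical helper: run Marigold depth estimation on one image."""
    # Deferred imports: only needed when the helper is actually called.
    import torch
    import diffusers

    pipe = diffusers.MarigoldDepthPipeline.from_pretrained(
        checkpoint, variant="fp16", torch_dtype=torch.float16
    ).to(device)

    image = diffusers.utils.load_image(image_path_or_url)

    # Calling with only the image relies on the checkpoint's default settings.
    output = pipe(image)

    # Colorize the prediction for inspection; returns a list of PIL images.
    vis = pipe.image_processor.visualize_depth(output.prediction)
    return output.prediction, vis[0]
```

Calling `estimate_depth("photo.jpg")` would download the checkpoint on first use; swapping in `MarigoldNormalsPipeline` with a normals checkpoint follows the same pattern.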

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/marigold.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/marigold/#available-pipelines

|

#available-pipelines

|

.md

|

147_2

|

The original checkpoints can be found under the [PRS-ETH](https://huggingface.co/prs-eth/) Hugging Face organization.

<Tip>

Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines. To learn more about reducing the memory usage of this pipeline, refer to the ["Reduce memory usage"](../../using-diffusers/svd#reduce-memory-usage) section.

</Tip>

<Tip warning={true}>

Marigold pipelines were designed and tested only with `DDIMScheduler` and `LCMScheduler`.

Depending on the scheduler, the number of inference steps required to get reliable predictions varies, and there is no universal value that works best across schedulers.

Because of that, the default value of `num_inference_steps` in the `__call__` method of the pipeline is set to `None` (see the API reference).

Unless set explicitly, its value will be taken from the checkpoint configuration `model_index.json`.

This is done to ensure high-quality predictions when calling the pipeline with just the `image` argument.

</Tip>
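The fallback described in the warning above can be illustrated with a small sketch. This is not the library's code: the function name `resolve_num_inference_steps` is hypothetical, and the step counts used below (4 and 10) are made-up stand-ins for whatever a given checkpoint stores in its configuration.

```python
def resolve_num_inference_steps(num_inference_steps, default_denoising_steps):
    """Illustrative only: pick the explicit value, else the checkpoint default."""
    if num_inference_steps is None:
        # No explicit value: fall back to the value stored with the checkpoint.
        num_inference_steps = default_denoising_steps
    if num_inference_steps is None:
        raise ValueError("No step count given and the checkpoint provides no default.")
    if num_inference_steps < 1:
        raise ValueError("The number of inference steps must be positive.")
    return num_inference_steps


# Hypothetical defaults for a short-schedule and a full-schedule checkpoint.
short_schedule_steps = resolve_num_inference_steps(None, default_denoising_steps=4)
full_schedule_steps = resolve_num_inference_steps(None, default_denoising_steps=10)
explicit_steps = resolve_num_inference_steps(2, default_denoising_steps=4)
```

An explicit argument always wins, so the checkpoint default only matters when the pipeline is called with just the `image` argument.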

See also Marigold [usage examples](marigold_usage).

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/marigold.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/marigold/#available-checkpoints

|

#available-checkpoints

|

.md

|

147_3

|

MarigoldDepthPipeline

Pipeline for monocular depth estimation using the Marigold method: https://marigoldmonodepth.github.io.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods the

library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.)

Args:

unet (`UNet2DConditionModel`):

Conditional U-Net to denoise the depth latent, conditioned on image latent.

vae (`AutoencoderKL`):

Variational Auto-Encoder (VAE) Model to encode and decode images and predictions to and from latent

representations.

scheduler (`DDIMScheduler` or `LCMScheduler`):

A scheduler to be used in combination with `unet` to denoise the encoded image latents.

text_encoder (`CLIPTextModel`):

Text-encoder, for empty text embedding.

tokenizer (`CLIPTokenizer`):

CLIP tokenizer.

prediction_type (`str`, *optional*):

Type of predictions made by the model.

scale_invariant (`bool`, *optional*):

A model property specifying whether the predicted depth maps are scale-invariant. This value must be set in

the model config. When used together with the `shift_invariant=True` flag, the model is also called

"affine-invariant". NB: overriding this value is not supported.

shift_invariant (`bool`, *optional*):

A model property specifying whether the predicted depth maps are shift-invariant. This value must be set in

the model config. When used together with the `scale_invariant=True` flag, the model is also called

"affine-invariant". NB: overriding this value is not supported.

default_denoising_steps (`int`, *optional*):

The minimum number of denoising diffusion steps that are required to produce a prediction of reasonable

quality with the given model. This value must be set in the model config. When the pipeline is called

without explicitly setting `num_inference_steps`, the default value is used. This is required to ensure

reasonable results with various model flavors compatible with the pipeline, such as those relying on very

short denoising schedules (`LCMScheduler`) and those with full diffusion schedules (`DDIMScheduler`).

default_processing_resolution (`int`, *optional*):

The recommended value of the `processing_resolution` parameter of the pipeline. This value must be set in

the model config. When the pipeline is called without explicitly setting `processing_resolution`, the

default value is used. This is required to ensure reasonable results with various model flavors trained

with varying optimal processing resolution values.

- all

- __call__
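To make the term "affine-invariant" concrete: such a prediction is only defined up to an unknown scale and shift, so comparing it with metric depth typically involves fitting those two numbers first. The sketch below shows one common way to do this with ordinary least squares over a flat list of pixel values. It is not part of the pipeline API; the function name `align_scale_shift` and the sample values are hypothetical.

```python
def align_scale_shift(pred, target):
    """Least-squares fit of target ~= scale * pred + shift (illustrative sketch)."""
    n = len(pred)
    if n != len(target) or n < 2:
        raise ValueError("Need two sequences of equal length with at least 2 values.")
    mean_p = sum(pred) / n
    mean_t = sum(target) / n
    var_p = sum((p - mean_p) ** 2 for p in pred)
    if var_p == 0:
        raise ValueError("Prediction is constant; scale is undetermined.")
    cov = sum((p - mean_p) * (t - mean_t) for p, t in zip(pred, target))
    scale = cov / var_p
    shift = mean_t - scale * mean_p
    return scale, shift


# Hypothetical data: predictions in [0, 1] and reference depth in meters,
# related by depth = 8 * pred + 2.
pred = [0.0, 0.25, 0.5, 1.0]
target = [2.0 + 8.0 * p for p in pred]

scale, shift = align_scale_shift(pred, target)
aligned = [scale * p + shift for p in pred]
```

After alignment, `aligned` can be compared with `target` using the usual depth metrics; without it, the raw values in [0, 1] carry only relative depth information.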

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/marigold.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/marigold/#marigolddepthpipeline

|

#marigolddepthpipeline

|

.md

|

147_4

|

MarigoldNormalsPipeline

Pipeline for monocular normals estimation using the Marigold method: https://marigoldmonodepth.github.io.

This model inherits from [`DiffusionPipeline`]. Check the superclass documentation for the generic methods the

library implements for all the pipelines (such as downloading or saving, running on a particular device, etc.)

Args:

unet (`UNet2DConditionModel`):

Conditional U-Net to denoise the normals latent, conditioned on image latent.

vae (`AutoencoderKL`):

Variational Auto-Encoder (VAE) Model to encode and decode images and predictions to and from latent

representations.

scheduler (`DDIMScheduler` or `LCMScheduler`):

A scheduler to be used in combination with `unet` to denoise the encoded image latents.

text_encoder (`CLIPTextModel`):

Text-encoder, for empty text embedding.

tokenizer (`CLIPTokenizer`):

CLIP tokenizer.

prediction_type (`str`, *optional*):

Type of predictions made by the model.

use_full_z_range (`bool`, *optional*):

Whether the normals predicted by this model utilize the full range of the Z dimension, or only its positive

half.

default_denoising_steps (`int`, *optional*):

The minimum number of denoising diffusion steps that are required to produce a prediction of reasonable

quality with the given model. This value must be set in the model config. When the pipeline is called

without explicitly setting `num_inference_steps`, the default value is used. This is required to ensure

reasonable results with various model flavors compatible with the pipeline, such as those relying on very

short denoising schedules (`LCMScheduler`) and those with full diffusion schedules (`DDIMScheduler`).

default_processing_resolution (`int`, *optional*):

The recommended value of the `processing_resolution` parameter of the pipeline. This value must be set in

the model config. When the pipeline is called without explicitly setting `processing_resolution`, the

default value is used. This is required to ensure reasonable results with various model flavors trained

with varying optimal processing resolution values.

- all

- __call__
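As a rough illustration of what a processing resolution implies, the sketch below computes the size an image would have if its longer side were resized to the given value while keeping the aspect ratio. This is an assumption about how the parameter is interpreted, not a copy of the library's resizing code: the function name `processing_size` is hypothetical, treating `0` as "keep the native size" is an assumption, and any padding the pipeline applies internally is ignored.

```python
def processing_size(height, width, processing_resolution):
    """Illustrative sketch: size after scaling the longer side to the target."""
    if height < 1 or width < 1:
        raise ValueError("Image dimensions must be positive.")
    if processing_resolution < 0:
        raise ValueError("processing_resolution must be non-negative.")
    if processing_resolution == 0:
        # Assumption: 0 means "process at the input resolution".
        return height, width
    max_edge = max(height, width)
    new_height = max(1, round(height * processing_resolution / max_edge))
    new_width = max(1, round(width * processing_resolution / max_edge))
    return new_height, new_width


# Hypothetical inputs: a small landscape image and a Full HD frame.
upscaled = processing_size(480, 640, 768)
downscaled = processing_size(1080, 1920, 768)
native = processing_size(480, 640, 0)
```

Both example images end up with a longer side of 768 pixels, which is why one default value can serve inputs of very different sizes.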

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/marigold.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/marigold/#marigoldnormalspipeline

|

#marigoldnormalspipeline

|

.md

|

147_5

|

MarigoldDepthOutput

Output class for Marigold monocular depth prediction pipeline.

Args:

prediction (`np.ndarray`, `torch.Tensor`):

Predicted depth maps with values in the range [0, 1]. The shape is always $numimages \times 1 \times height \times width$, regardless of whether the images were passed as a 4D array or a list.

uncertainty (`None`, `np.ndarray`, `torch.Tensor`):

Uncertainty maps computed from the ensemble, with values in the range [0, 1]. The shape is $numimages \times 1 \times height \times width$.

latent (`None`, `torch.Tensor`):

Latent features corresponding to the predictions, compatible with the `latents` argument of the pipeline.

The shape is $numimages * numensemble \times 4 \times latentheight \times latentwidth$.
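The shapes listed above can be spelled out with a small worked example. The helper `expected_depth_output_shapes` is hypothetical and only does the arithmetic; the sample numbers (2 images, an ensemble of 5, and a 60 by 80 latent grid, which would correspond to a 480 by 640 processing size under an assumed 8x VAE downsampling) are illustrative.

```python
def expected_depth_output_shapes(num_images, height, width,
                                 ensemble_size, latent_height, latent_width):
    """Illustrative arithmetic for the documented output shapes."""
    return {
        # One single-channel map per input image.
        "prediction": (num_images, 1, height, width),
        # Same layout as the prediction, when uncertainty is returned.
        "uncertainty": (num_images, 1, height, width),
        # One 4-channel latent per ensemble member of every image.
        "latent": (num_images * ensemble_size, 4, latent_height, latent_width),
    }


shapes = expected_depth_output_shapes(
    num_images=2, height=480, width=640,
    ensemble_size=5, latent_height=60, latent_width=80,
)
```

Note that only the latent grows with the ensemble size; the prediction and uncertainty always have one entry per input image.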

|

/Users/nielsrogge/Documents/python_projecten/diffusers/docs/source/en/api/pipelines/marigold.md

|

https://huggingface.co/docs/diffusers/en/api/pipelines/marigold/#marigolddepthoutput

|

#marigolddepthoutput

|

.md

|

147_6

|

MarigoldNormalsOutput

Output class for Marigold monocular normals prediction pipeline.

Args:

prediction (`np.ndarray`, `torch.Tensor`):

Predicted normals with values in the range [-1, 1]. The shape is always $numimages \times 3 \times height \times width$, regardless of whether the images were passed as a 4D array or a list.

uncertainty (`None`, `np.ndarray`, `torch.Tensor`):

Uncertainty maps computed from the ensemble, with values in the range [0, 1]. The shape is $numimages \times 1 \times height \times width$.

latent (`None`, `torch.Tensor`):

Latent features corresponding to the predictions, compatible with the `latents` argument of the pipeline.

The shape is $numimages * numensemble \times 4 \times latentheight \times latentwidth$.
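Because the normals are stored in the range [-1, 1], viewing them as an image requires mapping each component to a color channel. The sketch below shows a common convention that maps [-1, 1] linearly to [0, 255] per component. The function name `normal_to_rgb` is hypothetical, and the exact rounding used by the library's own visualization helper may differ.

```python
def normal_to_rgb(nx, ny, nz):
    """Illustrative mapping of one normal vector to an 8-bit RGB triple."""
    def to_byte(component):
        # Clamp to the documented range, then map [-1, 1] -> [0, 255].
        component = min(1.0, max(-1.0, component))
        return int(round((component + 1.0) * 0.5 * 255))

    return to_byte(nx), to_byte(ny), to_byte(nz)


# A normal along +Z and one along -X / +Y (hypothetical sample values).
z_axis_color = normal_to_rgb(0.0, 0.0, 1.0)
tilted_color = normal_to_rgb(-1.0, 1.0, 0.0)
```

With this convention a normal along the positive Z axis yields a color with a saturated blue channel, which gives such visualizations their characteristic bluish tint.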

|