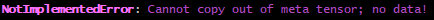

Meta Tensor Issues: Cannot copy out of meta tensor;

#10, opened by v12h

import torch
from diffusers import AutoencoderKLWan, WanImageToVideoPipeline
from huggingface_hub import hf_hub_download
from transformers import CLIPVisionModel

model_id = "Wan-AI/Wan2.1-I2V-14B-480P-Diffusers"
image_encoder = CLIPVisionModel.from_pretrained(
    model_id, subfolder="image_encoder", torch_dtype=torch.float32
)
vae = AutoencoderKLWan.from_pretrained(model_id, subfolder="vae", torch_dtype=torch.float32)
pipe = WanImageToVideoPipeline.from_pretrained(
    model_id, vae=vae, image_encoder=image_encoder, torch_dtype=torch.bfloat16
)
pipe.to("cuda")
# (OPTION1) pipe.enable_sequential_cpu_offload()
# (OPTION2) pipe.enable_model_cpu_offload()
# (OPTION3) none. no cpu offloading.

print("Loading LoRA...")
causvid_path = hf_hub_download(
    repo_id="Kijai/WanVideo_comfy",
    filename="Wan21_CausVid_14B_T2V_lora_rank32.safetensors",
)
pipe.load_lora_weights(causvid_path, adapter_name="causvid_lora")
pipe.set_adapters(["causvid_lora"], adapter_weights=[0.95])
pipe.fuse_lora()
print("LoRA loaded")

Environment: the latest Diffusers from git and the latest PyTorch nightly with CUDA 12.8 (cu128).

RTX 5090, 32 GB VRAM

64 GB system RAM

I'm using the new pipe.fuse_lora() method that was recommended to me for using LoRAs with WanImageToVideoPipeline.

Other LoRAs do work for me, though. I'm just leaving this here in case anybody else runs into these issues as well.
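For anyone hitting the same error, it may help to find out which pipeline components still hold parameters on the meta device before calling pipe.fuse_lora(). The snippet below is only a diagnostic sketch, not a fix: find_meta_parameters is a hypothetical helper name, and it assumes each model component exposes named_parameters() and that each parameter has an is_meta attribute, as PyTorch modules and tensors do.

```python
from typing import Any, Dict, List, Tuple


def find_meta_parameters(components: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Return (component_name, parameter_name) pairs for parameters on the meta device.

    Hypothetical helper: it only inspects the objects, it does not move or load weights.
    """
    hits: List[Tuple[str, str]] = []
    for component_name, component in components.items():
        # Schedulers, tokenizers and empty (None) slots have no parameters; skip them.
        named_parameters = getattr(component, "named_parameters", None)
        if named_parameters is None:
            continue
        for parameter_name, parameter in named_parameters():
            # A meta tensor has a shape and dtype but no data, so it cannot be copied.
            if getattr(parameter, "is_meta", False):
                hits.append((component_name, parameter_name))
    return hits
```

With the pipeline from the post, this could be called as find_meta_parameters(pipe.components) right after pipe.load_lora_weights(...). An empty list would suggest the meta tensors come from somewhere else, for example from the offloading hooks; a non-empty list at least names the component and weights involved.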