---
language:
- en
license: bsd-3-clause-clear
tags:
- lidar
- point-cloud
- surface-normals
- autonomous-driving
annotations_creators:
- machine-generated
pretty_name: LiSu
size_categories:
- 10K<n<100K
paperswithcode_id: lisu
configs:
- config_name: default
  data_files:
  - split: train
    path:
    - Town01/**/*.parquet
    - Town03/**/*.parquet
    - Town05/**/*.parquet
    - Town07/**/*.parquet
  - split: test
    path:
    - Town02/**/*.parquet
    - Town04/**/*.parquet
    - Town06/**/*.parquet
    - Town12/**/*.parquet
  - split: val
    path:
    - Town10/**/*.parquet
dataset_info:
  features:
  - name: x
    dtype: float32
  - name: 'y'
    dtype: float32
  - name: z
    dtype: float32
  - name: intensity
    dtype: float32
  - name: surf_norm_x
    dtype: float32
  - name: surf_norm_y
    dtype: float32
  - name: surf_norm_z
    dtype: float32
---

LiSu: A Dataset for LiDAR Surface Normal Estimation

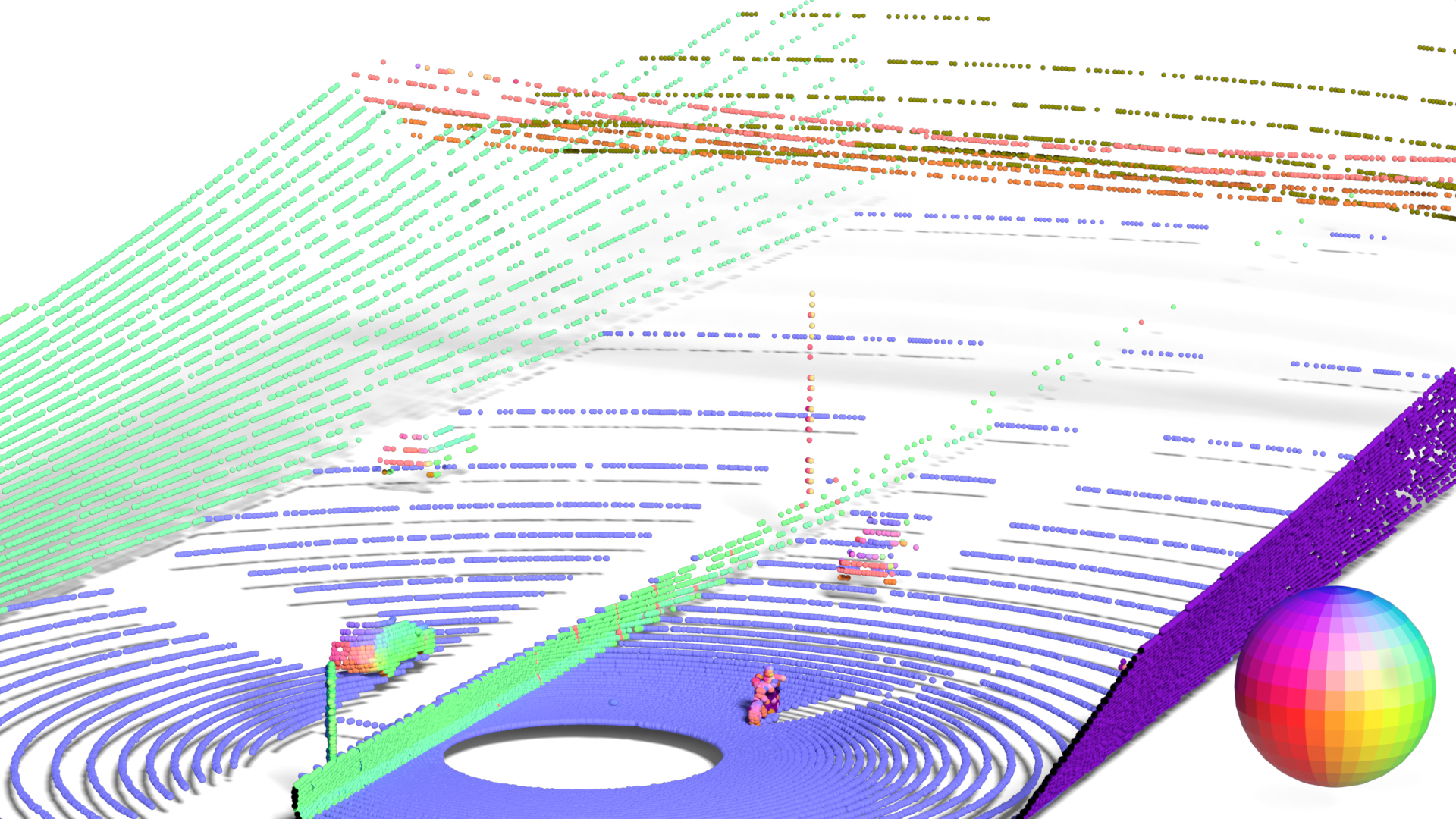

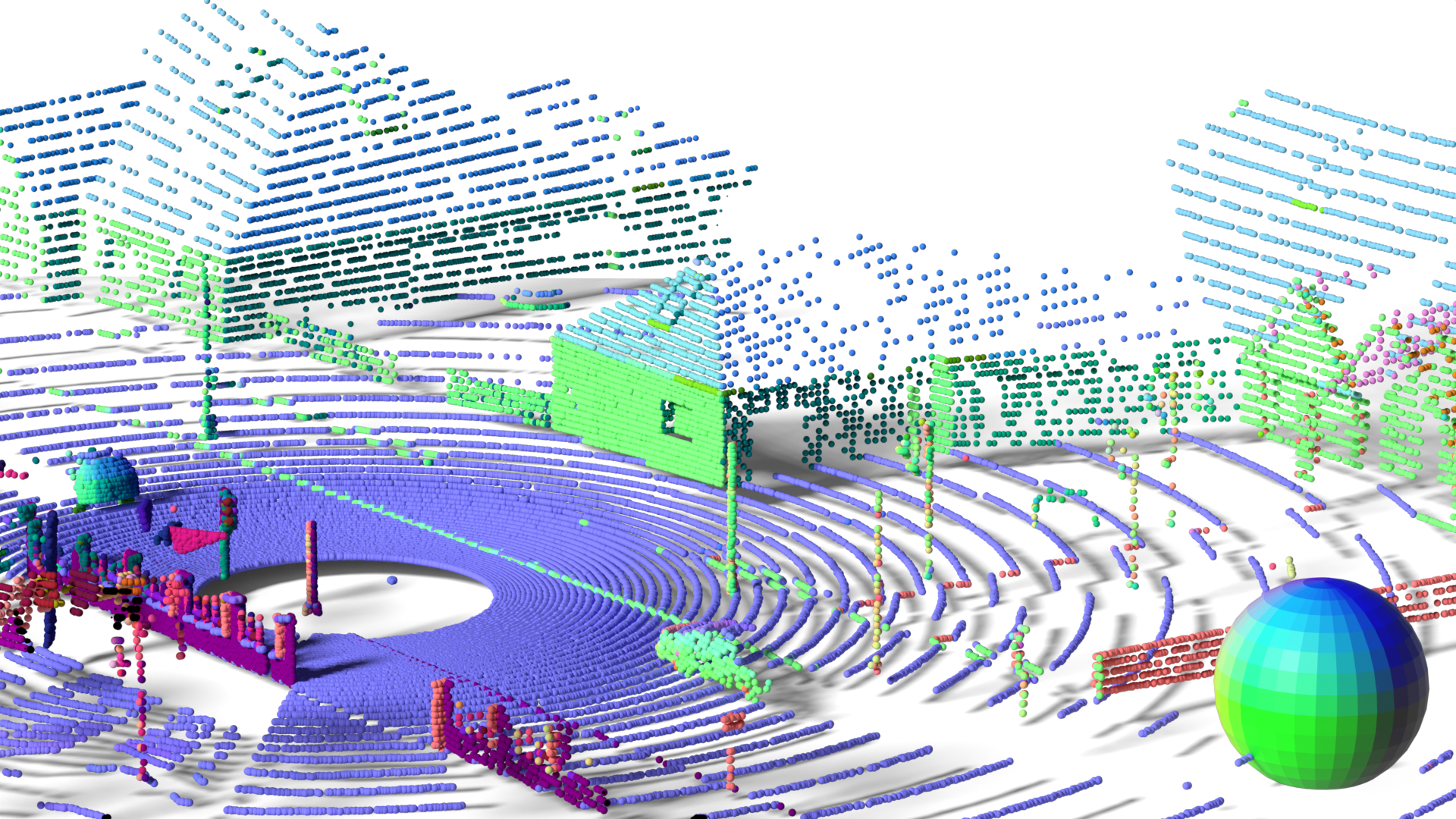

LiSu provides synthetic LiDAR point clouds in which every point is annotated with a surface normal vector. The dataset is generated with the CARLA simulator, which allows diverse environmental conditions for robust training and evaluation. In the example visualization from LiSu, surface normals are linearly mapped to the RGB color space for intuitive viewing.
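The linear mapping of normals to colors can be sketched as follows. This is a minimal illustration, assuming unit-length normals with components in [-1, 1] and the common convention `rgb = (n + 1) / 2`; the exact mapping used for the LiSu visualization is not specified here, and the function name `normals_to_rgb` is hypothetical.

```python
import numpy as np


def normals_to_rgb(normals: np.ndarray) -> np.ndarray:
    """Map normals with components in [-1, 1] to RGB colors in [0, 1].

    Assumed convention (not confirmed by the dataset card): rgb = (n + 1) / 2.
    """
    normals = np.asarray(normals, dtype=np.float32)
    # Linear map per component: -1 -> 0.0, 0 -> 0.5, +1 -> 1.0.
    rgb = 0.5 * (normals + 1.0)
    # Clip to guard against small numerical overshoot in the input.
    return np.clip(rgb, 0.0, 1.0)


# Three made-up normals pointing along +x, +z, and -y.
example_normals = np.array(
    [[1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0],
     [0.0, -1.0, 0.0]],
    dtype=np.float32,
)
example_colors = normals_to_rgb(example_normals)
```

With this convention, the `surf_norm_x`, `surf_norm_y`, and `surf_norm_z` columns of a frame can be stacked into an `(N, 3)` array and passed to the function to obtain per-point colors.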

Dataset Details

Dataset Description

We generate our dataset using CARLA, a simulation framework based on the Unreal Engine. Specifically, we use nine of CARLA's twelve pre-built maps, excluding two reserved for the CARLA Autonomous Driving Challenges and one undecorated map with low geometric detail (i.e., without buildings, sidewalks, etc.). The selected maps represent diverse urban and rural environments, including downtown areas, small towns, and multi-lane highways. For each simulation, we populate the scenes with a large number of dynamic actors, such as vehicles (cars, trucks, buses, vans, motorcycles, bicycles) and pedestrians (adults, children, police), as well as static props (barrels, garbage cans, road barriers, etc.). The dynamic actors exhibit realistic movement patterns governed by the underlying physics engine and adhere to real-world traffic rules, such as driving on designated roads and obeying traffic signals.

To capture realistic driving scenarios, we employ a virtual LiDAR sensor mounted atop a car operating in autopilot mode. The LiDAR sensor is configured with 64 laser beams and a vertical field of view spanning from -30° (lower) to 10° (upper). This common sensor configuration strikes a balance between sparsity and density, providing a challenging yet fair evaluation environment. To further mimic real-world conditions, we set the maximum range to 100 meters and add Gaussian noise with a standard deviation of 0.02 meters to the LiDAR point cloud. The sensor captures data at a rate of 10 Hz.
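The sensor settings above can be collected in a small configuration sketch. The dictionary keys follow the attribute names of CARLA's `sensor.lidar.ray_cast` blueprint, but mapping the stated 10 Hz capture rate to `rotation_frequency` is an assumption. The uniform beam spacing and the choice to apply the noise along each ray are also assumptions for illustration, not details confirmed by this card; `add_range_noise` is a hypothetical helper.

```python
import numpy as np

# Values are taken from the text; the key names are assumed to correspond to
# CARLA's ray-cast LiDAR blueprint attributes.
LIDAR_ATTRIBUTES = {
    "channels": 64,              # number of laser beams
    "upper_fov": 10.0,           # degrees
    "lower_fov": -30.0,          # degrees
    "range": 100.0,              # meters
    "noise_stddev": 0.02,        # meters
    "rotation_frequency": 10.0,  # Hz (assumed to match the 10 Hz capture rate)
}

# Assumption: beams are spread uniformly over the vertical field of view.
beam_elevations_deg = np.linspace(
    LIDAR_ATTRIBUTES["lower_fov"],
    LIDAR_ATTRIBUTES["upper_fov"],
    LIDAR_ATTRIBUTES["channels"],
)
vertical_resolution_deg = beam_elevations_deg[1] - beam_elevations_deg[0]


def add_range_noise(points, stddev, rng):
    """Shift each point along its ray from the sensor origin by Gaussian noise.

    Assumption: the noise acts on the measured range, not on each axis.
    """
    points = np.asarray(points, dtype=np.float64)
    ranges = np.linalg.norm(points, axis=1, keepdims=True)
    directions = points / np.maximum(ranges, 1e-12)
    noise = rng.normal(0.0, stddev, size=ranges.shape)
    return points + directions * noise


rng = np.random.default_rng(0)
clean_points = np.array([[10.0, 0.0, 0.0], [0.0, 20.0, -2.0]])
noisy_points = add_range_noise(
    clean_points, LIDAR_ATTRIBUTES["noise_stddev"], rng
)
```

Under the uniform-spacing assumption, 64 beams over a 40° vertical field of view give an angular step of 40/63, roughly 0.63° between adjacent beams.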

CARLA's default LiDAR sensor implementation is limited to position and intensity channels. To enable surface normal collection, we extend CARLA's ray tracer to query surface normals at each intersection point between a ray and a mesh object. These surface normals are then transformed into the sensor's coordinate frame and appended to the LiDAR data. This requires modifications to both CARLA's C++ backend and Python frontend, adding three extra channels to store the x, y, and z components of the normal vector for each LiDAR point.
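The frame change for the normals can be illustrated with a short NumPy sketch. It is not the C++ code from the CARLA modification; it only shows the underlying idea that a normal is a direction and is therefore rotated but not translated. The helper names are hypothetical, and a generic right-handed yaw rotation stands in for the sensor pose (CARLA itself uses a left-handed coordinate system).

```python
import numpy as np


def yaw_rotation(yaw_deg: float) -> np.ndarray:
    """Rotation about the z-axis, mapping sensor-frame vectors to the world frame."""
    yaw = np.deg2rad(yaw_deg)
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([[c, -s, 0.0],
                     [s, c, 0.0],
                     [0.0, 0.0, 1.0]])


def normals_world_to_sensor(normals_world, rot_world_from_sensor):
    """Express world-frame normals in the sensor frame.

    Normals are directions, so only the rotation applies. For normals stored
    as rows, n_sensor = R^T n_world becomes `normals_world @ R`.
    """
    normals_world = np.asarray(normals_world, dtype=np.float64)
    normals_sensor = normals_world @ rot_world_from_sensor
    # Renormalize to remove accumulated floating-point error.
    lengths = np.linalg.norm(normals_sensor, axis=1, keepdims=True)
    return normals_sensor / np.maximum(lengths, 1e-12)


# A sensor yawed by 90 degrees looks along the world +y axis, so a wall normal
# pointing along world +y points along +x in the sensor frame.
rot = yaw_rotation(90.0)
wall_normal_world = np.array([[0.0, 1.0, 0.0]])
wall_normal_sensor = normals_world_to_sensor(wall_normal_world, rot)
```

The same function accepts an `(N, 3)` array, so all normals of a frame can be transformed in one call.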

For each map, we conduct eleven randomly initialized and independent simulation runs. A simulation is terminated early if a prolonged traffic halt, such as a red light, occurs. On average, each simulation lasts approximately 50 seconds, resulting in a total of 50045 labeled frames. To ensure rigorous evaluation, we partition our dataset into training, validation, and testing sets. We assign each map to exactly one split, preventing data leakage (i.e., using the same "city" in multiple splits). One map is designated for validation, while the remaining eight maps are divided equally between the training and testing sets. This results in 25053 training, 22167 testing, and 2825 validation frames.
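The split assignment and frame counts can be checked with a few lines of Python. The map names come from this card's `configs` section and the counts from the paragraph above; the variable names and the `split_of` helper are illustrative only.

```python
# Map-to-split assignment as listed in the dataset configuration.
SPLIT_MAPS = {
    "train": ["Town01", "Town03", "Town05", "Town07"],
    "test": ["Town02", "Town04", "Town06", "Town12"],
    "val": ["Town10"],
}

# Frame counts per split as stated in the text.
FRAME_COUNTS = {"train": 25053, "test": 22167, "val": 2825}

RUNS_PER_MAP = 11
AVG_RUN_SECONDS = 50   # approximate average run length
SENSOR_RATE_HZ = 10

all_maps = [name for maps in SPLIT_MAPS.values() for name in maps]
num_maps = len(all_maps)

# Every map appears in exactly one split, so no "city" leaks across splits.
maps_are_disjoint = len(set(all_maps)) == num_maps

# Rough estimate from the averages: maps * runs * seconds * frames per second.
estimated_frames = num_maps * RUNS_PER_MAP * AVG_RUN_SECONDS * SENSOR_RATE_HZ
total_frames = sum(FRAME_COUNTS.values())


def split_of(map_name: str) -> str:
    """Return the split that a given map belongs to."""
    for split, maps in SPLIT_MAPS.items():
        if map_name in maps:
            return split
    raise KeyError(f"unknown map: {map_name}")
```

The estimate of 49500 frames from the rounded averages is close to the reported total of 50045, and the three split counts sum exactly to that total.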

CARLA Pull Request

Citation

@inproceedings{cvpr2025lisu,
  title={{LiSu: A Dataset and Method for LiDAR Surface Normal Estimation}},
  author={Du\v{s}an Mali\'c and Christian Fruhwirth-Reisinger and Samuel Schulter and Horst Possegger},
  booktitle={IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR)},
  year={2025}
}