gguf quantized version of openaudio

- base model from fishaudio

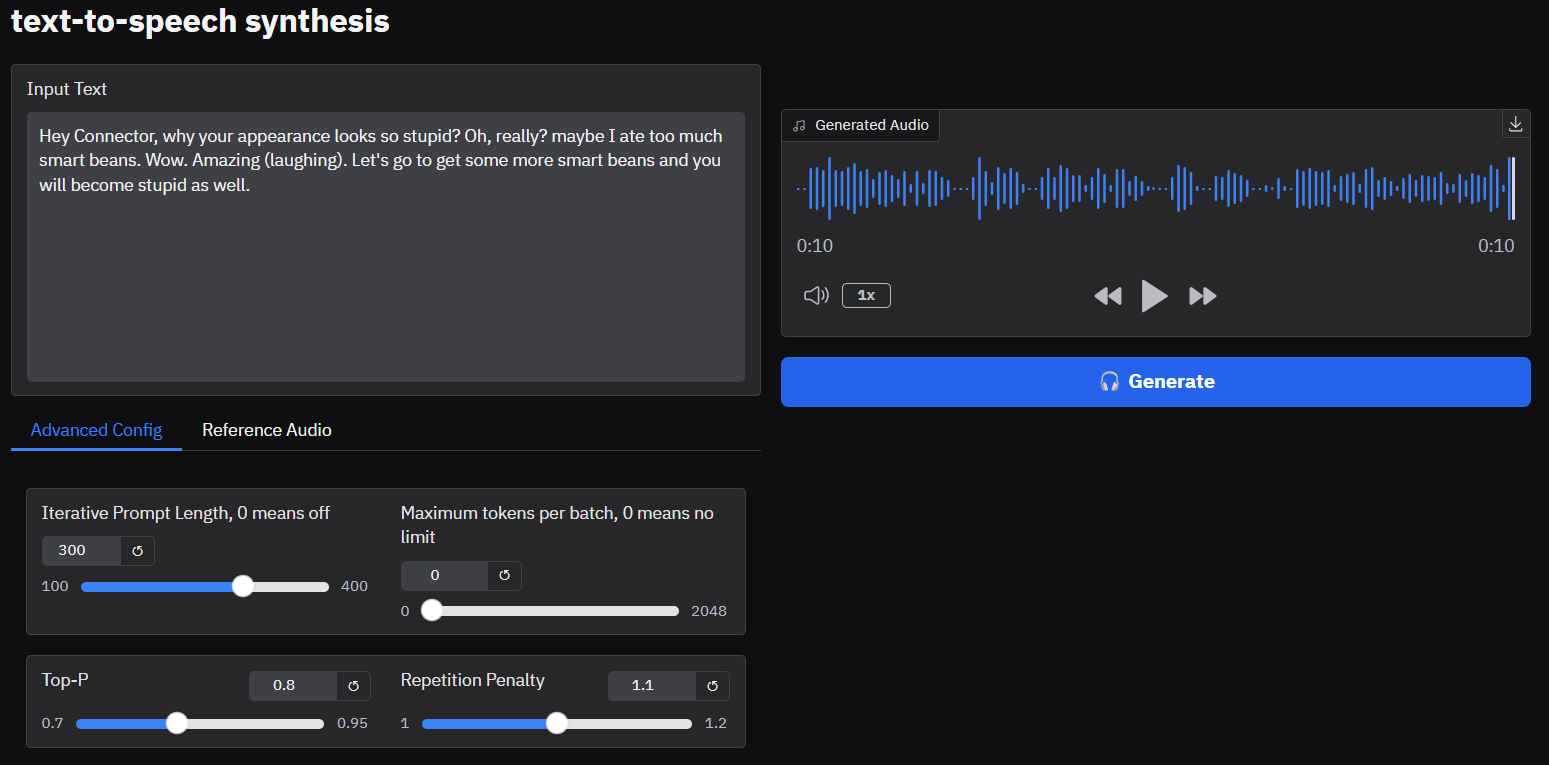

- text-to-speech synthesis

run it with gguf-connector

```
ggc o2
```

| Prompt | Audio Sample |
|---|---|
| Hey Connector, why your appearance looks so stupid?<br>Oh, really? maybe I ate too much smart beans.<br>Wow. Amazing (laughing).<br>Let's go to get some more smart beans and you will become stupid as well. | 🎧 audio-sample-1 |
| Suddenly the plane's engines began failing, and the pilot says there isn't much time, and he'll keep the plane in the air as long as he can, and told his two passengers to take the only two parachutes on board and bail out. The world's smartest man immediately took a parachute and said "I'm the world's smartest man! The world needs me, so I can't die here!", and then jumped out of the plane. The pilot tells the hippie to hurry up and take the other parachute, because there aren't any more. And the hippie says "Relax man. We'll be fine. The world's smartest man took my backpack." | 🎧 audio-sample-2 |

review/reference

- simply execute the command (`ggc o2`) above in a console/terminal
- pick a `codec` and a `model` gguf from the current directory to interact with (see example below)

```
GGUF file(s) available. Select which one for codec:
1. codec-q2_k.gguf
2. codec-q3_k_m.gguf
3. codec-q4_k_m.gguf (recommended)
4. codec-q5_k_m.gguf
5. codec-q6_k.gguf
6. model-bf16.gguf
7. model-f16.gguf
8. model-f32.gguf
Enter your choice (1 to 8): 3

GGUF file(s) available. Select which one for model:
1. codec-q2_k.gguf
2. codec-q3_k_m.gguf
3. codec-q4_k_m.gguf
4. codec-q5_k_m.gguf
5. codec-q6_k.gguf
6. model-bf16.gguf (recommended)
7. model-f16.gguf (for non-cuda user)
8. model-f32.gguf
Enter your choice (1 to 8): _
```

- note: as of the latest update, only the tokenizer is pulled to `models/fish` automatically during the first launch; you need to prepare the codec and model files yourself, and once they are in place it works right away, like the vision connector; mix and match for more flexibility
- run it entirely offline, i.e., from the local URL http://127.0.0.1:7860 with the lazy webui

- gguf-connector (pypi)
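gguf-connector does the picking and serving itself; the Python sketch below is not its code, only a hypothetical helper that mirrors the steps above so you can check a folder before going offline. Assumptions (not confirmed by the connector): the menu numbers `*.gguf` files alphabetically (this reproduces the example, where `codec-q4_k_m.gguf` is choice 3 and `model-bf16.gguf` is choice 6), codec and model files keep the `codec-*` / `model-*` names, the tokenizer folder is `models/fish` under the working directory, and the lazy webui answers at http://127.0.0.1:7860. The function names are made up for illustration.

```python
import urllib.error
import urllib.request
from pathlib import Path


def list_gguf_choices(directory="."):
    """Return the *.gguf files in `directory`, sorted by name.

    Assumption: the menu numbers files alphabetically; that ordering
    reproduces the example above (codec-q4_k_m.gguf is choice 3).
    """
    return sorted(p.name for p in Path(directory).glob("*.gguf"))


def pick(choices, number):
    """Map a 1-based menu number (as typed at the prompt) to a file name."""
    if not 1 <= number <= len(choices):
        raise ValueError(f"choice must be 1 to {len(choices)}, got {number}")
    return choices[number - 1]


def missing_pieces(directory="."):
    """List what still has to be prepared before running offline.

    Assumptions: codec/model files use the codec-*.gguf and model-*.gguf
    names shown above, and the tokenizer lands in ./models/fish.
    """
    root = Path(directory)
    missing = []
    if not any(root.glob("codec-*.gguf")):
        missing.append("codec gguf")
    if not any(root.glob("model-*.gguf")):
        missing.append("model gguf")
    if not (root / "models" / "fish").is_dir():
        missing.append("tokenizer in models/fish")
    return missing


def webui_is_up(url="http://127.0.0.1:7860", timeout=2.0):
    """Return True if an HTTP server answers at `url` (assumed webui address)."""
    # Empty ProxyHandler: never route the local address through a proxy.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # the server answered, just not with a 2xx status
    except OSError:  # URLError, connection refused, timeout
        return False


if __name__ == "__main__":
    # Print the files in menu order, then report what is missing.
    choices = list_gguf_choices()
    for i, name in enumerate(choices, start=1):
        print(f"{i}. {name}")
    print("still missing:", missing_pieces() or "nothing")
    print("webui up:", webui_is_up())
```

Run it from the folder you launch `ggc o2` in: before launch it tells you which pieces are still missing, and after launch `webui_is_up()` confirms the webui is serving locally.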


Model tree for calcuis/openaudio-gguf

- base model: fishaudio/openaudio-s1-mini