Upload 114 files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +9 -0

- LICENSE +21 -0

- README.md +13 -13

- app.py +4 -0

- benchmark-openvino.bat +23 -0

- benchmark.bat +23 -0

- configs/lcm-lora-models.txt +4 -0

- configs/lcm-models.txt +8 -0

- configs/openvino-lcm-models.txt +10 -0

- configs/stable-diffusion-models.txt +7 -0

- controlnet_models/Readme.txt +3 -0

- docs/images/2steps-inference.jpg +0 -0

- docs/images/ARCGPU.png +0 -0

- docs/images/comfyui-workflow.png +3 -0

- docs/images/fastcpu-cli.png +0 -0

- docs/images/fastcpu-webui.png +3 -0

- docs/images/fastsdcpu-android-termux-pixel7.png +3 -0

- docs/images/fastsdcpu-api.png +0 -0

- docs/images/fastsdcpu-gui.jpg +3 -0

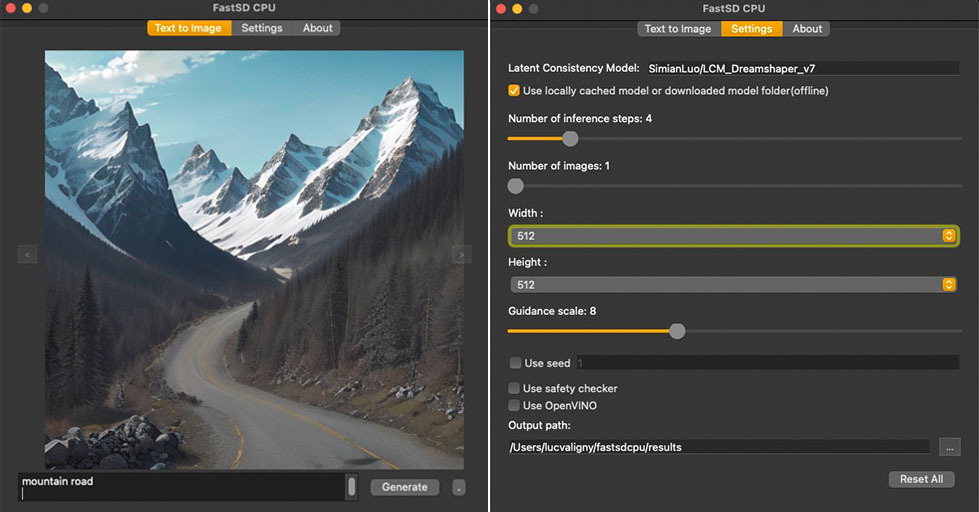

- docs/images/fastsdcpu-mac-gui.jpg +0 -0

- docs/images/fastsdcpu-screenshot.png +3 -0

- docs/images/fastsdcpu-webui.png +3 -0

- docs/images/fastsdcpu_claude.jpg +3 -0

- docs/images/fastsdcpu_flux_on_cpu.png +3 -0

- docs/images/openwebui-fastsd.jpg +3 -0

- docs/images/openwebui-settings.png +0 -0

- install-mac.sh +36 -0

- install.bat +38 -0

- install.sh +44 -0

- lora_models/Readme.txt +3 -0

- models/gguf/clip/readme.txt +1 -0

- models/gguf/diffusion/readme.txt +1 -0

- models/gguf/t5xxl/readme.txt +1 -0

- models/gguf/vae/readme.txt +1 -0

- requirements.txt +21 -0

- src/__init__.py +0 -0

- src/app.py +554 -0

- src/app_settings.py +124 -0

- src/backend/__init__.py +0 -0

- src/backend/annotators/canny_control.py +15 -0

- src/backend/annotators/control_interface.py +12 -0

- src/backend/annotators/depth_control.py +15 -0

- src/backend/annotators/image_control_factory.py +31 -0

- src/backend/annotators/lineart_control.py +11 -0

- src/backend/annotators/mlsd_control.py +10 -0

- src/backend/annotators/normal_control.py +10 -0

- src/backend/annotators/pose_control.py +10 -0

- src/backend/annotators/shuffle_control.py +10 -0

- src/backend/annotators/softedge_control.py +10 -0

- src/backend/api/mcp_server.py +95 -0

.gitattributes

CHANGED

```diff
@@ -33,3 +33,12 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+docs/images/comfyui-workflow.png filter=lfs diff=lfs merge=lfs -text
+docs/images/fastcpu-webui.png filter=lfs diff=lfs merge=lfs -text
+docs/images/fastsdcpu_claude.jpg filter=lfs diff=lfs merge=lfs -text
+docs/images/fastsdcpu_flux_on_cpu.png filter=lfs diff=lfs merge=lfs -text
+docs/images/fastsdcpu-android-termux-pixel7.png filter=lfs diff=lfs merge=lfs -text
+docs/images/fastsdcpu-gui.jpg filter=lfs diff=lfs merge=lfs -text
+docs/images/fastsdcpu-screenshot.png filter=lfs diff=lfs merge=lfs -text
+docs/images/fastsdcpu-webui.png filter=lfs diff=lfs merge=lfs -text
+docs/images/openwebui-fastsd.jpg filter=lfs diff=lfs merge=lfs -text
```
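The new `.gitattributes` entries route nine of the uploaded screenshots through Git LFS by exact path, while slash-free patterns such as `*.zip` apply to file names at any directory depth. The sketch below approximates that matching with `fnmatch` so you can check which paths the rules above cover. It is a simplification (no `**`, negation, or attribute precedence), not Git's own matcher, and `is_lfs_tracked` is a hypothetical helper.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Subset of the LFS rules shown in the hunk above (context lines plus new lines).
LFS_PATTERNS = (
    "*.zip",
    "*.zst",
    "*tfevents*",
    "docs/images/comfyui-workflow.png",
    "docs/images/fastcpu-webui.png",
    "docs/images/fastsdcpu_claude.jpg",
    "docs/images/fastsdcpu_flux_on_cpu.png",
    "docs/images/fastsdcpu-android-termux-pixel7.png",
    "docs/images/fastsdcpu-gui.jpg",
    "docs/images/fastsdcpu-screenshot.png",
    "docs/images/fastsdcpu-webui.png",
    "docs/images/openwebui-fastsd.jpg",
)


def is_lfs_tracked(path: str, patterns=LFS_PATTERNS) -> bool:
    """Approximate gitattributes matching for repo-relative POSIX paths.

    Simplified: no '**', negation, or precedence handling, and fnmatch's '*'
    also matches '/', unlike Git's matcher for patterns containing a slash.
    """
    candidate = PurePosixPath(path)
    for pattern in patterns:
        if "/" in pattern:
            # A pattern containing a slash is matched against the whole path.
            if fnmatchcase(str(candidate), pattern):
                return True
        elif fnmatchcase(candidate.name, pattern):
            # A slash-free pattern matches the file name at any depth.
            return True
    return False
```

Under this approximation `docs/images/fastsdcpu-api.png` is not covered, which matches the image list in this commit, where only those nine screenshots carry Git LFS details.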

LICENSE

ADDED

```text
MIT License

Copyright (c) 2023 Rupesh Sreeraman

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.
```

README.md

CHANGED

```diff
@@ -1,13 +1,13 @@
----
-title:
-emoji:
-colorFrom:
-colorTo:
-sdk: gradio
-sdk_version: 5.
-app_file: app.py
-pinned: false
-license: mit
----
-
-Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
+---
+title: FaceGUI
+emoji: 📚
+colorFrom: yellow
+colorTo: gray
+sdk: gradio
+sdk_version: 5.30.0
+app_file: app.py
+pinned: false
+license: mit
+---
+
+Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
```
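The new README front matter is the Hugging Face Spaces configuration: `sdk: gradio` at `sdk_version: 5.30.0`, with `app_file: app.py` as the entry point. The helper below is a hypothetical, minimal reader for flat `key: value` front matter like this; it is not a YAML parser, so quoted, nested, or multi-line values would need PyYAML or similar.

```python
from typing import Dict


def read_front_matter(markdown: str) -> Dict[str, str]:
    """Collect flat `key: value` pairs between the opening and closing '---' lines."""
    lines = markdown.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    meta: Dict[str, str] = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break  # end of the front matter block
        # Split on the first colon only, so URL values stay intact.
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    return meta
```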

app.py

ADDED

```python
import subprocess

print("Starting FastSD CPU please wait...")
subprocess.run(["python3", "src/app.py", "--webui", "--port", "7860"])
```
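The root `app.py` starts `src/app.py` in Web UI mode on port 7860 and discards the child's exit status. A hedged variant follows, assuming you want the child to run under the same interpreter as the launcher (`sys.executable` instead of a hard-coded `python3`) and want its exit code surfaced; `build_webui_command` and `launch_webui` are illustrative names, not part of the repository.

```python
import subprocess
import sys
from typing import List


def build_webui_command(port: int = 7860, python: str = sys.executable) -> List[str]:
    """Argument vector for starting src/app.py in Web UI mode (hypothetical helper)."""
    return [python, "src/app.py", "--webui", "--port", str(port)]


def launch_webui(port: int = 7860) -> int:
    """Run the Web UI and return its exit status instead of discarding it."""
    print("Starting FastSD CPU please wait...")
    # check=False: return the status rather than raising CalledProcessError.
    completed = subprocess.run(build_webui_command(port), check=False)
    return completed.returncode
```

An entry script would end with `sys.exit(launch_webui())`, so a crash in the Web UI is reported as a non-zero exit rather than a silent success.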

benchmark-openvino.bat

ADDED

```bat
@echo off
setlocal

set "PYTHON_COMMAND=python"

call python --version > nul 2>&1
if %errorlevel% equ 0 (
    echo Python command check :OK
) else (
    echo "Error: Python command not found, please install Python (Recommended : Python 3.10 or Python 3.11) and try again"
    pause
    exit /b 1

)

:check_python_version
for /f "tokens=2" %%I in ('%PYTHON_COMMAND% --version 2^>^&1') do (
    set "python_version=%%I"
)

echo Python version: %python_version%

call "%~dp0env\Scripts\activate.bat" && %PYTHON_COMMAND% src/app.py -b --use_openvino --openvino_lcm_model_id "rupeshs/sd-turbo-openvino"
```
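`benchmark-openvino.bat` runs `src/app.py -b --use_openvino --openvino_lcm_model_id "rupeshs/sd-turbo-openvino"`. The trimmed-down parser below copies a handful of options from the `src/app.py` parser in this commit to show how that command line resolves, and how the mutually exclusive front-end group rejects combinations such as `--gui --webui`. It is an illustration only; the real parser defines many more options, and `build_parser`/`accepts` are hypothetical names.

```python
from argparse import ArgumentParser
from typing import List


def build_parser() -> ArgumentParser:
    """A small subset of the src/app.py options (illustrative, not the full parser)."""
    parser = ArgumentParser(description="FastSD CPU (subset)")
    # Only one front end may be selected per run.
    group = parser.add_mutually_exclusive_group(required=False)
    group.add_argument("-g", "--gui", action="store_true", help="Start desktop GUI")
    group.add_argument("-w", "--webui", action="store_true", help="Start Web UI")
    parser.add_argument("-b", "--benchmark", action="store_true")
    parser.add_argument("--use_openvino", action="store_true")
    parser.add_argument("--lcm_model_id", type=str, default="stabilityai/sd-turbo")
    parser.add_argument(
        "--openvino_lcm_model_id", type=str, default="rupeshs/sd-turbo-openvino"
    )
    return parser


def accepts(argv: List[str]) -> bool:
    """True if argparse accepts argv; on errors it prints usage and raises SystemExit."""
    try:
        build_parser().parse_args(argv)
    except SystemExit:
        return False
    return True
```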

benchmark.bat

ADDED

```bat
@echo off
setlocal

set "PYTHON_COMMAND=python"

call python --version > nul 2>&1
if %errorlevel% equ 0 (
    echo Python command check :OK
) else (
    echo "Error: Python command not found, please install Python (Recommended : Python 3.10 or Python 3.11) and try again"
    pause
    exit /b 1

)

:check_python_version
for /f "tokens=2" %%I in ('%PYTHON_COMMAND% --version 2^>^&1') do (
    set "python_version=%%I"
)

echo Python version: %python_version%

call "%~dp0env\Scripts\activate.bat" && %PYTHON_COMMAND% src/app.py -b
```
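Both benchmark scripts take the second whitespace-separated token of `python --version` (`for /f "tokens=2"`) and only print it; the recommendation of Python 3.10 or 3.11 appears only in their error message. A small Python sketch of the same parsing plus an explicit check against those two versions; `parse_python_version` and `is_recommended` are hypothetical helpers.

```python
import re
from typing import Tuple

RECOMMENDED = {(3, 10), (3, 11)}  # taken from the scripts' error message


def parse_python_version(output: str) -> Tuple[int, int, int]:
    """Parse e.g. 'Python 3.11.6' from its second token, as tokens=2 does."""
    tokens = output.split()
    if len(tokens) < 2:
        raise ValueError(f"unexpected version output: {output!r}")
    # Leading digits only, so pre-release suffixes such as 'rc1' are ignored.
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", tokens[1])
    if match is None:
        raise ValueError(f"unexpected version token: {tokens[1]!r}")
    major, minor, micro = (int(part) for part in match.groups())
    return major, minor, micro


def is_recommended(version: Tuple[int, int, int]) -> bool:
    return version[:2] in RECOMMENDED
```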

configs/lcm-lora-models.txt

ADDED

```text
latent-consistency/lcm-lora-sdv1-5
latent-consistency/lcm-lora-sdxl
latent-consistency/lcm-lora-ssd-1b
rupeshs/hypersd-sd1-5-1-step-lora
```

configs/lcm-models.txt

ADDED

```text
stabilityai/sd-turbo
rupeshs/sdxs-512-0.9-orig-vae
rupeshs/hyper-sd-sdxl-1-step
rupeshs/SDXL-Lightning-2steps
stabilityai/sdxl-turbo
SimianLuo/LCM_Dreamshaper_v7
latent-consistency/lcm-sdxl
latent-consistency/lcm-ssd-1b
```

configs/openvino-lcm-models.txt

ADDED

```text
rupeshs/sd-turbo-openvino
rupeshs/sdxs-512-0.9-openvino
rupeshs/hyper-sd-sdxl-1-step-openvino-int8
rupeshs/SDXL-Lightning-2steps-openvino-int8
rupeshs/sdxl-turbo-openvino-int8
rupeshs/LCM-dreamshaper-v7-openvino
Disty0/LCM_SoteMix
rupeshs/sd15-lcm-square-openvino-int8
OpenVINO/FLUX.1-schnell-int4-ov
rupeshs/sana-sprint-0.6b-openvino-int4
```

configs/stable-diffusion-models.txt

ADDED

```text
Lykon/dreamshaper-8
Fictiverse/Stable_Diffusion_PaperCut_Model
stabilityai/stable-diffusion-xl-base-1.0
runwayml/stable-diffusion-v1-5
segmind/SSD-1B
stablediffusionapi/anything-v5
prompthero/openjourney-v4
```
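The four `configs/*.txt` files list one Hugging Face model ID (`owner/name`) per line. The sketch below shows one way to load such a file; stripping whitespace and skipping blank lines is an assumption here, not behavior this commit shows, and `parse_model_list`/`load_model_list` are hypothetical names.

```python
from pathlib import Path
from typing import List


def parse_model_list(text: str) -> List[str]:
    """Return model IDs, one per non-blank line, in file order."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def load_model_list(path: str) -> List[str]:
    """Read a configs/*.txt file such as configs/openvino-lcm-models.txt."""
    return parse_model_list(Path(path).read_text(encoding="utf-8"))
```

Keeping file order matters if the first entry is treated as the default, as `rupeshs/sd-turbo-openvino` is for the `--openvino_lcm_model_id` option in `src/app.py`.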

controlnet_models/Readme.txt

ADDED

```text
Place your ControlNet models in this folder.
You can download controlnet model (.safetensors) from https://huggingface.co/comfyanonymous/ControlNet-v1-1_fp16_safetensors/tree/main
E.g: https://huggingface.co/comfyanonymous/ControlNet-v1-1_fp16_safetensors/blob/main/control_v11p_sd15_canny_fp16.safetensors
```

docs/images/2steps-inference.jpg

ADDED

docs/images/ARCGPU.png

ADDED

docs/images/comfyui-workflow.png

ADDED (Git LFS)

docs/images/fastcpu-cli.png

ADDED

docs/images/fastcpu-webui.png

ADDED (Git LFS)

docs/images/fastsdcpu-android-termux-pixel7.png

ADDED (Git LFS)

docs/images/fastsdcpu-api.png

ADDED

docs/images/fastsdcpu-gui.jpg

ADDED (Git LFS)

docs/images/fastsdcpu-mac-gui.jpg

ADDED

docs/images/fastsdcpu-screenshot.png

ADDED (Git LFS)

docs/images/fastsdcpu-webui.png

ADDED (Git LFS)

docs/images/fastsdcpu_claude.jpg

ADDED (Git LFS)

docs/images/fastsdcpu_flux_on_cpu.png

ADDED (Git LFS)

docs/images/openwebui-fastsd.jpg

ADDED (Git LFS)

docs/images/openwebui-settings.png

ADDED

install-mac.sh

ADDED

```bash
#!/usr/bin/env bash
echo Starting FastSD CPU env installation...
set -e
PYTHON_COMMAND="python3"

if ! command -v python3 &>/dev/null; then
    if ! command -v python &>/dev/null; then
        echo "Error: Python not found, please install python 3.8 or higher and try again"
        exit 1
    fi
fi

if command -v python &>/dev/null; then
    PYTHON_COMMAND="python"
fi

echo "Found $PYTHON_COMMAND command"

python_version=$($PYTHON_COMMAND --version 2>&1 | awk '{print $2}')
echo "Python version : $python_version"

if ! command -v uv &>/dev/null; then
    echo "Error: uv command not found,please install https://docs.astral.sh/uv/getting-started/installation/#__tabbed_1_1 and try again."
    exit 1
fi

BASEDIR=$(pwd)

uv venv --python 3.11.6 "$BASEDIR/env"
# shellcheck disable=SC1091
source "$BASEDIR/env/bin/activate"
uv pip install torch
uv pip install -r "$BASEDIR/requirements.txt"
chmod +x "start.sh"
chmod +x "start-webui.sh"
read -n1 -r -p "FastSD CPU installation completed,press any key to continue..." key
```

install.bat

ADDED

```bat

@echo off
setlocal
echo Starting FastSD CPU env installation...

set "PYTHON_COMMAND=python"

call python --version > nul 2>&1
if %errorlevel% equ 0 (
    echo Python command check :OK
) else (
    echo "Error: Python command not found,please install Python(Recommended : Python 3.10 or Python 3.11) and try again."
    pause
    exit /b 1

)

call uv --version > nul 2>&1
if %errorlevel% equ 0 (
    echo uv command check :OK
) else (
    echo "Error: uv command not found,please install https://docs.astral.sh/uv/getting-started/installation/#__tabbed_1_2 and try again."
    pause
    exit /b 1

)
:check_python_version
for /f "tokens=2" %%I in ('%PYTHON_COMMAND% --version 2^>^&1') do (
    set "python_version=%%I"
)

echo Python version: %python_version%

uv venv --python 3.11.6 "%~dp0env"
call "%~dp0env\Scripts\activate.bat" && uv pip install torch --index-url https://download.pytorch.org/whl/cpu
call "%~dp0env\Scripts\activate.bat" && uv pip install -r "%~dp0requirements.txt"
echo FastSD CPU env installation completed.
pause
```

install.sh

ADDED

```bash
#!/usr/bin/env bash
echo Starting FastSD CPU env installation...
set -e
PYTHON_COMMAND="python3"

if ! command -v python3 &>/dev/null; then
    if ! command -v python &>/dev/null; then
        echo "Error: Python not found, please install python 3.8 or higher and try again"
        exit 1
    fi
fi

if command -v python &>/dev/null; then
    PYTHON_COMMAND="python"
fi

echo "Found $PYTHON_COMMAND command"

python_version=$($PYTHON_COMMAND --version 2>&1 | awk '{print $2}')
echo "Python version : $python_version"

if ! command -v uv &>/dev/null; then
    echo "Error: uv command not found,please install https://docs.astral.sh/uv/getting-started/installation/#__tabbed_1_1 and try again."
    exit 1
fi

BASEDIR=$(pwd)

uv venv --python 3.11.6 "$BASEDIR/env"
# shellcheck disable=SC1091
source "$BASEDIR/env/bin/activate"
uv pip install torch --index-url https://download.pytorch.org/whl/cpu
if [[ "$1" == "--disable-gui" ]]; then
    #! For termux , we don't need Qt based GUI
    packages="$(grep -v "^ *#\|^PyQt5" requirements.txt | grep .)"
    # shellcheck disable=SC2086
    uv pip install $packages
else
    uv pip install -r "$BASEDIR/requirements.txt"
fi

chmod +x "start.sh"
chmod +x "start-webui.sh"
read -n1 -r -p "FastSD CPU installation completed,press any key to continue..." key
```
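With `--disable-gui` (intended for Termux), `install.sh` installs every line of `requirements.txt` except comment lines and lines starting with `PyQt5`, and the trailing `grep .` drops empty lines. A Python equivalent of that filter, handy for previewing what would be installed; `packages_without_gui` is a hypothetical name. Note that `^PyQt5` would also drop any other requirement whose name starts with `PyQt5`.

```python
import re
from typing import List

# Same expression as: grep -v "^ *#\|^PyQt5" requirements.txt | grep .
_EXCLUDE = re.compile(r"^ *#|^PyQt5")


def packages_without_gui(requirements_text: str) -> List[str]:
    """Requirement lines kept by install.sh when run with --disable-gui."""
    return [
        line
        for line in requirements_text.splitlines()
        if line and not _EXCLUDE.match(line)  # `grep .` keeps non-empty lines only
    ]
```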

lora_models/Readme.txt

ADDED

```text
Place your lora models in this folder.
You can download lora model (.safetensors/Safetensor) from Civitai (https://civitai.com/) or Hugging Face(https://huggingface.co/)
E.g: https://civitai.com/models/207984/cutecartoonredmond-15v-cute-cartoon-lora-for-liberteredmond-sd-15?modelVersionId=234192
```
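`controlnet_models/` and `lora_models/` both expect `.safetensors` files dropped into the folder, and the LoRA readme spells the extension in more than one case. A hedged sketch of discovering those files with a case-insensitive suffix check; `find_safetensors` is a hypothetical helper, not the application's loader.

```python
from pathlib import Path
from typing import List


def find_safetensors(folder: str) -> List[str]:
    """Sorted names of *.safetensors files directly inside folder (case-insensitive)."""
    root = Path(folder)
    if not root.is_dir():
        return []  # a missing folder simply yields no models
    return sorted(
        entry.name
        for entry in root.iterdir()
        if entry.is_file() and entry.suffix.lower() == ".safetensors"
    )
```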

models/gguf/clip/readme.txt

ADDED

```text
Place CLIP model files here"
```

models/gguf/diffusion/readme.txt

ADDED

```text
Place your diffusion gguf model files here
```

models/gguf/t5xxl/readme.txt

ADDED

```text
Place T5-XXL model files here
```

models/gguf/vae/readme.txt

ADDED

```text
Place VAE model files here
```

requirements.txt

ADDED

```text
accelerate==1.6.0
diffusers==0.33.0
transformers==4.48.0
PyQt5
Pillow==9.4.0
openvino==2025.1.0
optimum-intel==1.23.0
onnx==1.16.0
numpy==1.26.4
onnxruntime==1.17.3
pydantic
typing-extensions==4.8.0
pyyaml==6.0.1
gradio==5.6.0
peft==0.6.1
opencv-python==4.8.1.78
omegaconf==2.3.0
controlnet-aux==0.0.7
mediapipe>=0.10.9
tomesd==0.1.3
fastapi-mcp==0.3.0
```
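Most entries in `requirements.txt` are exact `==` pins, while `PyQt5` and `pydantic` are unpinned and `mediapipe` has only a lower bound. The sketch below uses the standard-library `importlib.metadata` to compare the exact pins with what is installed in the current environment. It understands only the simple forms used in this file, not the full requirement-specifier grammar (the `packaging` library handles that), and `parse_pins`/`pin_mismatches` are hypothetical names.

```python
import re
from importlib import metadata
from typing import Dict, Optional, Tuple


def parse_pins(requirements_text: str) -> Dict[str, Optional[str]]:
    """Map each requirement name to its '==' version, or None if not exactly pinned."""
    pins: Dict[str, Optional[str]] = {}
    for raw in requirements_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if "==" in line:
            name, version = line.split("==", 1)
            pins[name.strip()] = version.strip()
        else:
            # Unpinned (e.g. PyQt5) or ranged (e.g. mediapipe>=0.10.9) requirement.
            pins[re.split(r"[<>=!~;\[ ]", line, maxsplit=1)[0]] = None
    return pins


def pin_mismatches(pins: Dict[str, Optional[str]]) -> Dict[str, Tuple[str, Optional[str]]]:
    """Return {name: (pinned, installed)} for exact pins that are not satisfied."""
    mismatches: Dict[str, Tuple[str, Optional[str]]] = {}
    for name, wanted in pins.items():
        if wanted is None:
            continue  # nothing exact to compare against
        try:
            installed: Optional[str] = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != wanted:
            mismatches[name] = (wanted, installed)
    return mismatches
```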

src/__init__.py

ADDED

Empty file.

src/app.py

ADDED

|

@@ -0,0 +1,554 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import json

|

| 2 |

+

from argparse import ArgumentParser

|

| 3 |

+

|

| 4 |

+

from PIL import Image

|

| 5 |

+

|

| 6 |

+

import constants

|

| 7 |

+

from backend.controlnet import controlnet_settings_from_dict

|

| 8 |

+

from backend.device import get_device_name

|

| 9 |

+

from backend.models.gen_images import ImageFormat

|

| 10 |

+

from backend.models.lcmdiffusion_setting import DiffusionTask

|

| 11 |

+

from backend.upscale.tiled_upscale import generate_upscaled_image

|

| 12 |

+

from constants import APP_VERSION, DEVICE

|

| 13 |

+

from frontend.webui.image_variations_ui import generate_image_variations

|

| 14 |

+

from models.interface_types import InterfaceType

|

| 15 |

+

from paths import FastStableDiffusionPaths, ensure_path

|

| 16 |

+

from state import get_context, get_settings

|

| 17 |

+

from utils import show_system_info

|

| 18 |

+

|

| 19 |

+

parser = ArgumentParser(description=f"FAST SD CPU {constants.APP_VERSION}")

|

| 20 |

+

parser.add_argument(

|

| 21 |

+

"-s",

|

| 22 |

+

"--share",

|

| 23 |

+

action="store_true",

|

| 24 |

+

help="Create sharable link(Web UI)",

|

| 25 |

+

required=False,

|

| 26 |

+

)

|

| 27 |

+

group = parser.add_mutually_exclusive_group(required=False)

|

| 28 |

+

group.add_argument(

|

| 29 |

+

"-g",

|

| 30 |

+

"--gui",

|

| 31 |

+

action="store_true",

|

| 32 |

+

help="Start desktop GUI",

|

| 33 |

+

)

|

| 34 |

+

group.add_argument(

|

| 35 |

+

"-w",

|

| 36 |

+

"--webui",

|

| 37 |

+

action="store_true",

|

| 38 |

+

help="Start Web UI",

|

| 39 |

+

)

|

| 40 |

+

group.add_argument(

|

| 41 |

+

"-a",

|

| 42 |

+

"--api",

|

| 43 |

+

action="store_true",

|

| 44 |

+

help="Start Web API server",

|

| 45 |

+

)

|

| 46 |

+

group.add_argument(

|

| 47 |

+

"-m",

|

| 48 |

+

"--mcp",

|

| 49 |

+

action="store_true",

|

| 50 |

+

help="Start MCP(Model Context Protocol) server",

|

| 51 |

+

)

|

| 52 |

+

group.add_argument(

|

| 53 |

+

"-r",

|

| 54 |

+

"--realtime",

|

| 55 |

+

action="store_true",

|

| 56 |

+

help="Start realtime inference UI(experimental)",

|

| 57 |

+

)

|

| 58 |

+

group.add_argument(

|

| 59 |

+

"-v",

|

| 60 |

+

"--version",

|

| 61 |

+

action="store_true",

|

| 62 |

+

help="Version",

|

| 63 |

+

)

|

| 64 |

+

|

| 65 |

+

parser.add_argument(

|

| 66 |

+

"-b",

|

| 67 |

+

"--benchmark",

|

| 68 |

+

action="store_true",

|

| 69 |

+

help="Run inference benchmark on the selected device",

|

| 70 |

+

)

|

| 71 |

+

parser.add_argument(

|

| 72 |

+

"--lcm_model_id",

|

| 73 |

+

type=str,

|

| 74 |

+

help="Model ID or path,Default stabilityai/sd-turbo",

|

| 75 |

+

default="stabilityai/sd-turbo",

|

| 76 |

+

)

|

| 77 |

+

parser.add_argument(

|

| 78 |

+

"--openvino_lcm_model_id",

|

| 79 |

+

type=str,

|

| 80 |

+

help="OpenVINO Model ID or path,Default rupeshs/sd-turbo-openvino",

|

| 81 |

+

default="rupeshs/sd-turbo-openvino",

|

| 82 |

+

)

|

| 83 |

+

parser.add_argument(

|

| 84 |

+

"--prompt",

|

| 85 |

+

type=str,

|

| 86 |

+

help="Describe the image you want to generate",

|

| 87 |

+

default="",

|

| 88 |

+

)

|

| 89 |

+

parser.add_argument(

|

| 90 |

+

"--negative_prompt",

|

| 91 |

+

type=str,

|

| 92 |

+

help="Describe what you want to exclude from the generation",

|

| 93 |

+

default="",

|

| 94 |

+

)

|

| 95 |

+

parser.add_argument(

|

| 96 |

+

"--image_height",

|

| 97 |

+

type=int,

|

| 98 |

+

help="Height of the image",

|

| 99 |

+

default=512,

|

| 100 |

+

)

|

| 101 |

+

parser.add_argument(

|

| 102 |

+

"--image_width",

|

| 103 |

+

type=int,

|

| 104 |

+

help="Width of the image",

|

| 105 |

+

default=512,

|

| 106 |

+

)

|

| 107 |

+

parser.add_argument(

|

| 108 |

+

"--inference_steps",

|

| 109 |

+

type=int,

|

| 110 |

+

help="Number of steps,default : 1",

|

| 111 |

+

default=1,

|

| 112 |

+

)

|

| 113 |

+

parser.add_argument(

|

| 114 |

+

"--guidance_scale",

|

| 115 |

+

type=float,

|

| 116 |

+

help="Guidance scale,default : 1.0",

|

| 117 |

+

default=1.0,

|

| 118 |

+

)

|

| 119 |

+

|

| 120 |

+

parser.add_argument(

|

| 121 |

+

"--number_of_images",

|

| 122 |

+

type=int,

|

| 123 |

+

help="Number of images to generate ,default : 1",

|

| 124 |

+

default=1,

|

| 125 |

+

)

|

| 126 |

+

parser.add_argument(

|

| 127 |

+

"--seed",

|

| 128 |

+

type=int,

|

| 129 |

+

help="Seed,default : -1 (disabled) ",

|

| 130 |

+

default=-1,

|

| 131 |

+

)

|

| 132 |

+

parser.add_argument(

|

| 133 |

+

"--use_openvino",

|

| 134 |

+

action="store_true",

|

| 135 |

+

help="Use OpenVINO model",

|

| 136 |

+

)

|

| 137 |

+

|

| 138 |

+

parser.add_argument(

|

| 139 |

+

"--use_offline_model",

|

| 140 |

+

action="store_true",

|

| 141 |

+

help="Use offline model",

|

| 142 |

+

)

|

| 143 |

+

parser.add_argument(

|

| 144 |

+

"--clip_skip",

|

| 145 |

+

type=int,

|

| 146 |

+

help="CLIP Skip (1-12), default : 1 (disabled) ",

|

| 147 |

+

default=1,

|

| 148 |

+

)

|

| 149 |

+

parser.add_argument(

|

| 150 |

+

"--token_merging",

|

| 151 |

+

type=float,

|

| 152 |

+

    help="Token merging scale, 0.0 - 1.0, default: 0.0",
    default=0.0,
)

parser.add_argument(
    "--use_safety_checker",
    action="store_true",
    help="Use safety checker",
)
parser.add_argument(
    "--use_lcm_lora",
    action="store_true",
    help="Use LCM-LoRA",
)
parser.add_argument(
    "--base_model_id",
    type=str,
    help="LCM-LoRA base model ID, default: Lykon/dreamshaper-8",
    default="Lykon/dreamshaper-8",
)
parser.add_argument(
    "--lcm_lora_id",
    type=str,
    help="LCM-LoRA model ID, default: latent-consistency/lcm-lora-sdv1-5",
    default="latent-consistency/lcm-lora-sdv1-5",
)
parser.add_argument(
    "-i",
    "--interactive",
    action="store_true",
    help="Interactive CLI mode",
)
parser.add_argument(
    "-t",
    "--use_tiny_auto_encoder",
    action="store_true",
    help="Use Tiny AutoEncoder for TAESD/TAESDXL/TAEF1",
)
parser.add_argument(
    "-f",
    "--file",
    type=str,
    help="Input image for img2img mode",
    default="",
)
parser.add_argument(
    "--img2img",
    action="store_true",
    help="img2img mode; requires input file via -f argument",
)
parser.add_argument(
    "--batch_count",
    type=int,
    help="Number of sequential generations",
    default=1,
)
parser.add_argument(
    "--strength",
    type=float,
    help="Denoising strength for img2img and image variations",
    default=0.3,
)
parser.add_argument(
    "--sdupscale",
    action="store_true",
    help="Tiled SD upscale (2x); works only for 512x512 resolution",
)
parser.add_argument(
    "--upscale",
    action="store_true",
    help="EDSR SD upscale",
)
parser.add_argument(
    "--custom_settings",
    type=str,
    help="JSON file containing custom generation settings",
    default=None,
)
parser.add_argument(
    "--usejpeg",
    action="store_true",
    help="Save images in JPEG format",
)
parser.add_argument(
    "--noimagesave",
    action="store_true",
    help="Disable image saving",
)
parser.add_argument(
    "--imagequality", type=int, help="Output image quality [0 to 100]", default=90
)
parser.add_argument(
    "--lora",
    type=str,
    # Raw string: "\l" and "\C" are not valid escape sequences in a normal string
    help=r"LoRA model full path, e.g. D:\lora_models\CuteCartoon15V-LiberteRedmodModel-Cartoon-CuteCartoonAF.safetensors",
    default=None,
)
parser.add_argument(
    "--lora_weight",
    type=float,
    help="LoRA adapter weight [0 to 1.0]",
    default=0.5,
)
parser.add_argument(
    "--port",
    type=int,
    help="Web server port",
    default=8000,
)
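To illustrate how these declarations behave, here is a minimal stand-alone sketch (not part of the uploaded app.py) that re-declares a few of the options on a fresh `ArgumentParser`: `store_true` flags default to `False`, typed options are converted (`--strength 0.5` becomes the float `0.5`), and omitted options keep their `default` values. The `demo_parser` name is hypothetical.

```python
from argparse import ArgumentParser

# Hypothetical stand-alone parser that re-declares a handful of the options
# above with the same types and defaults; it is not the application's parser.
demo_parser = ArgumentParser(description="Subset of the FastSD CPU CLI options")
demo_parser.add_argument("--use_lcm_lora", action="store_true")
demo_parser.add_argument("--base_model_id", type=str, default="Lykon/dreamshaper-8")
demo_parser.add_argument("-f", "--file", type=str, default="")
demo_parser.add_argument("--img2img", action="store_true")
demo_parser.add_argument("--batch_count", type=int, default=1)
demo_parser.add_argument("--strength", type=float, default=0.3)
demo_parser.add_argument("--custom_settings", type=str, default=None)

# parse_args() also accepts an explicit argument list, which is handy for testing.
demo_args = demo_parser.parse_args(["--use_lcm_lora", "--strength", "0.5", "-f", "cat.png"])
print(demo_args.use_lcm_lora, demo_args.strength, demo_args.file)  # True 0.5 cat.png
print(demo_args.img2img, demo_args.batch_count, demo_args.custom_settings)  # False 1 None
```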

args = parser.parse_args()

if args.version:
    print(APP_VERSION)
    exit()

# parser.print_help()
print("FastSD CPU - ", APP_VERSION)
show_system_info()
print(f"Using device : {constants.DEVICE}")

if args.webui:
    app_settings = get_settings()
else:
    app_settings = get_settings()

print(f"Output path : {app_settings.settings.generated_images.path}")
ensure_path(app_settings.settings.generated_images.path)

print(f"Found {len(app_settings.lcm_models)} LCM models in configs/lcm-models.txt")
print(
    f"Found {len(app_settings.stable_diffsuion_models)} stable diffusion models in configs/stable-diffusion-models.txt"
)
print(
    f"Found {len(app_settings.lcm_lora_models)} LCM-LoRA models in configs/lcm-lora-models.txt"
)
print(
    f"Found {len(app_settings.openvino_lcm_models)} OpenVINO LCM models in configs/openvino-lcm-models.txt"
)

if args.noimagesave:
    app_settings.settings.generated_images.save_image = False
else:
    app_settings.settings.generated_images.save_image = True

app_settings.settings.generated_images.save_image_quality = args.imagequality

if not args.realtime:
    # Skip these imports in realtime mode to minimize its dependencies
    from backend.upscale.upscaler import upscale_image
    from frontend.cli_interactive import interactive_mode
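The `if not args.realtime:` block defers two imports so that realtime mode does not load their dependencies, and each UI branch imports its own frontend only when it is selected. A generic sketch of the same deferred-import idea using `importlib.import_module`; the mode names, the `load_frontend` helper and the mapping to standard-library modules are hypothetical stand-ins for FastSD CPU's frontend packages.

```python
import importlib
from types import ModuleType

# Hypothetical mode -> module mapping; stdlib modules stand in for the
# frontend/backend packages so the sketch runs anywhere.
MODE_MODULES = {
    "cli": "json",
    "api": "http.server",
}


def load_frontend(mode: str) -> ModuleType:
    """Import the module for `mode` only when that mode is actually selected."""
    try:
        module_name = MODE_MODULES[mode]
    except KeyError:
        raise ValueError(f"unknown mode: {mode}") from None
    # import_module caches the result in sys.modules, so repeat calls are cheap.
    return importlib.import_module(module_name)


print(load_frontend("cli").__name__)  # json
```

Modules that are never selected are never imported, which is the point of keeping the imports inside the branches.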

if args.gui:
    from frontend.gui.ui import start_gui

    print("Starting desktop GUI mode (Qt)")
    start_gui(
        [],
        app_settings,
    )
elif args.webui:
    from frontend.webui.ui import start_webui

    print("Starting web UI mode")
    start_webui(
        args.share,
    )
elif args.realtime:
    from frontend.webui.realtime_ui import start_realtime_text_to_image

    print("Starting realtime text to image (EXPERIMENTAL)")
    start_realtime_text_to_image(args.share)
elif args.api:
    from backend.api.web import start_web_server

    start_web_server(args.port)
elif args.mcp:
    from backend.api.mcp_server import start_mcp_server

    start_mcp_server(args.port)
else:
    context = get_context(InterfaceType.CLI)
    config = app_settings.settings

    if args.use_openvino:
        config.lcm_diffusion_setting.openvino_lcm_model_id = args.openvino_lcm_model_id
    else:
        config.lcm_diffusion_setting.lcm_model_id = args.lcm_model_id

    config.lcm_diffusion_setting.prompt = args.prompt
    config.lcm_diffusion_setting.negative_prompt = args.negative_prompt
    config.lcm_diffusion_setting.image_height = args.image_height
    config.lcm_diffusion_setting.image_width = args.image_width
    config.lcm_diffusion_setting.guidance_scale = args.guidance_scale
    config.lcm_diffusion_setting.number_of_images = args.number_of_images
    config.lcm_diffusion_setting.inference_steps = args.inference_steps
    config.lcm_diffusion_setting.strength = args.strength
    config.lcm_diffusion_setting.seed = args.seed
    config.lcm_diffusion_setting.use_openvino = args.use_openvino
    config.lcm_diffusion_setting.use_tiny_auto_encoder = args.use_tiny_auto_encoder
    config.lcm_diffusion_setting.use_lcm_lora = args.use_lcm_lora
    config.lcm_diffusion_setting.lcm_lora.base_model_id = args.base_model_id
    config.lcm_diffusion_setting.lcm_lora.lcm_lora_id = args.lcm_lora_id
    config.lcm_diffusion_setting.diffusion_task = DiffusionTask.text_to_image.value
    config.lcm_diffusion_setting.lora.enabled = False
    config.lcm_diffusion_setting.lora.path = args.lora
    config.lcm_diffusion_setting.lora.weight = args.lora_weight
    config.lcm_diffusion_setting.lora.fuse = True
    if config.lcm_diffusion_setting.lora.path:
        config.lcm_diffusion_setting.lora.enabled = True
    if args.usejpeg:
        config.generated_images.format = ImageFormat.JPEG.value.upper()
    if args.seed > -1:
        config.lcm_diffusion_setting.use_seed = True
    else:
        config.lcm_diffusion_setting.use_seed = False
    config.lcm_diffusion_setting.use_offline_model = args.use_offline_model
    config.lcm_diffusion_setting.clip_skip = args.clip_skip
    config.lcm_diffusion_setting.token_merging = args.token_merging
    config.lcm_diffusion_setting.use_safety_checker = args.use_safety_checker
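Two of these settings are derived rather than copied from a flag: LoRA is enabled only when a LoRA path was given, and a fixed seed is used only when `--seed` is greater than -1 (any lower value leaves `use_seed` off). A small stand-alone sketch of those two rules; the `DerivedFlags` dataclass and the `derive_flags` helper are hypothetical, not part of FastSD CPU.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DerivedFlags:
    lora_enabled: bool
    use_seed: bool


def derive_flags(lora_path: Optional[str], seed: int) -> DerivedFlags:
    """Mirror the two rules above: LoRA is on only for a non-empty path,
    and a fixed seed is used only when seed > -1."""
    return DerivedFlags(
        lora_enabled=bool(lora_path),  # None and "" both mean "no LoRA"
        use_seed=seed > -1,
    )


print(derive_flags(None, -1))  # DerivedFlags(lora_enabled=False, use_seed=False)
print(derive_flags("cartoon.safetensors", 123))  # DerivedFlags(lora_enabled=True, use_seed=True)
```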

    # Read custom settings from JSON file
    custom_settings = {}
    if args.custom_settings:
        with open(args.custom_settings, encoding="utf-8") as f:
            custom_settings = json.load(f)

    # Basic ControlNet settings; if ControlNet is enabled, an image is
    # required even in txt2img mode
    config.lcm_diffusion_setting.controlnet = None
    controlnet_settings_from_dict(
        config.lcm_diffusion_setting,
        custom_settings,
    )
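The custom-settings step reads an optional JSON file into a dict and falls back to an empty dict when no file is given. A minimal sketch of that pattern; `load_custom_settings` is a hypothetical helper, and the `source_file`/`output_format` keys in the usage example are the ones the tiled-upscale branch reads.

```python
import json
import os
import tempfile
from typing import Any, Dict, Optional


def load_custom_settings(path: Optional[str]) -> Dict[str, Any]:
    """Return the parsed JSON object at `path`, or {} when no path is given."""
    if not path:
        return {}
    with open(path, encoding="utf-8") as f:
        return json.load(f)


# Usage: write a small settings file to a temporary location and read it back.
with tempfile.NamedTemporaryFile("w", suffix=".json", delete=False, encoding="utf-8") as tmp:
    json.dump({"source_file": "input.png", "output_format": "png"}, tmp)
    settings_path = tmp.name

settings = load_custom_settings(settings_path)
os.remove(settings_path)
print(settings["output_format"].upper())  # PNG
print(load_custom_settings(None))  # {}
```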

    # Interactive mode
    if args.interactive:
        # wrapper(interactive_mode, config, context)
        config.lcm_diffusion_setting.lora.fuse = False
        interactive_mode(config, context)

    # Start of non-interactive CLI image generation
    if args.img2img and args.file != "":
        config.lcm_diffusion_setting.init_image = Image.open(args.file)
        config.lcm_diffusion_setting.diffusion_task = DiffusionTask.image_to_image.value
    elif args.img2img and args.file == "":
        print("Error: You need to specify a file in img2img mode")
        exit(1)
    elif args.upscale and args.file == "" and args.custom_settings is None:
        print("Error: You need to specify a file in SD upscale mode")
        exit(1)
    elif (
        args.prompt == ""
        and args.file == ""
        and args.custom_settings is None
        and not args.benchmark
    ):
        print("Error: You need to provide a prompt")
        exit(1)
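These checks reject flag combinations that cannot produce an image before any model is loaded. The same rules can be written as a pure function that returns an error message or `None`, which is easier to unit-test than code that calls `exit()`. This is a hypothetical refactoring sketch: `check_cli_args` and `make_args` are illustrative names, and `SimpleNamespace` stands in for the parsed `args`.

```python
from types import SimpleNamespace
from typing import Optional


def check_cli_args(args) -> Optional[str]:
    """Return an error message for an invalid flag combination, else None.

    Follows the order of the checks above: img2img needs a file, upscale needs
    a file or a custom settings JSON, and plain generation needs a prompt
    unless a file, custom settings or --benchmark is given.
    """
    if args.img2img:
        # With an input file, img2img is valid and no further checks run.
        return None if args.file != "" else "You need to specify a file in img2img mode"
    if args.upscale and args.file == "" and args.custom_settings is None:
        return "You need to specify a file in SD upscale mode"
    if (
        args.prompt == ""
        and args.file == ""
        and args.custom_settings is None
        and not args.benchmark
    ):
        return "You need to provide a prompt"
    return None


def make_args(**overrides) -> SimpleNamespace:
    """Build a fake args object with the same defaults as the CLI."""
    defaults = dict(img2img=False, upscale=False, file="", custom_settings=None, prompt="", benchmark=False)
    defaults.update(overrides)
    return SimpleNamespace(**defaults)


print(check_cli_args(make_args(prompt="a cat")))  # None
print(check_cli_args(make_args(img2img=True)))  # You need to specify a file in img2img mode
```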

    if args.upscale:
        # image = Image.open(args.file)
        output_path = FastStableDiffusionPaths.get_upscale_filepath(
            args.file,
            2,
            config.generated_images.format,
        )
        result = upscale_image(
            context,
            args.file,
            output_path,
            2,
        )
    # Perform Tiled SD upscale (EXPERIMENTAL)
    elif args.sdupscale:
        if args.use_openvino:
            config.lcm_diffusion_setting.strength = 0.3
        upscale_settings = None
        if custom_settings != {}:
            upscale_settings = custom_settings
        filepath = args.file
        output_format = config.generated_images.format
        if upscale_settings:
            filepath = upscale_settings["source_file"]
            output_format = upscale_settings["output_format"].upper()
        output_path = FastStableDiffusionPaths.get_upscale_filepath(
            filepath,
            2,
            output_format,
        )
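In the tiled-upscale branch, a non-empty custom settings JSON overrides both the source image and the output format (upper-cased). The `generate_upscaled_image` call then uses a tile overlap of 32 with OpenVINO and 16 otherwise. A stand-alone sketch of that resolution step; `resolve_upscale_inputs` is a hypothetical helper, not a FastSD CPU function.

```python
from typing import Any, Dict, Optional, Tuple


def resolve_upscale_inputs(
    cli_file: str,
    default_format: str,
    custom_settings: Dict[str, Any],
    use_openvino: bool,
) -> Tuple[str, str, int, Optional[Dict[str, Any]]]:
    """Return (source file, output format, tile overlap, upscale settings)."""
    # An empty dict means "no custom settings", as in the branch above.
    upscale_settings = custom_settings if custom_settings != {} else None
    filepath = cli_file
    output_format = default_format
    if upscale_settings:
        filepath = upscale_settings["source_file"]
        output_format = upscale_settings["output_format"].upper()
    tile_overlap = 32 if use_openvino else 16
    return filepath, output_format, tile_overlap, upscale_settings


print(resolve_upscale_inputs("photo.png", "PNG", {}, use_openvino=False))
# ('photo.png', 'PNG', 16, None)
```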

        generate_upscaled_image(
            config,
            filepath,
            config.lcm_diffusion_setting.strength,
            upscale_settings=upscale_settings,
            context=context,
            tile_overlap=32 if config.lcm_diffusion_setting.use_openvino else 16,
            output_path=output_path,
            image_format=output_format,
        )
        exit()
    # If img2img argument is set and prompt is empty, use image variations mode
    elif args.img2img and args.prompt == "":
        for _ in range(args.batch_count):
            generate_image_variations(
                config.lcm_diffusion_setting.init_image, args.strength
            )
    else:
        if args.benchmark:
            print("Initializing benchmark...")
            bench_lcm_setting = config.lcm_diffusion_setting
            bench_lcm_setting.prompt = "a cat"
            bench_lcm_setting.use_tiny_auto_encoder = False
            # Untimed warm-up run; its latency is not included in the average
            context.generate_text_to_image(
                settings=config,
                device=DEVICE,
            )

            latencies = []

            print("Starting benchmark, please wait...")
            for _ in range(3):
                context.generate_text_to_image(
                    settings=config,
                    device=DEVICE,
                )
                latencies.append(context.latency)

            avg_latency = sum(latencies) / len(latencies)

            # Repeat the warm-up and the three timed runs with the Tiny AutoEncoder
            bench_lcm_setting.use_tiny_auto_encoder = True

            context.generate_text_to_image(
                settings=config,
                device=DEVICE,
            )
            latencies = []
            for _ in range(3):
                context.generate_text_to_image(
                    settings=config,
                    device=DEVICE,
                )
                latencies.append(context.latency)

            avg_latency_taesd = sum(latencies) / len(latencies)
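The benchmark runs one untimed warm-up generation, then averages the latency of three timed runs, once with the default decoder and once with the Tiny AutoEncoder. A generic sketch of that warm-up-then-average pattern using `time.perf_counter`. Here `benchmark` is a hypothetical helper, and `fake_generation` stands in for `context.generate_text_to_image`. The sketch measures wall-clock time itself and does not reproduce how `context.latency` is computed.

```python
import time
from typing import Callable, List


def benchmark(run: Callable[[], None], passes: int = 3, warmup: int = 1) -> float:
    """Return the mean wall-clock time in seconds of `passes` timed calls,
    after `warmup` untimed calls (e.g. to absorb one-off loading costs)."""
    for _ in range(warmup):
        run()
    latencies: List[float] = []
    for _ in range(passes):
        start = time.perf_counter()
        run()
        latencies.append(time.perf_counter() - start)
    return sum(latencies) / len(latencies)


calls = []


def fake_generation() -> None:
    # Stand-in workload for an image generation call.
    calls.append(1)
    time.sleep(0.01)


avg = benchmark(fake_generation)
print(len(calls), f"{avg:.3f}s")  # 4 calls in total: 1 warm-up + 3 timed
```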

            benchmark_name = ""

            if config.lcm_diffusion_setting.use_openvino:
                benchmark_name = "OpenVINO"
            else:
                benchmark_name = "PyTorch"

            bench_model_id = ""
            if bench_lcm_setting.use_openvino:
                bench_model_id = bench_lcm_setting.openvino_lcm_model_id
            elif bench_lcm_setting.use_lcm_lora:
                bench_model_id = bench_lcm_setting.lcm_lora.base_model_id
            else:
                bench_model_id = bench_lcm_setting.lcm_model_id
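The model reported in the benchmark table follows a fixed precedence. It uses the OpenVINO model when OpenVINO is on, otherwise the LCM-LoRA base model when LCM-LoRA is on, and otherwise the LCM model. A sketch of that rule follows. `benchmark_model_id` is hypothetical, and `SimpleNamespace` stands in for the real settings object. Only the attribute names come from the code above; the placeholder model IDs are made up.

```python
from types import SimpleNamespace


def benchmark_model_id(setting) -> str:
    """Pick the model ID shown in the benchmark report (OpenVINO > LCM-LoRA > LCM)."""
    if setting.use_openvino:
        return setting.openvino_lcm_model_id
    if setting.use_lcm_lora:
        return setting.lcm_lora.base_model_id
    return setting.lcm_model_id


setting = SimpleNamespace(
    use_openvino=False,
    use_lcm_lora=True,
    openvino_lcm_model_id="openvino-model",  # placeholder ID
    lcm_model_id="lcm-model",  # placeholder ID
    lcm_lora=SimpleNamespace(base_model_id="Lykon/dreamshaper-8"),
)
print(benchmark_model_id(setting))  # Lykon/dreamshaper-8
```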

            benchmark_result = [
                ["Device", f"{DEVICE.upper()},{get_device_name()}"],
                ["Stable Diffusion Model", bench_model_id],
                [
                    "Image Size ",
                    f"{bench_lcm_setting.image_width}x{bench_lcm_setting.image_height}",
                ],
                [
                    "Inference Steps",
                    f"{bench_lcm_setting.inference_steps}",
                ],