Commit 85db903 (parent: 670680d)

First model version

This view is limited to 50 files because it contains too many changes.

- .gitattributes +1 -0

- .gitignore +5 -0

- app.py +47 -0

- images/image_1.jpg +0 -0

- images/image_2.jpg +0 -0

- images/image_3.jpg +0 -0

- metadata/dataset_utils/dataset_downloader.py +21 -0

- metadata/predictor_yolo_detector/__pycache__/detector_test.cpython-37.pyc +0 -0

- metadata/predictor_yolo_detector/__pycache__/detector_test.cpython-38.pyc +0 -0

- metadata/predictor_yolo_detector/best.pt +3 -0

- metadata/predictor_yolo_detector/detector_test.py +176 -0

- metadata/predictor_yolo_detector/inference/images/inputImage.jpg +0 -0

- metadata/predictor_yolo_detector/models/__init__.py +0 -0

- metadata/predictor_yolo_detector/models/__pycache__/__init__.cpython-36.pyc +0 -0

- metadata/predictor_yolo_detector/models/__pycache__/__init__.cpython-37.pyc +0 -0

- metadata/predictor_yolo_detector/models/__pycache__/__init__.cpython-38.pyc +0 -0

- metadata/predictor_yolo_detector/models/__pycache__/common.cpython-36.pyc +0 -0

- metadata/predictor_yolo_detector/models/__pycache__/common.cpython-37.pyc +0 -0

- metadata/predictor_yolo_detector/models/__pycache__/common.cpython-38.pyc +0 -0

- metadata/predictor_yolo_detector/models/__pycache__/experimental.cpython-36.pyc +0 -0

- metadata/predictor_yolo_detector/models/__pycache__/experimental.cpython-37.pyc +0 -0

- metadata/predictor_yolo_detector/models/__pycache__/experimental.cpython-38.pyc +0 -0

- metadata/predictor_yolo_detector/models/__pycache__/yolo.cpython-36.pyc +0 -0

- metadata/predictor_yolo_detector/models/__pycache__/yolo.cpython-37.pyc +0 -0

- metadata/predictor_yolo_detector/models/__pycache__/yolo.cpython-38.pyc +0 -0

- metadata/predictor_yolo_detector/models/common.py +189 -0

- metadata/predictor_yolo_detector/models/custom_yolov5s.yaml +48 -0

- metadata/predictor_yolo_detector/models/experimental.py +152 -0

- metadata/predictor_yolo_detector/models/export.py +94 -0

- metadata/predictor_yolo_detector/models/hub/yolov3-spp.yaml +51 -0

- metadata/predictor_yolo_detector/models/hub/yolov5-fpn.yaml +42 -0

- metadata/predictor_yolo_detector/models/hub/yolov5-panet.yaml +48 -0

- metadata/predictor_yolo_detector/models/yolo.py +283 -0

- metadata/predictor_yolo_detector/models/yolov5l.yaml +48 -0

- metadata/predictor_yolo_detector/models/yolov5m.yaml +48 -0

- metadata/predictor_yolo_detector/models/yolov5s.yaml +48 -0

- metadata/predictor_yolo_detector/models/yolov5x.yaml +48 -0

- metadata/predictor_yolo_detector/runs/exp0_yolov5s_results/events.out.tfevents.1604565595.828c870bfd5d.342.0 +3 -0

- metadata/predictor_yolo_detector/runs/exp0_yolov5s_results/hyp.yaml +27 -0

- metadata/predictor_yolo_detector/runs/exp0_yolov5s_results/opt.yaml +31 -0

- metadata/predictor_yolo_detector/runs/exp1_yolov5s_results/events.out.tfevents.1604565658.828c870bfd5d.369.0 +3 -0

- metadata/predictor_yolo_detector/runs/exp1_yolov5s_results/hyp.yaml +27 -0

- metadata/predictor_yolo_detector/runs/exp1_yolov5s_results/labels.png +0 -0

- metadata/predictor_yolo_detector/runs/exp1_yolov5s_results/labels_correlogram.png +0 -0

- metadata/predictor_yolo_detector/runs/exp1_yolov5s_results/opt.yaml +31 -0

- metadata/predictor_yolo_detector/runs/exp1_yolov5s_results/precision-recall_curve.png +0 -0

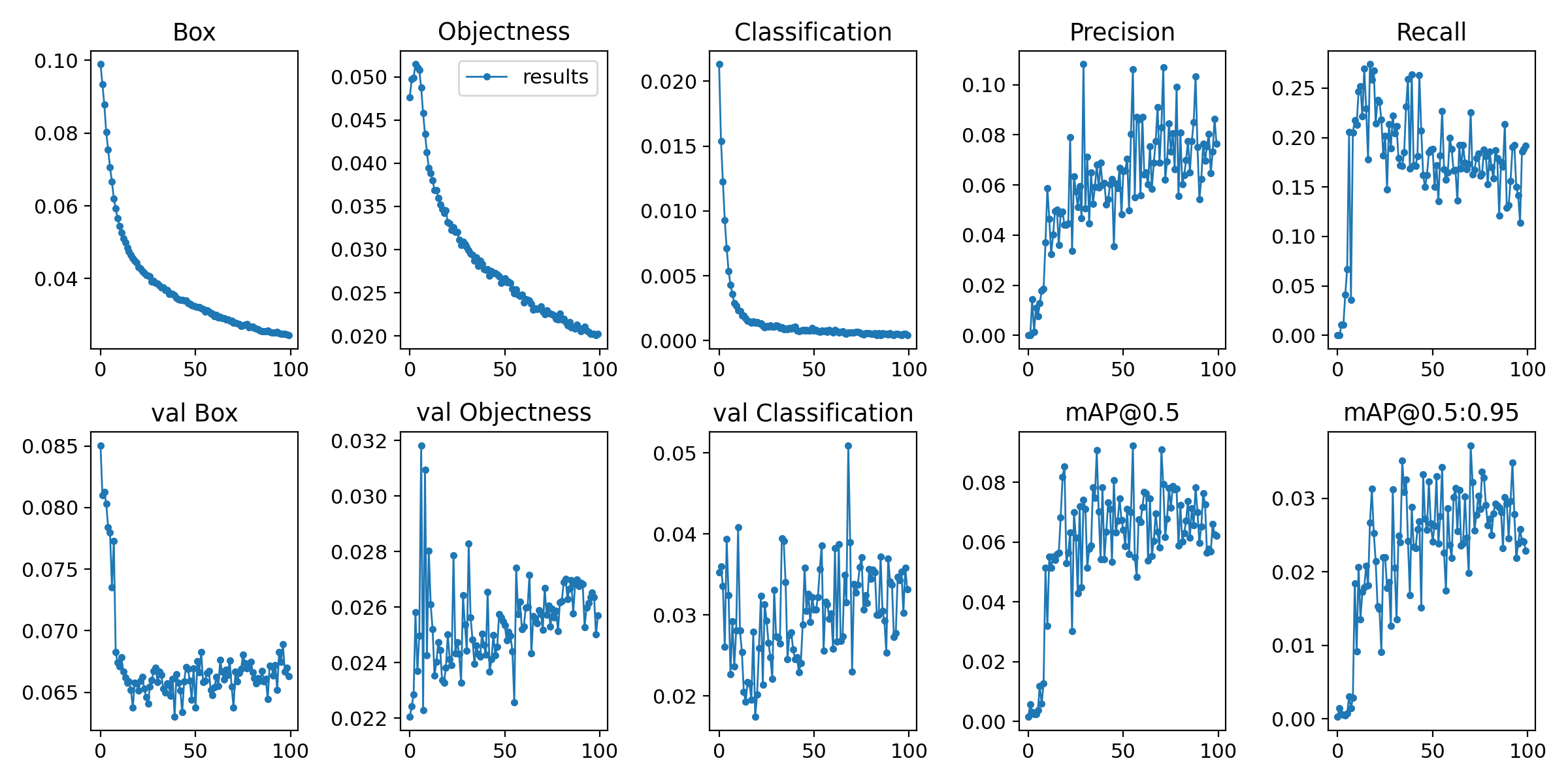

- metadata/predictor_yolo_detector/runs/exp1_yolov5s_results/results.png +0 -0

- metadata/predictor_yolo_detector/runs/exp1_yolov5s_results/results.txt +100 -0

- metadata/predictor_yolo_detector/runs/exp1_yolov5s_results/test_batch0_gt.jpg +0 -0

- metadata/predictor_yolo_detector/runs/exp1_yolov5s_results/test_batch0_pred.jpg +0 -0

.gitattributes

CHANGED

`@@ -25,3 +25,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text`

```diff
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zstandard filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+**.pt filter=lfs diff=lfs merge=lfs -text
```

.gitignore

ADDED

`@@ -0,0 +1,5 @@`

```
.ipynb_checkpoints
.vscode
flagged/
resolute/
.idea/
```

app.py

ADDED

`@@ -0,0 +1,47 @@`

```python
from charset_normalizer import detect
import numpy as np
import gradio as gr
import torch
import torch.nn as nn
import cv2
import os
from numpy import random
from metadata.utils.utils import decodeImage
from metadata.predictor_yolo_detector.detector_test import Detector
from PIL import Image

class ClientApp:
    def __init__(self):
        self.filename = "inputImage.jpg"
        #modelPath = 'research/ssd_mobilenet_v1_coco_2017_11_17'
        self.objectDetection = Detector(self.filename)




clApp = ClientApp()

def predict_image(input_img):

    img = Image.fromarray(input_img)
    img.save("./metadata/predictor_yolo_detector/inference/images/"+ clApp.filename)
    resultant_img = clApp.objectDetection.detect_action()


    return resultant_img

demo = gr.Blocks()

with demo:
    gr.Markdown(
        """
        <h1 align = "center"> Warehouse Apparel Detection </h1>
        """)

    detect = gr.Interface(predict_image, 'image', 'image', examples=[
        os.path.join(os.path.dirname(__file__), "images/image_1.jpg"),
        os.path.join(os.path.dirname(__file__), "images/image_2.jpg"),
        os.path.join(os.path.dirname(__file__), "images/image_3.jpg")
    ])

demo.launch()
```
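
The color handling in this Space round-trips through disk: `predict_image` receives an RGB array from Gradio and saves it with PIL, `LoadImages` presumably reads it back with OpenCV in BGR order (`utils/datasets.py` is not shown in this view), and `detect()` converts `im0` with `cv2.COLOR_RGB2BGR` before returning it. For 3-channel images that constant and `cv2.COLOR_BGR2RGB` perform the same channel reversal, so the returned array is RGB again despite the constant's name. A minimal NumPy sketch of that swap (`swap_rb` is a hypothetical helper, not part of this commit):

```python
import numpy as np


def swap_rb(image: np.ndarray) -> np.ndarray:
    """Reverse the channel axis of an HxWx3 image (RGB <-> BGR).

    Equivalent to cv2.cvtColor(image, cv2.COLOR_RGB2BGR) for 3-channel input;
    the same operation also converts BGR back to RGB.
    """
    if image.ndim != 3 or image.shape[2] != 3:
        raise ValueError("expected an HxWx3 array")
    # The [::-1] slice is a strided view; ascontiguousarray copies it so that
    # PIL or OpenCV receive an ordinary C-contiguous buffer.
    return np.ascontiguousarray(image[:, :, ::-1])


# A 1x2 "image": one pure-red pixel and one pure-blue pixel in RGB order.
rgb = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)
bgr = swap_rb(rgb)
print(bgr[0, 0].tolist())                      # [0, 0, 255]
print(swap_rb(bgr).tolist() == rgb.tolist())   # True: swapping twice round-trips
```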

images/image_1.jpg

ADDED

images/image_2.jpg

ADDED

images/image_3.jpg

ADDED

metadata/dataset_utils/dataset_downloader.py

ADDED

`@@ -0,0 +1,21 @@`

```python
import gdown
from zipfile import ZipFile

# Original Link :- https://drive.google.com/file/d/14QoqoZQLYnUmZgYblmFZ2u2eHo9yv2aA/view?usp=sharing
url = 'https://drive.google.com/uc?id=14QoqoZQLYnUmZgYblmFZ2u2eHo9yv2aA'
output = 'Fire_smoke.zip'

gdown.download(url, output, quiet=False)

# specifying the zip file name
file_name = output

# opening the zip file in READ mode
with ZipFile(file_name, 'r') as zip:
    # printing all the contents of the zip file
    zip.printdir()

    # extracting all the files
    print('Extracting all the files now...')
    zip.extractall()
    print('Done!')
```
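
The script downloads `Fire_smoke.zip` unconditionally and binds the open archive to the name `zip`, shadowing Python's built-in `zip()`. A minimal sketch of the extraction step, assuming the archive is already on disk and should be unpacked into an explicit directory (`extract_archive` and its `dest` parameter are hypothetical); the script's `gdown.download` call would only need to run when the archive is missing:

```python
import os
from zipfile import ZipFile


def extract_archive(archive_path: str, dest: str = ".") -> list:
    """Extract every member of a zip archive into dest and return the member names."""
    if not os.path.isfile(archive_path):
        raise FileNotFoundError(archive_path)
    os.makedirs(dest, exist_ok=True)
    # Named `archive` rather than `zip`, so the built-in zip() stays usable.
    with ZipFile(archive_path, "r") as archive:
        names = archive.namelist()
        archive.extractall(dest)
    return names


if __name__ == "__main__":
    import tempfile

    # Build a tiny archive in a temporary directory and extract it.
    with tempfile.TemporaryDirectory() as tmp:
        demo_zip = os.path.join(tmp, "demo.zip")
        with ZipFile(demo_zip, "w") as archive:
            archive.writestr("labels/sample.txt", "0 0.5 0.5 0.2 0.2\n")
        print(extract_archive(demo_zip, os.path.join(tmp, "out")))  # ['labels/sample.txt']
```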

metadata/predictor_yolo_detector/__pycache__/detector_test.cpython-37.pyc

ADDED

Binary file (5.53 kB).

metadata/predictor_yolo_detector/__pycache__/detector_test.cpython-38.pyc

ADDED

Binary file (5.05 kB).

metadata/predictor_yolo_detector/best.pt

ADDED

`@@ -0,0 +1,3 @@`

```
version https://git-lfs.github.com/spec/v1
oid sha256:26c75a28c481bd9a22759e8b2a2a4a9be08bee37a864aed6cd442a1b3e199b0c
size 14785730
```
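
Because `**.pt` is now tracked by Git LFS, the repository stores only this three-line pointer; the 14,785,730-byte weights live in LFS storage. If the LFS object is not fetched, `best.pt` on disk is this text file, and `attempt_load` would most likely fail to unpickle it. A small sketch that parses such a pointer and checks whether a local file is a pointer rather than real weights (`parse_lfs_pointer` and `looks_like_lfs_pointer` are hypothetical helpers; the `version`/`oid`/`size` keys follow the pointer shown above):

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse a Git LFS pointer ("key value" per line) into algo, digest and size."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    if not fields.get("version", "").startswith("https://git-lfs.github.com/spec/"):
        raise ValueError("not a Git LFS pointer")
    algo, _, digest = fields["oid"].partition(":")
    return {"algo": algo, "digest": digest, "size": int(fields["size"])}


def looks_like_lfs_pointer(path: str) -> bool:
    """True if the file at path starts like an LFS pointer instead of binary weights."""
    with open(path, "rb") as f:
        head = f.read(64)
    return head.startswith(b"version https://git-lfs.github.com/spec/")


pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:26c75a28c481bd9a22759e8b2a2a4a9be08bee37a864aed6cd442a1b3e199b0c
size 14785730
"""
info = parse_lfs_pointer(pointer)
print(info["algo"], info["size"])  # sha256 14785730
```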

metadata/predictor_yolo_detector/detector_test.py

ADDED

`@@ -0,0 +1,176 @@`

```python
import os
import shutil
import time
from pathlib import Path

import cv2
import torch
import torch.backends.cudnn as cudnn
from numpy import random
from PIL import Image

from metadata.utils.utils import encodeImageIntoBase64

import sys
sys.path.insert(0, 'metadata/predictor_yolo_detector')

from metadata.predictor_yolo_detector.models.experimental import attempt_load
from metadata.predictor_yolo_detector.utils.datasets import LoadStreams, LoadImages
from metadata.predictor_yolo_detector.utils.general import (
    check_img_size, non_max_suppression, apply_classifier, scale_coords,
    xyxy2xywh, plot_one_box, strip_optimizer, set_logging)
from metadata.predictor_yolo_detector.utils.torch_utils import select_device, load_classifier, \
    time_synchronized


class Detector():
    def __init__(self, filename):
        self.weights = "./metadata/predictor_yolo_detector/best.pt"
        self.conf = float(0.5)
        self.source = "./metadata/predictor_yolo_detector/inference/images/"
        self.img_size = int(416)
        self.save_dir = "./metadata/predictor_yolo_detector/inference/output"
        self.view_img = False
        self.save_txt = False
        self.device = 'cpu'
        self.augment = True
        self.agnostic_nms = True
        self.conf_thres = float(0.5)
        self.iou_thres = float(0.45)
        self.classes = 0
        self.save_conf = True
        self.update = True
        self.filename = filename

    def detect(self, save_img=False):
        out, source, weights, view_img, save_txt, imgsz = \
            self.save_dir, self.source, self.weights, self.view_img, self.save_txt, self.img_size
        webcam = source.isnumeric() or source.startswith(('rtsp://', 'rtmp://', 'http://')) or source.endswith('.txt')

        # Initialize
        set_logging()
        device = select_device(self.device)
        if os.path.exists(out):  # output dir
            shutil.rmtree(out)  # delete dir
        os.makedirs(out)  # make new dir
        half = device.type != 'cpu'  # half precision only supported on CUDA

        # Load model
        model = attempt_load(weights, map_location=device)  # load FP32 model
        imgsz = check_img_size(imgsz, s=model.stride.max())  # check img_size
        if half:
            model.half()  # to FP16

        # Second-stage classifier
        classify = False
        if classify:
            modelc = load_classifier(name='resnet101', n=2)  # initialize
            modelc.load_state_dict(torch.load('weights/resnet101.pt', map_location=device)['model'])  # load weights
            modelc.to(device).eval()

        # Set Dataloader
        vid_path, vid_writer = None, None
        if webcam:
            view_img = True
            cudnn.benchmark = True  # set True to speed up constant image size inference
            dataset = LoadStreams(source, img_size=imgsz)
        else:
            save_img = True
            dataset = LoadImages(source, img_size=imgsz)

        # Get names and colors
        names = model.module.names if hasattr(model, 'module') else model.names
        colors = [[random.randint(0, 255) for _ in range(3)] for _ in range(len(names))]

        # Run inference
        t0 = time.time()
        img = torch.zeros((1, 3, imgsz, imgsz), device=device)  # init img
        _ = model(img.half() if half else img) if device.type != 'cpu' else None  # run once
        for path, img, im0s, vid_cap in dataset:
            img = torch.from_numpy(img).to(device)
            img = img.half() if half else img.float()  # uint8 to fp16/32
            img /= 255.0  # 0 - 255 to 0.0 - 1.0
            if img.ndimension() == 3:
                img = img.unsqueeze(0)

            # Inference
            t1 = time_synchronized()
            pred = model(img, augment=self.augment)[0]

            # Apply NMS
            pred = non_max_suppression(pred, self.conf_thres, self.iou_thres, classes=self.classes,
                                       agnostic=self.agnostic_nms)
            t2 = time_synchronized()

            # Apply Classifier
            if classify:
                pred = apply_classifier(pred, modelc, img, im0s)

            # Process detections
            for i, det in enumerate(pred):  # detections per image
                if webcam:  # batch_size >= 1
                    p, s, im0 = path[i], '%g: ' % i, im0s[i].copy()
                else:
                    p, s, im0 = path, '', im0s

                save_path = str(Path(out) / Path(p).name)
                txt_path = str(Path(out) / Path(p).stem) + ('_%g' % dataset.frame if dataset.mode == 'video' else '')
                s += '%gx%g ' % img.shape[2:]  # print string
                gn = torch.tensor(im0.shape)[[1, 0, 1, 0]]  # normalization gain whwh
                if det is not None and len(det):
                    # Rescale boxes from img_size to im0 size
                    det[:, :4] = scale_coords(img.shape[2:], det[:, :4], im0.shape).round()

                    # Print results
                    for c in det[:, -1].unique():
                        n = (det[:, -1] == c).sum()  # detections per class
                        s += '%g %ss, ' % (n, names[int(c)])  # add to string

                    # Write results
                    for *xyxy, conf, cls in reversed(det):
                        if save_txt:  # Write to file
                            xywh = (xyxy2xywh(torch.tensor(xyxy).view(1, 4)) / gn).view(-1).tolist()  # normalized xywh
                            line = (cls, conf, *xywh) if self.save_conf else (cls, *xywh)  # label format
                            with open(txt_path + '.txt', 'a') as f:
                                f.write(('%g ' * len(line) + '\n') % line)

                        if save_img or view_img:  # Add bbox to image
                            label = '%s %.2f' % (names[int(cls)], conf)
                            plot_one_box(xyxy, im0, label=label, color=colors[int(cls)], line_thickness=3)

                # Print time (inference + NMS)
                # print('%sDone. (%.3fs)' % (s, t2 - t1))
                # detections = "Total No. of Cardboards:" + str(len(det))
                # cv2.putText(img = im0, text = detections, org = (round(im0.shape[0]*0.08), round(im0.shape[1]*0.08)),fontFace = cv2.FONT_HERSHEY_DUPLEX, fontScale = 1.0,color = (0, 0, 255),thickness = 3)
                im0 = cv2.cvtColor(im0, cv2.COLOR_RGB2BGR)
                return im0
                # if save_img:
                #     if dataset.mode == 'images':

                #         #im = im0[:, :, ::-1]
                #         im = Image.fromarray(im0)

                #         im.save("output.jpg")
                #         # cv2.imwrite(save_path, im0)
                #     else:
                #         print("Video Processing Needed")


        # if save_txt or save_img:
        #     print('Results saved to %s' % Path(out))

        # print('Done. (%.3fs)' % (time.time() - t0))

        # return "Done"

    def detect_action(self):
        with torch.no_grad():
            img = self.detect()
        return img
        # bgr_image = cv2.imread("output.jpg")
        # im_rgb = cv2.cvtColor(bgr_image, cv2.COLOR_RGB2BGR)
        # cv2.imwrite('color_img.jpg', im_rgb)
        # opencodedbase64 = encodeImageIntoBase64("color_img.jpg")
        # result = {"image": opencodedbase64.decode('utf-8')}
        # return result
```
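
`detect()` filters raw predictions with `non_max_suppression(pred, self.conf_thres, self.iou_thres, classes=self.classes, agnostic=self.agnostic_nms)`, whose source (`utils/general.py`) is not part of this view. The core idea can be sketched in plain Python as greedy NMS: drop boxes at or below the confidence threshold, then repeatedly keep the highest-scoring box and discard any remaining box that overlaps a kept one by more than the IoU threshold. With `agnostic=True` (as configured here) boxes of different classes suppress each other; otherwise suppression only happens within a class. This is a simplified illustration, not the YOLOv5 implementation, which operates on batched tensors and first multiplies objectness by class scores. Whether `classes=0` filters anything depends on how the real function tests that argument (a truthiness check like `if classes:` treats `0` as "no filter"), so the sketch tests `is not None` explicitly. `greedy_nms` and `iou` are hypothetical names.

```python
from typing import List, Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # x1, y1, x2, y2
Detection = Tuple[Box, float, int]        # box, confidence, class id


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two xyxy boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(dets: Sequence[Detection], conf_thres: float = 0.5, iou_thres: float = 0.45,
               classes: Optional[Sequence[int]] = None, agnostic: bool = True) -> List[Detection]:
    # 1. Confidence threshold and optional class filter.
    cands = [d for d in dets if d[1] > conf_thres and (classes is None or d[2] in classes)]
    # 2. Visit candidates from highest to lowest confidence.
    cands.sort(key=lambda d: d[1], reverse=True)
    kept: List[Detection] = []
    for d in cands:
        # 3. Keep d unless an already-kept box (same class, unless agnostic) overlaps it too much.
        if all(iou(d[0], k[0]) <= iou_thres for k in kept if agnostic or k[2] == d[2]):
            kept.append(d)
    return kept


dets = [
    ((10, 10, 50, 50), 0.90, 0),
    ((12, 12, 52, 52), 0.80, 1),      # overlaps the first box heavily, different class
    ((100, 100, 140, 140), 0.70, 0),
    ((0, 0, 5, 5), 0.30, 0),          # below conf_thres
]
print(len(greedy_nms(dets, agnostic=True)))   # 2
print(len(greedy_nms(dets, agnostic=False)))  # 3
```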

metadata/predictor_yolo_detector/inference/images/inputImage.jpg

ADDED

metadata/predictor_yolo_detector/models/__init__.py

ADDED

File without changes

metadata/predictor_yolo_detector/models/__pycache__/__init__.cpython-36.pyc

ADDED

Binary file (117 Bytes).

metadata/predictor_yolo_detector/models/__pycache__/__init__.cpython-37.pyc

ADDED

Binary file (204 Bytes).

metadata/predictor_yolo_detector/models/__pycache__/__init__.cpython-38.pyc

ADDED

Binary file (198 Bytes).

metadata/predictor_yolo_detector/models/__pycache__/common.cpython-36.pyc

ADDED

Binary file (8.92 kB).

metadata/predictor_yolo_detector/models/__pycache__/common.cpython-37.pyc

ADDED

Binary file (9.06 kB).

metadata/predictor_yolo_detector/models/__pycache__/common.cpython-38.pyc

ADDED

Binary file (8.92 kB).

metadata/predictor_yolo_detector/models/__pycache__/experimental.cpython-36.pyc

ADDED

Binary file (6.76 kB).

metadata/predictor_yolo_detector/models/__pycache__/experimental.cpython-37.pyc

ADDED

Binary file (6.91 kB).

metadata/predictor_yolo_detector/models/__pycache__/experimental.cpython-38.pyc

ADDED

Binary file (6.78 kB).

metadata/predictor_yolo_detector/models/__pycache__/yolo.cpython-36.pyc

ADDED

Binary file (9.85 kB).

metadata/predictor_yolo_detector/models/__pycache__/yolo.cpython-37.pyc

ADDED

Binary file (9.83 kB).

metadata/predictor_yolo_detector/models/__pycache__/yolo.cpython-38.pyc

ADDED

Binary file (9.79 kB).

metadata/predictor_yolo_detector/models/common.py

ADDED

`@@ -0,0 +1,189 @@`

```python
# This file contains modules common to various models

import math

import numpy as np
import torch
import torch.nn as nn

from metadata.predictor_yolo_detector.utils.datasets import letterbox
from metadata.predictor_yolo_detector.utils.general import non_max_suppression, make_divisible, \
    scale_coords


def autopad(k, p=None):  # kernel, padding
    # Pad to 'same'
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]  # auto-pad
    return p


def DWConv(c1, c2, k=1, s=1, act=True):
    # Depthwise convolution
    return Conv(c1, c2, k, s, g=math.gcd(c1, c2), act=act)


class Conv(nn.Module):
    # Standard convolution
    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True):  # ch_in, ch_out, kernel, stride, padding, groups
        super(Conv, self).__init__()
        self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = nn.Hardswish() if act else nn.Identity()

    def forward(self, x):
        return self.act(self.bn(self.conv(x)))

    def fuseforward(self, x):
        return self.act(self.conv(x))
```
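
`autopad` chooses padding `k // 2`, which for odd kernels keeps the spatial size unchanged at stride 1; the stride-2 `Conv(..., 3, 2)` layers in the model YAML therefore halve it, rounding up for odd sizes. The same rule covers `SPP`'s max-pools, which use `padding=x // 2` with stride 1. A pure-Python check using the standard output-size formula `floor((n + 2p - k) / s) + 1` (dilation 1; `conv_out` is a hypothetical helper, and `autopad` is copied from the file above):

```python
def autopad(k, p=None):
    # Same rule as common.py: half the kernel size, per dimension if k is a sequence.
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]
    return p


def conv_out(n: int, k: int, s: int = 1, p=None) -> int:
    """Spatial output size of a 2D convolution or max-pool with dilation 1."""
    p = autopad(k, p)
    return (n + 2 * p - k) // s + 1


print(conv_out(416, 3, 1))  # 416: 'same' size at stride 1
print(conv_out(416, 3, 2))  # 208: halved at stride 2
print(conv_out(13, 13, 1))  # 13: SPP-style max-pool with padding k // 2
```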

```python
class Bottleneck(nn.Module):
    # Standard bottleneck
    def __init__(self, c1, c2, shortcut=True, g=1, e=0.5):  # ch_in, ch_out, shortcut, groups, expansion
        super(Bottleneck, self).__init__()
        c_ = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, c_, 1, 1)
        self.cv2 = Conv(c_, c2, 3, 1, g=g)
        self.add = shortcut and c1 == c2

    def forward(self, x):
        return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))


class BottleneckCSP(nn.Module):
    # CSP Bottleneck https://github.com/WongKinYiu/CrossStagePartialNetworks
    def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):  # ch_in, ch_out, number, shortcut, groups, expansion
        super(BottleneckCSP, self).__init__()
        c_ = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, c_, 1, 1)
        self.cv2 = nn.Conv2d(c1, c_, 1, 1, bias=False)
        self.cv3 = nn.Conv2d(c_, c_, 1, 1, bias=False)
        self.cv4 = Conv(2 * c_, c2, 1, 1)
        self.bn = nn.BatchNorm2d(2 * c_)  # applied to cat(cv2, cv3)
        self.act = nn.LeakyReLU(0.1, inplace=True)
        self.m = nn.Sequential(*[Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)])

    def forward(self, x):
        y1 = self.cv3(self.m(self.cv1(x)))
        y2 = self.cv2(x)
        return self.cv4(self.act(self.bn(torch.cat((y1, y2), dim=1))))


class SPP(nn.Module):
    # Spatial pyramid pooling layer used in YOLOv3-SPP
    def __init__(self, c1, c2, k=(5, 9, 13)):
        super(SPP, self).__init__()
        c_ = c1 // 2  # hidden channels
        self.cv1 = Conv(c1, c_, 1, 1)
        self.cv2 = Conv(c_ * (len(k) + 1), c2, 1, 1)
        self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])

    def forward(self, x):
        x = self.cv1(x)
        return self.cv2(torch.cat([x] + [m(x) for m in self.m], 1))


class Focus(nn.Module):
    # Focus wh information into c-space
    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True):  # ch_in, ch_out, kernel, stride, padding, groups
        super(Focus, self).__init__()
        self.conv = Conv(c1 * 4, c2, k, s, p, g, act)

    def forward(self, x):  # x(b,c,w,h) -> y(b,4c,w/2,h/2)
        return self.conv(torch.cat([x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]], 1))
```
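
`Focus` is a space-to-depth step: its four strided slices take every other pixel at row/column offsets (0,0), (1,0), (0,1) and (1,1), so an input of shape (b, c, h, w) becomes (b, 4c, h/2, w/2) before the convolution, without discarding any pixel. A NumPy sketch of the same slicing, leaving out the following `Conv` and assuming even h and w (`focus_slice` is a hypothetical name):

```python
import numpy as np


def focus_slice(x: np.ndarray) -> np.ndarray:
    """Space-to-depth as in Focus.forward, minus the conv: (b, c, h, w) -> (b, 4c, h/2, w/2)."""
    return np.concatenate(
        [x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]], axis=1
    )


x = np.arange(1 * 3 * 416 * 416, dtype=np.float32).reshape(1, 3, 416, 416)
y = focus_slice(x)
print(y.shape)  # (1, 12, 208, 208)
# Every input value appears exactly once in the output.
print(np.array_equal(np.sort(x, axis=None), np.sort(y, axis=None)))  # True
```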

```python
class Concat(nn.Module):
    # Concatenate a list of tensors along dimension
    def __init__(self, dimension=1):
        super(Concat, self).__init__()
        self.d = dimension

    def forward(self, x):
        return torch.cat(x, self.d)


class NMS(nn.Module):
    # Non-Maximum Suppression (NMS) module
    conf = 0.25  # confidence threshold
    iou = 0.45  # IoU threshold
    classes = None  # (optional list) filter by class

    def __init__(self):
        super(NMS, self).__init__()

    def forward(self, x):
        return non_max_suppression(x[0], conf_thres=self.conf, iou_thres=self.iou, classes=self.classes)


class autoShape(nn.Module):
    # input-robust model wrapper for passing cv2/np/PIL/torch inputs. Includes preprocessing, inference and NMS
    img_size = 640  # inference size (pixels)
    conf = 0.25  # NMS confidence threshold
    iou = 0.45  # NMS IoU threshold
    classes = None  # (optional list) filter by class

    def __init__(self, model):
        super(autoShape, self).__init__()
        self.model = model

    def forward(self, x, size=640, augment=False, profile=False):
        # supports inference from various sources. For height=720, width=1280, RGB images example inputs are:
        # opencv: x = cv2.imread('image.jpg')[:,:,::-1] # HWC BGR to RGB x(720,1280,3)
        # PIL: x = Image.open('image.jpg') # HWC x(720,1280,3)
        # numpy: x = np.zeros((720,1280,3)) # HWC
        # torch: x = torch.zeros(16,3,720,1280) # BCHW
        # multiple: x = [Image.open('image1.jpg'), Image.open('image2.jpg'), ...] # list of images

        p = next(self.model.parameters())  # for device and type
        if isinstance(x, torch.Tensor):  # torch
            return self.model(x.to(p.device).type_as(p), augment, profile)  # inference

        # Pre-process
        if not isinstance(x, list):
            x = [x]
        shape0, shape1 = [], []  # image and inference shapes
        batch = range(len(x))  # batch size
        for i in batch:
            x[i] = np.array(x[i])  # to numpy
            x[i] = x[i][:, :, :3] if x[i].ndim == 3 else np.tile(x[i][:, :, None], 3)  # enforce 3ch input
            s = x[i].shape[:2]  # HWC
            shape0.append(s)  # image shape
            g = (size / max(s))  # gain
            shape1.append([y * g for y in s])
        shape1 = [make_divisible(x, int(self.stride.max())) for x in np.stack(shape1, 0).max(0)]  # inference shape
        x = [letterbox(x[i], new_shape=shape1, auto=False)[0] for i in batch]  # pad
        x = np.stack(x, 0) if batch[-1] else x[0][None]  # stack
        x = np.ascontiguousarray(x.transpose((0, 3, 1, 2)))  # BHWC to BCHW
        x = torch.from_numpy(x).to(p.device).type_as(p) / 255.  # uint8 to fp16/32

        # Inference
        x = self.model(x, augment, profile)  # forward
        x = non_max_suppression(x[0], conf_thres=self.conf, iou_thres=self.iou, classes=self.classes)  # NMS

        # Post-process
        for i in batch:
            if x[i] is not None:
                x[i][:, :4] = scale_coords(shape1, x[i][:, :4], shape0[i])
        return x


class Flatten(nn.Module):
    # Use after nn.AdaptiveAvgPool2d(1) to remove last 2 dimensions
    @staticmethod
    def forward(x):
        return x.view(x.size(0), -1)


class Classify(nn.Module):
    # Classification head, i.e. x(b,c1,20,20) to x(b,c2)
    def __init__(self, c1, c2, k=1, s=1, p=None, g=1):  # ch_in, ch_out, kernel, stride, padding, groups
        super(Classify, self).__init__()
        self.aap = nn.AdaptiveAvgPool2d(1)  # to x(b,c1,1,1)
        self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=False)  # to x(b,c2,1,1)
        self.flat = Flatten()

    def forward(self, x):
        z = torch.cat([self.aap(y) for y in (x if isinstance(x, list) else [x])], 1)  # cat if list
        return self.flat(self.conv(z))  # flatten to x(b,c2)
```
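
Both `autoShape.forward` and `Detector.detect()` map boxes from the letterboxed inference image back to the original image with `scale_coords`, which lives in `utils/general.py` (not shown in this view). Assuming the usual letterbox scheme (resize by one gain that fits the image inside the inference shape, then pad the leftover width or height equally on both sides), the inverse subtracts the padding, divides by the gain and clips to the original image. A pure-Python sketch of that inverse for a single box (`unletterbox_box` is a hypothetical name; shapes are `(height, width)`):

```python
from typing import List, Sequence, Tuple


def unletterbox_box(box: Sequence[float], inf_shape: Tuple[int, int],
                    orig_shape: Tuple[int, int]) -> List[float]:
    """Map an xyxy box from a letterboxed (h, w) image back to the original (h, w) image."""
    gain = min(inf_shape[0] / orig_shape[0], inf_shape[1] / orig_shape[1])
    pad_x = (inf_shape[1] - orig_shape[1] * gain) / 2  # horizontal padding per side
    pad_y = (inf_shape[0] - orig_shape[0] * gain) / 2  # vertical padding per side
    x1, y1, x2, y2 = box
    out = [(x1 - pad_x) / gain, (y1 - pad_y) / gain, (x2 - pad_x) / gain, (y2 - pad_y) / gain]
    # Clip x to [0, width] and y to [0, height] of the original image.
    h, w = orig_shape
    return [min(max(out[0], 0), w), min(max(out[1], 0), h),
            min(max(out[2], 0), w), min(max(out[3], 0), h)]


# A 720x1280 frame letterboxed to 416x416: gain = 0.325, 91 px of padding top and bottom.
# The box covering the whole non-padded area should map back to the full frame.
print(unletterbox_box((0, 91, 416, 325), (416, 416), (720, 1280)))
```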

metadata/predictor_yolo_detector/models/custom_yolov5s.yaml

ADDED

`@@ -0,0 +1,48 @@`

```yaml
# parameters
nc: 2  # number of classes
depth_multiple: 0.33  # model depth multiple
width_multiple: 0.50  # layer channel multiple
```

| 6 |

+

# anchors

|

| 7 |

+

anchors:

|

| 8 |

+

- [ 10,13, 16,30, 33,23 ] # P3/8

|

| 9 |

+

- [ 30,61, 62,45, 59,119 ] # P4/16

|

| 10 |

+

- [ 116,90, 156,198, 373,326 ] # P5/32

|

| 11 |

+

|

| 12 |

+

# YOLOv5 backbone

|

| 13 |

+

backbone:

|

| 14 |

+

# [from, number, module, args]

|

| 15 |

+

[ [ -1, 1, Focus, [ 64, 3 ] ], # 0-P1/2

|

| 16 |

+

[ -1, 1, Conv, [ 128, 3, 2 ] ], # 1-P2/4

|

| 17 |

+

[ -1, 3, BottleneckCSP, [ 128 ] ],

|

| 18 |

+

[ -1, 1, Conv, [ 256, 3, 2 ] ], # 3-P3/8

|

| 19 |

+

[ -1, 9, BottleneckCSP, [ 256 ] ],

|

| 20 |

+

[ -1, 1, Conv, [ 512, 3, 2 ] ], # 5-P4/16

|

| 21 |

+

[ -1, 9, BottleneckCSP, [ 512 ] ],

|

| 22 |

+

[ -1, 1, Conv, [ 1024, 3, 2 ] ], # 7-P5/32

|

| 23 |

+

[ -1, 1, SPP, [ 1024, [ 5, 9, 13 ] ] ],

|

| 24 |

+

[ -1, 3, BottleneckCSP, [ 1024, False ] ], # 9

|

| 25 |

+

]

|

| 26 |

+

|

| 27 |

+

# YOLOv5 head

|

| 28 |

+

head:

|

| 29 |

+

[ [ -1, 1, Conv, [ 512, 1, 1 ] ],

|

| 30 |

+

[ -1, 1, nn.Upsample, [ None, 2, 'nearest' ] ],

|

| 31 |

+

[ [ -1, 6 ], 1, Concat, [ 1 ] ], # cat backbone P4

|

| 32 |

+

[ -1, 3, BottleneckCSP, [ 512, False ] ], # 13

|

| 33 |

+

|

| 34 |

+

[ -1, 1, Conv, [ 256, 1, 1 ] ],

|

| 35 |

+

[ -1, 1, nn.Upsample, [ None, 2, 'nearest' ] ],

|

| 36 |

+

[ [ -1, 4 ], 1, Concat, [ 1 ] ], # cat backbone P3

|

| 37 |

+

[ -1, 3, BottleneckCSP, [ 256, False ] ], # 17 (P3/8-small)

|

| 38 |

+

|

| 39 |

+

[ -1, 1, Conv, [ 256, 3, 2 ] ],

|

| 40 |

+

[ [ -1, 14 ], 1, Concat, [ 1 ] ], # cat head P4

|

| 41 |

+

[ -1, 3, BottleneckCSP, [ 512, False ] ], # 20 (P4/16-medium)

|

| 42 |

+

|

| 43 |

+

[ -1, 1, Conv, [ 512, 3, 2 ] ],

|

| 44 |

+

[ [ -1, 10 ], 1, Concat, [ 1 ] ], # cat head P5

|

| 45 |

+

[ -1, 3, BottleneckCSP, [ 1024, False ] ], # 23 (P5/32-large)

|

| 46 |

+

|

| 47 |

+

[ [ 17, 20, 23 ], 1, Detect, [ nc, anchors ] ], # Detect(P3, P4, P5)

|

| 48 |

+

]
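To see what this config's top-level parameters turn into, here is a small pure-Python sketch. It assumes the model builder (`parse_model` in `models/yolo.py`, not shown in this diff) follows upstream YOLOv5. Under that assumption, repeat counts greater than 1 become `max(round(n * depth_multiple), 1)`, and output channels become `c * width_multiple` rounded up to a multiple of 8. It also derives per-level grid sizes for a square input from the P3/8, P4/16 and P5/32 strides named in the comments, with 3 anchors per level and `nc + 5` values per anchor. The function names are illustrative only.

```python
import math

NC = 2                  # nc from custom_yolov5s.yaml
DEPTH_MULTIPLE = 0.33   # depth_multiple
WIDTH_MULTIPLE = 0.50   # width_multiple
ANCHORS_PER_LEVEL = 3   # each anchors row holds 3 (w, h) pairs
STRIDES = (8, 16, 32)   # P3/8, P4/16, P5/32


def scaled_repeats(n):
    """Assumed depth scaling: only modules repeated more than once are shrunk."""
    return max(round(n * DEPTH_MULTIPLE), 1) if n > 1 else n


def scaled_channels(c, divisor=8):
    """Assumed width scaling: scale, then round up to a multiple of `divisor`."""
    return math.ceil(c * WIDTH_MULTIPLE / divisor) * divisor


def detection_layout(img_size=640):
    """Grid size per level, total anchor predictions, and values per prediction."""
    grids = [img_size // s for s in STRIDES]
    total = sum(ANCHORS_PER_LEVEL * g * g for g in grids)
    return grids, total, NC + 5  # +5 = box (x, y, w, h) + objectness


# Backbone entries from the yaml as (number, first arg = channels):
backbone = [(1, 64), (1, 128), (3, 128), (1, 256), (9, 256),
            (1, 512), (9, 512), (1, 1024), (1, 1024), (3, 1024)]
for n, c in backbone:
    print(n, c, '->', scaled_repeats(n), scaled_channels(c))
print(detection_layout(640))  # ([80, 40, 20], 25200, 7)
```

Under these assumptions the "s" variant's 9-repeat BottleneckCSP blocks shrink to 3 repeats and every channel count is halved, while the two-class head predicts 7 values per anchor.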


metadata/predictor_yolo_detector/models/experimental.py

ADDED


@@ -0,0 +1,152 @@


# This file contains experimental modules

import numpy as np
import torch
import torch.nn as nn

from metadata.predictor_yolo_detector.models.common import Conv, DWConv
from metadata.predictor_yolo_detector.utils.google_utils import attempt_download


class CrossConv(nn.Module):
    # Cross Convolution Downsample
    def __init__(self, c1, c2, k=3, s=1, g=1, e=1.0, shortcut=False):
        # ch_in, ch_out, kernel, stride, groups, expansion, shortcut
        super(CrossConv, self).__init__()
        c_ = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, c_, (1, k), (1, s))
        self.cv2 = Conv(c_, c2, (k, 1), (s, 1), g=g)
        self.add = shortcut and c1 == c2

    def forward(self, x):
        return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))


class C3(nn.Module):
    # Cross Convolution CSP
    def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):  # ch_in, ch_out, number, shortcut, groups, expansion
        super(C3, self).__init__()
        c_ = int(c2 * e)  # hidden channels
        self.cv1 = Conv(c1, c_, 1, 1)
        self.cv2 = nn.Conv2d(c1, c_, 1, 1, bias=False)
        self.cv3 = nn.Conv2d(c_, c_, 1, 1, bias=False)
        self.cv4 = Conv(2 * c_, c2, 1, 1)
        self.bn = nn.BatchNorm2d(2 * c_)  # applied to cat(cv2, cv3)
        self.act = nn.LeakyReLU(0.1, inplace=True)
        self.m = nn.Sequential(*[CrossConv(c_, c_, 3, 1, g, 1.0, shortcut) for _ in range(n)])

    def forward(self, x):
        y1 = self.cv3(self.m(self.cv1(x)))
        y2 = self.cv2(x)
        return self.cv4(self.act(self.bn(torch.cat((y1, y2), dim=1))))


class Sum(nn.Module):
    # Weighted sum of 2 or more layers https://arxiv.org/abs/1911.09070
    def __init__(self, n, weight=False):  # n: number of inputs
        super(Sum, self).__init__()
        self.weight = weight  # apply weights boolean
        self.iter = range(n - 1)  # iter object
        if weight:
            self.w = nn.Parameter(-torch.arange(1., n) / 2, requires_grad=True)  # layer weights

    def forward(self, x):
        y = x[0]  # no weight
        if self.weight:
            w = torch.sigmoid(self.w) * 2
            for i in self.iter:
                y = y + x[i + 1] * w[i]
        else:
            for i in self.iter:
                y = y + x[i + 1]
        return y


class GhostConv(nn.Module):
    # Ghost Convolution https://github.com/huawei-noah/ghostnet
    def __init__(self, c1, c2, k=1, s=1, g=1, act=True):  # ch_in, ch_out, kernel, stride, groups
        super(GhostConv, self).__init__()
        c_ = c2 // 2  # hidden channels
        self.cv1 = Conv(c1, c_, k, s, None, g, act)
        self.cv2 = Conv(c_, c_, 5, 1, None, c_, act)

    def forward(self, x):
        y = self.cv1(x)
        return torch.cat([y, self.cv2(y)], 1)


class GhostBottleneck(nn.Module):
    # Ghost Bottleneck https://github.com/huawei-noah/ghostnet
    def __init__(self, c1, c2, k, s):
        super(GhostBottleneck, self).__init__()
        c_ = c2 // 2
        self.conv = nn.Sequential(GhostConv(c1, c_, 1, 1),  # pw
                                  DWConv(c_, c_, k, s, act=False) if s == 2 else nn.Identity(),  # dw
                                  GhostConv(c_, c2, 1, 1, act=False))  # pw-linear
        self.shortcut = nn.Sequential(DWConv(c1, c1, k, s, act=False),
                                      Conv(c1, c2, 1, 1, act=False)) if s == 2 else nn.Identity()

    def forward(self, x):
        return self.conv(x) + self.shortcut(x)


class MixConv2d(nn.Module):
    # Mixed Depthwise Conv https://arxiv.org/abs/1907.09595
    def __init__(self, c1, c2, k=(1, 3), s=1, equal_ch=True):
        super(MixConv2d, self).__init__()
        groups = len(k)
        if equal_ch:  # equal c_ per group
            i = torch.linspace(0, groups - 1E-6, c2).floor()  # c2 indices
            c_ = [(i == g).sum() for g in range(groups)]  # intermediate channels
        else:  # equal weight.numel() per group
            b = [c2] + [0] * groups
            a = np.eye(groups + 1, groups, k=-1)
            a -= np.roll(a, 1, axis=1)
            a *= np.array(k) ** 2
            a[0] = 1
            c_ = np.linalg.lstsq(a, b, rcond=None)[0].round()  # solve for equal weight indices, ax = b

        self.m = nn.ModuleList([nn.Conv2d(c1, int(c_[g]), k[g], s, k[g] // 2, bias=False) for g in range(groups)])
        self.bn = nn.BatchNorm2d(c2)
        self.act = nn.LeakyReLU(0.1, inplace=True)

    def forward(self, x):
        return x + self.act(self.bn(torch.cat([m(x) for m in self.m], 1)))


class Ensemble(nn.ModuleList):
    # Ensemble of models
    def __init__(self):
        super(Ensemble, self).__init__()

    def forward(self, x, augment=False):
        y = []
        for module in self:
            y.append(module(x, augment)[0])
        # y = torch.stack(y).max(0)[0]  # max ensemble
        # y = torch.cat(y, 1)  # nms ensemble
        y = torch.stack(y).mean(0)  # mean ensemble
        return y, None  # inference, train output


def attempt_load(weights, map_location=None):
    # Loads an ensemble of models weights=[a,b,c] or a single model weights=[a] or weights=a
    model = Ensemble()
    for w in weights if isinstance(weights, list) else [weights]:
        attempt_download(w)
        model.append(torch.load(w, map_location=map_location)['model'].float().fuse().eval())  # load FP32 model

    # Compatibility updates
    for m in model.modules():
        if type(m) in [nn.Hardswish, nn.LeakyReLU, nn.ReLU, nn.ReLU6]:
            m.inplace = True  # pytorch 1.7.0 compatibility
        elif type(m) is Conv:
            m._non_persistent_buffers_set = set()  # pytorch 1.6.0 compatibility

    if len(model) == 1:
        return model[-1]  # return model
    else:
        print('Ensemble created with %s\n' % weights)
        for k in ['names', 'stride']:
            setattr(model, k, getattr(model[-1], k))
        return model  # return ensemble
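Two of the experimental modules hide a little arithmetic. With `weight=True`, `Sum` initialises its `n - 1` learnable weights to `-arange(1, n) / 2` and scales inputs by `2 * sigmoid(w)`. As a result every extra input starts with a weight below 1, and later inputs start smaller. `MixConv2d` with `equal_ch=True` assigns output channels to kernel sizes by flooring an evenly spaced ramp from 0 to `groups - 1e-6`. The pure-Python sketch below reproduces both calculations without PyTorch. The function names are illustrative, and `torch.linspace` works in float32, so results at a group boundary could in principle differ slightly from this float64 version.

```python
import math


def initial_sum_weights(n):
    """Effective weights 2 * sigmoid(w) that Sum(n, weight=True) starts with, where w = -[1..n-1] / 2."""
    return [2 / (1 + math.exp(k / 2)) for k in range(1, n)]


def weighted_sum(inputs, weights):
    """Scalar stand-in for Sum.forward: x[0] + sum(x[i + 1] * w[i])."""
    y = inputs[0]
    for i, w in enumerate(weights):
        y = y + inputs[i + 1] * w
    return y


def mixconv_split(c2, kernels):
    """Channels per kernel size in MixConv2d(equal_ch=True): floor(linspace(0, groups - 1e-6, c2))."""
    groups = len(kernels)
    step = (groups - 1e-6) / (c2 - 1) if c2 > 1 else 0.0
    idx = [math.floor(j * step) for j in range(c2)]  # group index of each output channel
    return [idx.count(g) for g in range(groups)]


w = initial_sum_weights(3)
print([round(v, 4) for v in w])
print(weighted_sum([1.0, 1.0, 1.0], w))
print(mixconv_split(8, (1, 3)), mixconv_split(8, (1, 3, 5)))
```

The 1e-6 offset keeps the last channel index strictly below `groups`, so flooring never produces an out-of-range group.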


metadata/predictor_yolo_detector/models/export.py

ADDED


@@ -0,0 +1,94 @@


"""Exports a YOLOv5 *.pt model to ONNX and TorchScript formats

Usage:
    $ export PYTHONPATH="$PWD" && python models/export.py --weights ./weights/yolov5s.pt --img 640 --batch 1
"""

import argparse
import sys
import time

sys.path.append('./')  # to run '$ python *.py' files in subdirectories

import torch
import torch.nn as nn

from metadata.predictor_yolo_detector.models import common
from metadata.predictor_yolo_detector.models.experimental import attempt_load
from metadata.predictor_yolo_detector.utils.activations import Hardswish
from metadata.predictor_yolo_detector.utils.general import set_logging, check_img_size

if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--weights', type=str, default='./yolov5s.pt', help='weights path')  # from yolov5/models/
    parser.add_argument('--img-size', nargs='+', type=int, default=[640, 640], help='image size')  # height, width
    parser.add_argument('--batch-size', type=int, default=1, help='batch size')
    opt = parser.parse_args()
    opt.img_size *= 2 if len(opt.img_size) == 1 else 1  # expand
    print(opt)
    set_logging()
    t = time.time()

    # Load PyTorch model
    model = attempt_load(opt.weights, map_location=torch.device('cpu'))  # load FP32 model
    labels = model.names

    # Checks
    gs = int(max(model.stride))  # grid size (max stride)
    opt.img_size = [check_img_size(x, gs) for x in opt.img_size]  # verify img_size are gs-multiples

    # Input
    img = torch.zeros(opt.batch_size, 3, *opt.img_size)  # image size(1,3,320,192) iDetection

    # Update model
    for k, m in model.named_modules():
        m._non_persistent_buffers_set = set()  # pytorch 1.6.0 compatibility
        if isinstance(m, common.Conv) and isinstance(m.act, nn.Hardswish):
            m.act = Hardswish()  # assign activation
        # if isinstance(m, models.yolo.Detect):
        #     m.forward = m.forward_export  # assign forward (optional)
    model.model[-1].export = True  # set Detect() layer export=True
    y = model(img)  # dry run

    # TorchScript export
    try:
        print('\nStarting TorchScript export with torch %s...' % torch.__version__)
        f = opt.weights.replace('.pt', '.torchscript.pt')  # filename
        ts = torch.jit.trace(model, img)
        ts.save(f)
        print('TorchScript export success, saved as %s' % f)
    except Exception as e:
        print('TorchScript export failure: %s' % e)

    # ONNX export
    try:
        import onnx

        print('\nStarting ONNX export with onnx %s...' % onnx.__version__)
        f = opt.weights.replace('.pt', '.onnx')  # filename
        torch.onnx.export(model, img, f, verbose=False, opset_version=12, input_names=['images'],
                          output_names=['classes', 'boxes'] if y is None else ['output'])

        # Checks
        onnx_model = onnx.load(f)  # load onnx model
        onnx.checker.check_model(onnx_model)  # check onnx model
        # print(onnx.helper.printable_graph(onnx_model.graph))  # print a human readable model
        print('ONNX export success, saved as %s' % f)
    except Exception as e:
        print('ONNX export failure: %s' % e)

    # CoreML export
    try:
        import coremltools as ct

        print('\nStarting CoreML export with coremltools %s...' % ct.__version__)
        # convert model from torchscript and apply pixel scaling as per detect.py
        model = ct.convert(ts, inputs=[ct.ImageType(name='image', shape=img.shape, scale=1 / 255.0, bias=[0, 0, 0])])
        f = opt.weights.replace('.pt', '.mlmodel')  # filename
        model.save(f)
        print('CoreML export success, saved as %s' % f)
    except Exception as e:
        print('CoreML export failure: %s' % e)

    # Finish
    print('\nExport complete (%.2fs). Visualize with https://github.com/lutzroeder/netron.' % (time.time() - t))
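The `--img-size` handling in the export script is easy to misread. `opt.img_size *= 2 if len(opt.img_size) == 1 else 1` parses as `opt.img_size *= (2 if ... else 1)`, so a single value is duplicated into `[height, width]` by list repetition. Each side then goes through `check_img_size` with the model's largest stride. The sketch below imitates that flow in plain Python. It assumes `check_img_size` rounds up to the next multiple of the stride, as upstream YOLOv5's version does; that helper lives in `utils/general.py`, outside this diff. `export_input_shape` is a hypothetical name.

```python
import math


def check_img_size(img_size, s=32):
    """Assumed behaviour: round img_size up to the nearest multiple of stride s."""
    return math.ceil(img_size / s) * s


def export_input_shape(img_size, batch_size=1, max_stride=32):
    """Shape of the dummy input tensor the export script traces with: (batch, 3, height, width)."""
    img_size = list(img_size)
    img_size *= 2 if len(img_size) == 1 else 1  # one value -> [v, v]
    img_size = [check_img_size(x, max_stride) for x in img_size]
    return (batch_size, 3, *img_size)


print(export_input_shape([640]))       # (1, 3, 640, 640)
print(export_input_shape([320, 190]))  # (1, 3, 320, 192)
```

The second call shows why the script's input comment mentions `(1,3,320,192)`: a width of 190 is not a multiple of 32, so it is rounded up to 192 before tracing.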


metadata/predictor_yolo_detector/models/hub/yolov3-spp.yaml

ADDED


@@ -0,0 +1,51 @@
