Upload folder using huggingface_hub

Browse files- .DS_Store +0 -0

- .gitattributes +1 -0

- 1_Pooling/config.json +10 -0

- 2_Dense/config.json +1 -0

- 2_Dense/model.safetensors +3 -0

- README.md +159 -3

- added_tokens.json +28 -0

- assets/.DS_Store +0 -0

- assets/1.png +0 -0

- config.json +33 -0

- config_sentence_transformers.json +10 -0

- merges.txt +0 -0

- model-00001-of-00004.safetensors +3 -0

- model-00002-of-00004.safetensors +3 -0

- model-00003-of-00004.safetensors +3 -0

- model-00004-of-00004.safetensors +3 -0

- model.safetensors.index.json +405 -0

- modeling_qzhou.py +664 -0

- modules.json +20 -0

- sentence_bert_config.json +4 -0

- special_tokens_map.json +31 -0

- tokenizer.json +3 -0

- tokenizer_config.json +246 -0

- vocab.json +0 -0

.DS_Store

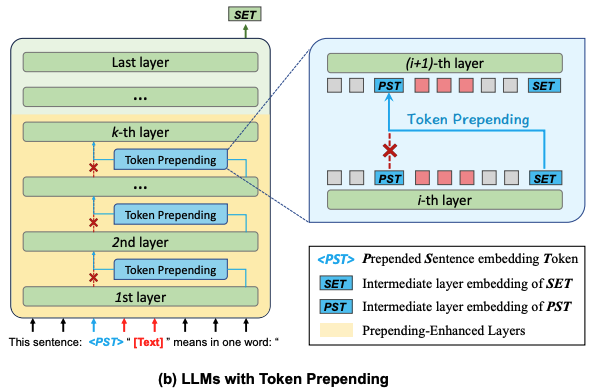

ADDED

|

Binary file (6.15 kB). View file

|

|

|

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

tokenizer.json filter=lfs diff=lfs merge=lfs -text

|

1_Pooling/config.json

ADDED

|

@@ -0,0 +1,10 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"word_embedding_dimension": 4096,

|

| 3 |

+

"pooling_mode_cls_token": false,

|

| 4 |

+

"pooling_mode_mean_tokens": false,

|

| 5 |

+

"pooling_mode_max_tokens": false,

|

| 6 |

+

"pooling_mode_mean_sqrt_len_tokens": false,

|

| 7 |

+

"pooling_mode_weightedmean_tokens": false,

|

| 8 |

+

"pooling_mode_lasttoken": true,

|

| 9 |

+

"include_prompt": true

|

| 10 |

+

}

|

2_Dense/config.json

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

{"in_features": 4096, "out_features": 1792, "bias": true, "activation_function": "torch.nn.modules.linear.Identity"}

|

2_Dense/model.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:101b92dd892283cdea788128b1cf031941a3b5c35e2e1a483daab893c870b82c

|

| 3 |

+

size 14683816

|

README.md

CHANGED

|

@@ -1,3 +1,159 @@

|

|

| 1 |

-

---

|

| 2 |

-

license: apache-2.0

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

tags:

|

| 4 |

+

- sentence-transformers

|

| 5 |

+

- sentence-similarity

|

| 6 |

+

- mteb

|

| 7 |

+

- retriever

|

| 8 |

+

---

|

| 9 |

+

# QZhou-Embedding-Zh

|

| 10 |

+

|

| 11 |

+

## Introduction

|

| 12 |

+

We are pleased to announce the release of our new model, QZhou-Embedding-Zh, built upon the architecture and parameters of the Qwen3-8B base model, QZhou-Embedding-Zh was developed using the data construction and training methodology of QZhou-Embedding, and also incorporated MRL embedding inference.

|

| 13 |

+

|

| 14 |

+

## Key Enhancements and Optimizations

|

| 15 |

+

|

| 16 |

+

To build a more powerful and outstanding model, we have adopted proven approaches from QZhou-Embedding and further introduced the following optimizations:

|

| 17 |

+

|

| 18 |

+

1. **Based on Qwen3 Model:** In our practice with QZhou-Embedding, the Qwen3 base model did not show significant advantages over Qwen2.5-7B-Instruct in the first stage (Retrieval). However, notable improvements were observed in Chinese-language tasks, likely due to Qwen3’s stronger Chinese capabilities. We upgraded the base model to Qwen3-8B while retaining the original model architecture, using a last_token pooling strategy.

|

| 19 |

+

|

| 20 |

+

2. **Support for MRL:** MRL (Multi-Representation Learning) is highly demanded in practical applications, especially under high-concurrency and low-latency scenarios. Addressing the lack of MRL support in QZhou-Embedding, QZhou-Embedding-Zh now incorporates this feature with the following dimension options: "128, 256, 512, 768, 1024, 1280, 1536, 1792". The default output dimension is set to 1792.

|

| 21 |

+

|

| 22 |

+

3. **Token Prepending:** Originally proposed by Fu et al(ACL 2025, Volume 1: Long Papers, 3168–3181), this technique addresses the limitations of the unidirectional attention mechanism in decoder-only models. By prepending each layer’s decoded sentence embedding to the beginning of the sentence in the next layer’s input, allowing earlier tokens to attend to the complete sentence information under the causal attention mechanism, Token Prepending significantly improving performance in STS tasks and classification tasks. We retained the Stage-1 training strategy unchanged and integrated Token Prepending during Stage-2 training, using the PromptEOL template construction method described in their paper. Experimental results demonstrate that Token Prepending is not only a training-free enhancement but also further improves performance when fine-tuned with supervised datasets.

|

| 23 |

+

|

| 24 |

+

## Token Prepending

|

| 25 |

+

### Introduction

|

| 26 |

+

Token Prepending is a simple yet effective technique proposed by Fu et al., the core idea is prepending each layer’s decoded sentence embedding to the beginning of the sentence in the next layer’s input, allowing earlier tokens to attend to the complete sentence information under the causal attention mechanism. TP technique is a plug-and-play technique neither introduces new parameters nor alters the existing ones, allowing it to be seamlessly integrated with various prompt-based sentence embedding methods and autoregressive LLMs. The architecture described in the original paper is as follows:

|

| 27 |

+

<div align="center">

|

| 28 |

+

<img src="assets/1.png" width="600" height="400"></img>

|

| 29 |

+

</div>

|

| 30 |

+

|

| 31 |

+

### Our Adaptations and Optimizations

|

| 32 |

+

According to the conclusions presented in the original paper, TP technique is completely training-free and requires no extra learnable parameters, serving as a plug-and-play technique to improve the various prompt-based methods. Since QZhou-Embedding-Zh is built upon the Qwen3 base model—retaining its unidirectional attention mechanism and employing last_token pooling—it is ideally suited for the application of the TP technique. To further explore its potential, we conducted training utilizing the TP technique, building upon the Stage 1 retrieval base model through the following procedure:

|

| 33 |

+

1. We modified the model forward script by applying the TP specifically from layer-1 to layer-7(index), namely prepending the last embeddings to the input before processing through these layers;

|

| 34 |

+

2. For the input template design, we have integrated the PromptEOL template on top of the instruction-based input, using <|im_start|>as a placeholder—corresponding to the \<PST\> token in the original paper—to facilitate subsequent TP operations. The full template structure is designed as follows:

|

| 35 |

+

```

|

| 36 |

+

"This sentence: <|im_start|>“Instruct: [instruction]\nQuery: [user_input]” means in one word: “

|

| 37 |

+

```

|

| 38 |

+

3. Stage 2 training was conducted using the updated model architecture and input structure.

|

| 39 |

+

|

| 40 |

+

## Usage

|

| 41 |

+

To facilitate model inference and CMTEB result replication on your own machine, we provide detailed specifications for environmental dependencies and model implementation.

|

| 42 |

+

|

| 43 |

+

### Requirements

|

| 44 |

+

- Python: 3.10.12

|

| 45 |

+

- Sentence Transformers: 3.4.1

|

| 46 |

+

- Transformers: 4.51.1

|

| 47 |

+

- PyTorch: 2.4.1

|

| 48 |

+

- Accelerate: 1.3.0

|

| 49 |

+

- Datasets: 3.6.0

|

| 50 |

+

- Tokenizers: 0.21.1

|

| 51 |

+

- mteb: 1.38.30

|

| 52 |

+

|

| 53 |

+

### Quickstart

|

| 54 |

+

Since QZhou-Embedding-Zh incorporates a dedicated MRL linear projection module built on the sentence-transformers framework, we now only provide inference code specifically designed for sentence-transformers compatibility.

|

| 55 |

+

|

| 56 |

+

```

|

| 57 |

+

from sentence_transformers import SentenceTransformer

|

| 58 |

+

from sklearn.preprocessing import normalize

|

| 59 |

+

|

| 60 |

+

def get_prompteol_input(text: str) -> str:

|

| 61 |

+

return f"This sentence: <|im_start|>“{text}” means in one word: “"

|

| 62 |

+

|

| 63 |

+

def get_detailed_instruct(task_description: str, query: str) -> str:

|

| 64 |

+

return f'Instruct: {task_description}\nQuery:{query}'

|

| 65 |

+

|

| 66 |

+

model = SentenceTransformer(

|

| 67 |

+

"Kingsoft-LLM/QZhou-Embedding-Zh",

|

| 68 |

+

model_kwargs={"device_map": "cuda", "trust_remote_code": True},

|

| 69 |

+

tokenizer_kwargs={"padding_side": "left", "trust_remote_code": True},

|

| 70 |

+

trust_remote_code=True

|

| 71 |

+

)

|

| 72 |

+

|

| 73 |

+

task= "Given a web search query, retrieve relevant passages that answer the query"

|

| 74 |

+

queries = [

|

| 75 |

+

get_prompteol_input(get_detailed_instruct(task, "光合作用是什么?")),

|

| 76 |

+

get_prompteol_input(get_detailed_instruct(task, "电话是谁发明的?"))

|

| 77 |

+

]

|

| 78 |

+

|

| 79 |

+

documents = [

|

| 80 |

+

get_prompteol_input("光合作用是绿色植物利用阳光、二氧化碳和水生成葡萄糖和氧气的过程。这一生化反应发生在叶绿体中。"),

|

| 81 |

+

get_prompteol_input("亚历山大·格拉汉姆·贝尔(Alexander Graham Bell)因于1876年发明了第一台实用电话而广受认可,并为此设备获得了美国专利第174,465号。")

|

| 82 |

+

]

|

| 83 |

+

|

| 84 |

+

query_embeddings = model.encode(queries, normalize_embeddings=False)

|

| 85 |

+

document_embeddings = model.encode(documents, normalize_embeddings=False)

|

| 86 |

+

|

| 87 |

+

dim=1792 # 128, 256, 512, 768, 1024, 1280, 1536, 1792

|

| 88 |

+

query_embeddings = normalize(query_embeddings[:, :dim])

|

| 89 |

+

document_embeddings = normalize(document_embeddings[:, :dim])

|

| 90 |

+

|

| 91 |

+

similarity = model.similarity(query_embeddings, document_embeddings)

|

| 92 |

+

print(similarity)

|

| 93 |

+

```

|

| 94 |

+

|

| 95 |

+

### Completely replicate the benchmark results

|

| 96 |

+

|

| 97 |

+

```

|

| 98 |

+

normalize=true

|

| 99 |

+

use_instruction=true

|

| 100 |

+

export TOKENIZERS_PARALLELISM=true

|

| 101 |

+

embed_dim=1792 # 128, 256, 512, 768, 1024, 1280, 1536, 1792

|

| 102 |

+

|

| 103 |

+

model_name_or_path=<model dir>

|

| 104 |

+

|

| 105 |

+

python3 ./run_cmteb_all.py \

|

| 106 |

+

--model_name_or_path ${model_name_or_path} \

|

| 107 |

+

--normalize ${normalize} \

|

| 108 |

+

--dim ${embed_dim} \

|

| 109 |

+

--use_instruction ${use_instruction} \

|

| 110 |

+

--output_dir <output dir>

|

| 111 |

+

|

| 112 |

+

```

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

## Citation

|

| 116 |

+

If you find our work worth citing, please use the following citation:<br>

|

| 117 |

+

**Technical Report:**

|

| 118 |

+

```

|

| 119 |

+

@misc{yu2025qzhouembeddingtechnicalreport,

|

| 120 |

+

title={QZhou-Embedding Technical Report},

|

| 121 |

+

author={Peng Yu and En Xu and Bin Chen and Haibiao Chen and Yinfei Xu},

|

| 122 |

+

year={2025},

|

| 123 |

+

eprint={2508.21632},

|

| 124 |

+

archivePrefix={arXiv},

|

| 125 |

+

primaryClass={cs.CL},

|

| 126 |

+

url={https://arxiv.org/abs/2508.21632},

|

| 127 |

+

}

|

| 128 |

+

```

|

| 129 |

+

**Token Prepending: A Training-Free Approach for Eliciting Better Sentence Embeddings from LLMs:**

|

| 130 |

+

```

|

| 131 |

+

@inproceedings{fu-etal-2025-token,

|

| 132 |

+

title = "Token Prepending: A Training-Free Approach for Eliciting Better Sentence Embeddings from {LLM}s",

|

| 133 |

+

author = "Fu, Yuchen and

|

| 134 |

+

Cheng, Zifeng and

|

| 135 |

+

Jiang, Zhiwei and

|

| 136 |

+

Wang, Zhonghui and

|

| 137 |

+

Yin, Yafeng and

|

| 138 |

+

Li, Zhengliang and

|

| 139 |

+

Gu, Qing",

|

| 140 |

+

booktitle = "Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",

|

| 141 |

+

month = jul,

|

| 142 |

+

year = "2025",

|

| 143 |

+

publisher = "Association for Computational Linguistics",

|

| 144 |

+

url = "https://aclanthology.org/2025.acl-long.159/",

|

| 145 |

+

}

|

| 146 |

+

```

|

| 147 |

+

|

| 148 |

+

**Qwen3 Series:**

|

| 149 |

+

```

|

| 150 |

+

@misc{qwen3technicalreport,

|

| 151 |

+

title={Qwen3 Technical Report},

|

| 152 |

+

author={Qwen Team},

|

| 153 |

+

year={2025},

|

| 154 |

+

eprint={2505.09388},

|

| 155 |

+

archivePrefix={arXiv},

|

| 156 |

+

primaryClass={cs.CL},

|

| 157 |

+

url={https://arxiv.org/abs/2505.09388},

|

| 158 |

+

}

|

| 159 |

+

```

|

added_tokens.json

ADDED

|

@@ -0,0 +1,28 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"</think>": 151668,

|

| 3 |

+

"</tool_call>": 151658,

|

| 4 |

+

"</tool_response>": 151666,

|

| 5 |

+

"<think>": 151667,

|

| 6 |

+

"<tool_call>": 151657,

|

| 7 |

+

"<tool_response>": 151665,

|

| 8 |

+

"<|box_end|>": 151649,

|

| 9 |

+

"<|box_start|>": 151648,

|

| 10 |

+

"<|endoftext|>": 151643,

|

| 11 |

+

"<|file_sep|>": 151664,

|

| 12 |

+

"<|fim_middle|>": 151660,

|

| 13 |

+

"<|fim_pad|>": 151662,

|

| 14 |

+

"<|fim_prefix|>": 151659,

|

| 15 |

+

"<|fim_suffix|>": 151661,

|

| 16 |

+

"<|im_end|>": 151645,

|

| 17 |

+

"<|im_start|>": 151644,

|

| 18 |

+

"<|image_pad|>": 151655,

|

| 19 |

+

"<|object_ref_end|>": 151647,

|

| 20 |

+

"<|object_ref_start|>": 151646,

|

| 21 |

+

"<|quad_end|>": 151651,

|

| 22 |

+

"<|quad_start|>": 151650,

|

| 23 |

+

"<|repo_name|>": 151663,

|

| 24 |

+

"<|video_pad|>": 151656,

|

| 25 |

+

"<|vision_end|>": 151653,

|

| 26 |

+

"<|vision_pad|>": 151654,

|

| 27 |

+

"<|vision_start|>": 151652

|

| 28 |

+

}

|

assets/.DS_Store

ADDED

|

Binary file (6.15 kB). View file

|

|

|

assets/1.png

ADDED

|

config.json

ADDED

|

@@ -0,0 +1,33 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"architectures": [

|

| 3 |

+

"QZhouModel"

|

| 4 |

+

],

|

| 5 |

+

"attention_bias": false,

|

| 6 |

+

"attention_dropout": 0.0,

|

| 7 |

+

"auto_map": {

|

| 8 |

+

"AutoModel": "modeling_qzhou.QZhouModel"

|

| 9 |

+

},

|

| 10 |

+

"bos_token_id": 151643,

|

| 11 |

+

"eos_token_id": 151645,

|

| 12 |

+

"head_dim": 128,

|

| 13 |

+

"hidden_act": "silu",

|

| 14 |

+

"hidden_size": 4096,

|

| 15 |

+

"initializer_range": 0.02,

|

| 16 |

+

"intermediate_size": 12288,

|

| 17 |

+

"max_position_embeddings": 40960,

|

| 18 |

+

"max_window_layers": 36,

|

| 19 |

+

"model_type": "qwen3",

|

| 20 |

+

"num_attention_heads": 32,

|

| 21 |

+

"num_hidden_layers": 36,

|

| 22 |

+

"num_key_value_heads": 8,

|

| 23 |

+

"rms_norm_eps": 1e-06,

|

| 24 |

+

"rope_scaling": null,

|

| 25 |

+

"rope_theta": 1000000,

|

| 26 |

+

"sliding_window": null,

|

| 27 |

+

"tie_word_embeddings": false,

|

| 28 |

+

"torch_dtype": "bfloat16",

|

| 29 |

+

"transformers_version": "4.51.1",

|

| 30 |

+

"use_cache": true,

|

| 31 |

+

"use_sliding_window": false,

|

| 32 |

+

"vocab_size": 151936

|

| 33 |

+

}

|

config_sentence_transformers.json

ADDED

|

@@ -0,0 +1,10 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"__version__": {

|

| 3 |

+

"sentence_transformers": "3.4.1",

|

| 4 |

+

"transformers": "4.51.1",

|

| 5 |

+

"pytorch": "2.4.1+cu121"

|

| 6 |

+

},

|

| 7 |

+

"prompts": {},

|

| 8 |

+

"default_prompt_name": null,

|

| 9 |

+

"similarity_fn_name": "cosine"

|

| 10 |

+

}

|

merges.txt

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

model-00001-of-00004.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:9b3ccd3acd3e1f5a508f1bd46b18318a4a3fafa9d90d852fc614825a33da4415

|

| 3 |

+

size 4902257056

|

model-00002-of-00004.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:e35325a89c3985669f0f4e7260593795b4e7f6e0fdd181d68593d7b50cf5a08e

|

| 3 |

+

size 4915959512

|

model-00003-of-00004.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:50ae96454c69200c2f374ed3d3beb3f94739dc8970841eff4e8df8d30b958b40

|

| 3 |

+

size 4983067656

|

model-00004-of-00004.safetensors

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:d0e76ec8c207af961424def0386393b37051fab776b73c1091c8146c20903de3

|

| 3 |

+

size 335570376

|

model.safetensors.index.json

ADDED

|

@@ -0,0 +1,405 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"metadata": {

|

| 3 |

+

"total_size": 15136811008

|

| 4 |

+

},

|

| 5 |

+

"weight_map": {

|

| 6 |

+

"embed_tokens.weight": "model-00001-of-00004.safetensors",

|

| 7 |

+

"layers.0.input_layernorm.weight": "model-00001-of-00004.safetensors",

|

| 8 |

+

"layers.0.mlp.down_proj.weight": "model-00001-of-00004.safetensors",

|

| 9 |

+

"layers.0.mlp.gate_proj.weight": "model-00001-of-00004.safetensors",

|

| 10 |

+

"layers.0.mlp.up_proj.weight": "model-00001-of-00004.safetensors",

|

| 11 |

+

"layers.0.post_attention_layernorm.weight": "model-00001-of-00004.safetensors",

|

| 12 |

+

"layers.0.self_attn.k_norm.weight": "model-00001-of-00004.safetensors",

|

| 13 |

+

"layers.0.self_attn.k_proj.weight": "model-00001-of-00004.safetensors",

|

| 14 |

+

"layers.0.self_attn.o_proj.weight": "model-00001-of-00004.safetensors",

|

| 15 |

+

"layers.0.self_attn.q_norm.weight": "model-00001-of-00004.safetensors",

|

| 16 |

+

"layers.0.self_attn.q_proj.weight": "model-00001-of-00004.safetensors",

|

| 17 |

+

"layers.0.self_attn.v_proj.weight": "model-00001-of-00004.safetensors",

|

| 18 |

+

"layers.1.input_layernorm.weight": "model-00001-of-00004.safetensors",

|

| 19 |

+

"layers.1.mlp.down_proj.weight": "model-00001-of-00004.safetensors",

|

| 20 |

+

"layers.1.mlp.gate_proj.weight": "model-00001-of-00004.safetensors",

|

| 21 |

+

"layers.1.mlp.up_proj.weight": "model-00001-of-00004.safetensors",

|

| 22 |

+

"layers.1.post_attention_layernorm.weight": "model-00001-of-00004.safetensors",

|

| 23 |

+

"layers.1.self_attn.k_norm.weight": "model-00001-of-00004.safetensors",

|

| 24 |

+

"layers.1.self_attn.k_proj.weight": "model-00001-of-00004.safetensors",

|

| 25 |

+

"layers.1.self_attn.o_proj.weight": "model-00001-of-00004.safetensors",

|

| 26 |

+

"layers.1.self_attn.q_norm.weight": "model-00001-of-00004.safetensors",

|

| 27 |

+

"layers.1.self_attn.q_proj.weight": "model-00001-of-00004.safetensors",

|

| 28 |

+

"layers.1.self_attn.v_proj.weight": "model-00001-of-00004.safetensors",

|

| 29 |

+

"layers.10.input_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 30 |

+

"layers.10.mlp.down_proj.weight": "model-00002-of-00004.safetensors",

|

| 31 |

+

"layers.10.mlp.gate_proj.weight": "model-00002-of-00004.safetensors",

|

| 32 |

+

"layers.10.mlp.up_proj.weight": "model-00002-of-00004.safetensors",

|

| 33 |

+

"layers.10.post_attention_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 34 |

+

"layers.10.self_attn.k_norm.weight": "model-00002-of-00004.safetensors",

|

| 35 |

+

"layers.10.self_attn.k_proj.weight": "model-00002-of-00004.safetensors",

|

| 36 |

+

"layers.10.self_attn.o_proj.weight": "model-00002-of-00004.safetensors",

|

| 37 |

+

"layers.10.self_attn.q_norm.weight": "model-00002-of-00004.safetensors",

|

| 38 |

+

"layers.10.self_attn.q_proj.weight": "model-00002-of-00004.safetensors",

|

| 39 |

+

"layers.10.self_attn.v_proj.weight": "model-00002-of-00004.safetensors",

|

| 40 |

+

"layers.11.input_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 41 |

+

"layers.11.mlp.down_proj.weight": "model-00002-of-00004.safetensors",

|

| 42 |

+

"layers.11.mlp.gate_proj.weight": "model-00002-of-00004.safetensors",

|

| 43 |

+

"layers.11.mlp.up_proj.weight": "model-00002-of-00004.safetensors",

|

| 44 |

+

"layers.11.post_attention_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 45 |

+

"layers.11.self_attn.k_norm.weight": "model-00002-of-00004.safetensors",

|

| 46 |

+

"layers.11.self_attn.k_proj.weight": "model-00002-of-00004.safetensors",

|

| 47 |

+

"layers.11.self_attn.o_proj.weight": "model-00002-of-00004.safetensors",

|

| 48 |

+

"layers.11.self_attn.q_norm.weight": "model-00002-of-00004.safetensors",

|

| 49 |

+

"layers.11.self_attn.q_proj.weight": "model-00002-of-00004.safetensors",

|

| 50 |

+

"layers.11.self_attn.v_proj.weight": "model-00002-of-00004.safetensors",

|

| 51 |

+

"layers.12.input_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 52 |

+

"layers.12.mlp.down_proj.weight": "model-00002-of-00004.safetensors",

|

| 53 |

+

"layers.12.mlp.gate_proj.weight": "model-00002-of-00004.safetensors",

|

| 54 |

+

"layers.12.mlp.up_proj.weight": "model-00002-of-00004.safetensors",

|

| 55 |

+

"layers.12.post_attention_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 56 |

+

"layers.12.self_attn.k_norm.weight": "model-00002-of-00004.safetensors",

|

| 57 |

+

"layers.12.self_attn.k_proj.weight": "model-00002-of-00004.safetensors",

|

| 58 |

+

"layers.12.self_attn.o_proj.weight": "model-00002-of-00004.safetensors",

|

| 59 |

+

"layers.12.self_attn.q_norm.weight": "model-00002-of-00004.safetensors",

|

| 60 |

+

"layers.12.self_attn.q_proj.weight": "model-00002-of-00004.safetensors",

|

| 61 |

+

"layers.12.self_attn.v_proj.weight": "model-00002-of-00004.safetensors",

|

| 62 |

+

"layers.13.input_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 63 |

+

"layers.13.mlp.down_proj.weight": "model-00002-of-00004.safetensors",

|

| 64 |

+

"layers.13.mlp.gate_proj.weight": "model-00002-of-00004.safetensors",

|

| 65 |

+

"layers.13.mlp.up_proj.weight": "model-00002-of-00004.safetensors",

|

| 66 |

+

"layers.13.post_attention_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 67 |

+

"layers.13.self_attn.k_norm.weight": "model-00002-of-00004.safetensors",

|

| 68 |

+

"layers.13.self_attn.k_proj.weight": "model-00002-of-00004.safetensors",

|

| 69 |

+

"layers.13.self_attn.o_proj.weight": "model-00002-of-00004.safetensors",

|

| 70 |

+

"layers.13.self_attn.q_norm.weight": "model-00002-of-00004.safetensors",

|

| 71 |

+

"layers.13.self_attn.q_proj.weight": "model-00002-of-00004.safetensors",

|

| 72 |

+

"layers.13.self_attn.v_proj.weight": "model-00002-of-00004.safetensors",

|

| 73 |

+

"layers.14.input_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 74 |

+

"layers.14.mlp.down_proj.weight": "model-00002-of-00004.safetensors",

|

| 75 |

+

"layers.14.mlp.gate_proj.weight": "model-00002-of-00004.safetensors",

|

| 76 |

+

"layers.14.mlp.up_proj.weight": "model-00002-of-00004.safetensors",

|

| 77 |

+

"layers.14.post_attention_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 78 |

+

"layers.14.self_attn.k_norm.weight": "model-00002-of-00004.safetensors",

|

| 79 |

+

"layers.14.self_attn.k_proj.weight": "model-00002-of-00004.safetensors",

|

| 80 |

+

"layers.14.self_attn.o_proj.weight": "model-00002-of-00004.safetensors",

|

| 81 |

+

"layers.14.self_attn.q_norm.weight": "model-00002-of-00004.safetensors",

|

| 82 |

+

"layers.14.self_attn.q_proj.weight": "model-00002-of-00004.safetensors",

|

| 83 |

+

"layers.14.self_attn.v_proj.weight": "model-00002-of-00004.safetensors",

|

| 84 |

+

"layers.15.input_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 85 |

+

"layers.15.mlp.down_proj.weight": "model-00002-of-00004.safetensors",

|

| 86 |

+

"layers.15.mlp.gate_proj.weight": "model-00002-of-00004.safetensors",

|

| 87 |

+

"layers.15.mlp.up_proj.weight": "model-00002-of-00004.safetensors",

|

| 88 |

+

"layers.15.post_attention_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 89 |

+

"layers.15.self_attn.k_norm.weight": "model-00002-of-00004.safetensors",

|

| 90 |

+

"layers.15.self_attn.k_proj.weight": "model-00002-of-00004.safetensors",

|

| 91 |

+

"layers.15.self_attn.o_proj.weight": "model-00002-of-00004.safetensors",

|

| 92 |

+

"layers.15.self_attn.q_norm.weight": "model-00002-of-00004.safetensors",

|

| 93 |

+

"layers.15.self_attn.q_proj.weight": "model-00002-of-00004.safetensors",

|

| 94 |

+

"layers.15.self_attn.v_proj.weight": "model-00002-of-00004.safetensors",

|

| 95 |

+

"layers.16.input_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 96 |

+

"layers.16.mlp.down_proj.weight": "model-00002-of-00004.safetensors",

|

| 97 |

+

"layers.16.mlp.gate_proj.weight": "model-00002-of-00004.safetensors",

|

| 98 |

+

"layers.16.mlp.up_proj.weight": "model-00002-of-00004.safetensors",

|

| 99 |

+

"layers.16.post_attention_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 100 |

+

"layers.16.self_attn.k_norm.weight": "model-00002-of-00004.safetensors",

|

| 101 |

+

"layers.16.self_attn.k_proj.weight": "model-00002-of-00004.safetensors",

|

| 102 |

+

"layers.16.self_attn.o_proj.weight": "model-00002-of-00004.safetensors",

|

| 103 |

+

"layers.16.self_attn.q_norm.weight": "model-00002-of-00004.safetensors",

|

| 104 |

+

"layers.16.self_attn.q_proj.weight": "model-00002-of-00004.safetensors",

|

| 105 |

+

"layers.16.self_attn.v_proj.weight": "model-00002-of-00004.safetensors",

|

| 106 |

+

"layers.17.input_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 107 |

+

"layers.17.mlp.down_proj.weight": "model-00002-of-00004.safetensors",

|

| 108 |

+

"layers.17.mlp.gate_proj.weight": "model-00002-of-00004.safetensors",

|

| 109 |

+

"layers.17.mlp.up_proj.weight": "model-00002-of-00004.safetensors",

|

| 110 |

+

"layers.17.post_attention_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 111 |

+

"layers.17.self_attn.k_norm.weight": "model-00002-of-00004.safetensors",

|

| 112 |

+

"layers.17.self_attn.k_proj.weight": "model-00002-of-00004.safetensors",

|

| 113 |

+

"layers.17.self_attn.o_proj.weight": "model-00002-of-00004.safetensors",

|

| 114 |

+

"layers.17.self_attn.q_norm.weight": "model-00002-of-00004.safetensors",

|

| 115 |

+

"layers.17.self_attn.q_proj.weight": "model-00002-of-00004.safetensors",

|

| 116 |

+

"layers.17.self_attn.v_proj.weight": "model-00002-of-00004.safetensors",

|

| 117 |

+

"layers.18.input_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 118 |

+

"layers.18.mlp.down_proj.weight": "model-00002-of-00004.safetensors",

|

| 119 |

+

"layers.18.mlp.gate_proj.weight": "model-00002-of-00004.safetensors",

|

| 120 |

+

"layers.18.mlp.up_proj.weight": "model-00002-of-00004.safetensors",

|

| 121 |

+

"layers.18.post_attention_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 122 |

+

"layers.18.self_attn.k_norm.weight": "model-00002-of-00004.safetensors",

|

| 123 |

+

"layers.18.self_attn.k_proj.weight": "model-00002-of-00004.safetensors",

|

| 124 |

+

"layers.18.self_attn.o_proj.weight": "model-00002-of-00004.safetensors",

|

| 125 |

+

"layers.18.self_attn.q_norm.weight": "model-00002-of-00004.safetensors",

|

| 126 |

+

"layers.18.self_attn.q_proj.weight": "model-00002-of-00004.safetensors",

|

| 127 |

+

"layers.18.self_attn.v_proj.weight": "model-00002-of-00004.safetensors",

|

| 128 |

+

"layers.19.input_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 129 |

+

"layers.19.mlp.down_proj.weight": "model-00002-of-00004.safetensors",

|

| 130 |

+

"layers.19.mlp.gate_proj.weight": "model-00002-of-00004.safetensors",

|

| 131 |

+

"layers.19.mlp.up_proj.weight": "model-00002-of-00004.safetensors",

|

| 132 |

+

"layers.19.post_attention_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 133 |

+

"layers.19.self_attn.k_norm.weight": "model-00002-of-00004.safetensors",

|

| 134 |

+

"layers.19.self_attn.k_proj.weight": "model-00002-of-00004.safetensors",

|

| 135 |

+

"layers.19.self_attn.o_proj.weight": "model-00002-of-00004.safetensors",

|

| 136 |

+

"layers.19.self_attn.q_norm.weight": "model-00002-of-00004.safetensors",

|

| 137 |

+

"layers.19.self_attn.q_proj.weight": "model-00002-of-00004.safetensors",

|

| 138 |

+

"layers.19.self_attn.v_proj.weight": "model-00002-of-00004.safetensors",

|

| 139 |

+

"layers.2.input_layernorm.weight": "model-00001-of-00004.safetensors",

|

| 140 |

+

"layers.2.mlp.down_proj.weight": "model-00001-of-00004.safetensors",

|

| 141 |

+

"layers.2.mlp.gate_proj.weight": "model-00001-of-00004.safetensors",

|

| 142 |

+

"layers.2.mlp.up_proj.weight": "model-00001-of-00004.safetensors",

|

| 143 |

+

"layers.2.post_attention_layernorm.weight": "model-00001-of-00004.safetensors",

|

| 144 |

+

"layers.2.self_attn.k_norm.weight": "model-00001-of-00004.safetensors",

|

| 145 |

+

"layers.2.self_attn.k_proj.weight": "model-00001-of-00004.safetensors",

|

| 146 |

+

"layers.2.self_attn.o_proj.weight": "model-00001-of-00004.safetensors",

|

| 147 |

+

"layers.2.self_attn.q_norm.weight": "model-00001-of-00004.safetensors",

|

| 148 |

+

"layers.2.self_attn.q_proj.weight": "model-00001-of-00004.safetensors",

|

| 149 |

+

"layers.2.self_attn.v_proj.weight": "model-00001-of-00004.safetensors",

|

| 150 |

+

"layers.20.input_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 151 |

+

"layers.20.mlp.down_proj.weight": "model-00002-of-00004.safetensors",

|

| 152 |

+

"layers.20.mlp.gate_proj.weight": "model-00002-of-00004.safetensors",

|

| 153 |

+

"layers.20.mlp.up_proj.weight": "model-00002-of-00004.safetensors",

|

| 154 |

+

"layers.20.post_attention_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 155 |

+

"layers.20.self_attn.k_norm.weight": "model-00002-of-00004.safetensors",

|

| 156 |

+

"layers.20.self_attn.k_proj.weight": "model-00002-of-00004.safetensors",

|

| 157 |

+

"layers.20.self_attn.o_proj.weight": "model-00002-of-00004.safetensors",

|

| 158 |

+

"layers.20.self_attn.q_norm.weight": "model-00002-of-00004.safetensors",

|

| 159 |

+

"layers.20.self_attn.q_proj.weight": "model-00002-of-00004.safetensors",

|

| 160 |

+

"layers.20.self_attn.v_proj.weight": "model-00002-of-00004.safetensors",

|

| 161 |

+

"layers.21.input_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 162 |

+

"layers.21.mlp.down_proj.weight": "model-00002-of-00004.safetensors",

|

| 163 |

+

"layers.21.mlp.gate_proj.weight": "model-00002-of-00004.safetensors",

|

| 164 |

+

"layers.21.mlp.up_proj.weight": "model-00002-of-00004.safetensors",

|

| 165 |

+

"layers.21.post_attention_layernorm.weight": "model-00002-of-00004.safetensors",

|

| 166 |

+

"layers.21.self_attn.k_norm.weight": "model-00002-of-00004.safetensors",

|

| 167 |

+

"layers.21.self_attn.k_proj.weight": "model-00002-of-00004.safetensors",

|

| 168 |

+

"layers.21.self_attn.o_proj.weight": "model-00002-of-00004.safetensors",

|

| 169 |

+

"layers.21.self_attn.q_norm.weight": "model-00002-of-00004.safetensors",

|

| 170 |

+

"layers.21.self_attn.q_proj.weight": "model-00002-of-00004.safetensors",

|

| 171 |

+

"layers.21.self_attn.v_proj.weight": "model-00002-of-00004.safetensors",

|

| 172 |

+

"layers.22.input_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 173 |

+

"layers.22.mlp.down_proj.weight": "model-00003-of-00004.safetensors",

|

| 174 |

+

"layers.22.mlp.gate_proj.weight": "model-00003-of-00004.safetensors",

|

| 175 |

+

"layers.22.mlp.up_proj.weight": "model-00003-of-00004.safetensors",

|

| 176 |

+

"layers.22.post_attention_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 177 |

+

"layers.22.self_attn.k_norm.weight": "model-00002-of-00004.safetensors",

|

| 178 |

+

"layers.22.self_attn.k_proj.weight": "model-00002-of-00004.safetensors",

|

| 179 |

+

"layers.22.self_attn.o_proj.weight": "model-00002-of-00004.safetensors",

|

| 180 |

+

"layers.22.self_attn.q_norm.weight": "model-00002-of-00004.safetensors",

|

| 181 |

+

"layers.22.self_attn.q_proj.weight": "model-00002-of-00004.safetensors",

|

| 182 |

+

"layers.22.self_attn.v_proj.weight": "model-00002-of-00004.safetensors",

|

| 183 |

+

"layers.23.input_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 184 |

+

"layers.23.mlp.down_proj.weight": "model-00003-of-00004.safetensors",

|

| 185 |

+

"layers.23.mlp.gate_proj.weight": "model-00003-of-00004.safetensors",

|

| 186 |

+

"layers.23.mlp.up_proj.weight": "model-00003-of-00004.safetensors",

|

| 187 |

+

"layers.23.post_attention_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 188 |

+

"layers.23.self_attn.k_norm.weight": "model-00003-of-00004.safetensors",

|

| 189 |

+

"layers.23.self_attn.k_proj.weight": "model-00003-of-00004.safetensors",

|

| 190 |

+

"layers.23.self_attn.o_proj.weight": "model-00003-of-00004.safetensors",

|

| 191 |

+

"layers.23.self_attn.q_norm.weight": "model-00003-of-00004.safetensors",

|

| 192 |

+

"layers.23.self_attn.q_proj.weight": "model-00003-of-00004.safetensors",

|

| 193 |

+

"layers.23.self_attn.v_proj.weight": "model-00003-of-00004.safetensors",

|

| 194 |

+

"layers.24.input_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 195 |

+

"layers.24.mlp.down_proj.weight": "model-00003-of-00004.safetensors",

|

| 196 |

+

"layers.24.mlp.gate_proj.weight": "model-00003-of-00004.safetensors",

|

| 197 |

+

"layers.24.mlp.up_proj.weight": "model-00003-of-00004.safetensors",

|

| 198 |

+

"layers.24.post_attention_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 199 |

+

"layers.24.self_attn.k_norm.weight": "model-00003-of-00004.safetensors",

|

| 200 |

+

"layers.24.self_attn.k_proj.weight": "model-00003-of-00004.safetensors",

|

| 201 |

+

"layers.24.self_attn.o_proj.weight": "model-00003-of-00004.safetensors",

|

| 202 |

+

"layers.24.self_attn.q_norm.weight": "model-00003-of-00004.safetensors",

|

| 203 |

+

"layers.24.self_attn.q_proj.weight": "model-00003-of-00004.safetensors",

|

| 204 |

+

"layers.24.self_attn.v_proj.weight": "model-00003-of-00004.safetensors",

|

| 205 |

+

"layers.25.input_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 206 |

+

"layers.25.mlp.down_proj.weight": "model-00003-of-00004.safetensors",

|

| 207 |

+

"layers.25.mlp.gate_proj.weight": "model-00003-of-00004.safetensors",

|

| 208 |

+

"layers.25.mlp.up_proj.weight": "model-00003-of-00004.safetensors",

|

| 209 |

+

"layers.25.post_attention_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 210 |

+

"layers.25.self_attn.k_norm.weight": "model-00003-of-00004.safetensors",

|

| 211 |

+

"layers.25.self_attn.k_proj.weight": "model-00003-of-00004.safetensors",

|

| 212 |

+

"layers.25.self_attn.o_proj.weight": "model-00003-of-00004.safetensors",

|

| 213 |

+

"layers.25.self_attn.q_norm.weight": "model-00003-of-00004.safetensors",

|

| 214 |

+

"layers.25.self_attn.q_proj.weight": "model-00003-of-00004.safetensors",

|

| 215 |

+

"layers.25.self_attn.v_proj.weight": "model-00003-of-00004.safetensors",

|

| 216 |

+

"layers.26.input_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 217 |

+

"layers.26.mlp.down_proj.weight": "model-00003-of-00004.safetensors",

|

| 218 |

+

"layers.26.mlp.gate_proj.weight": "model-00003-of-00004.safetensors",

|

| 219 |

+

"layers.26.mlp.up_proj.weight": "model-00003-of-00004.safetensors",

|

| 220 |

+

"layers.26.post_attention_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 221 |

+

"layers.26.self_attn.k_norm.weight": "model-00003-of-00004.safetensors",

|

| 222 |

+

"layers.26.self_attn.k_proj.weight": "model-00003-of-00004.safetensors",

|

| 223 |

+

"layers.26.self_attn.o_proj.weight": "model-00003-of-00004.safetensors",

|

| 224 |

+

"layers.26.self_attn.q_norm.weight": "model-00003-of-00004.safetensors",

|

| 225 |

+

"layers.26.self_attn.q_proj.weight": "model-00003-of-00004.safetensors",

|

| 226 |

+

"layers.26.self_attn.v_proj.weight": "model-00003-of-00004.safetensors",

|

| 227 |

+

"layers.27.input_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 228 |

+

"layers.27.mlp.down_proj.weight": "model-00003-of-00004.safetensors",

|

| 229 |

+

"layers.27.mlp.gate_proj.weight": "model-00003-of-00004.safetensors",

|

| 230 |

+

"layers.27.mlp.up_proj.weight": "model-00003-of-00004.safetensors",

|

| 231 |

+

"layers.27.post_attention_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 232 |

+

"layers.27.self_attn.k_norm.weight": "model-00003-of-00004.safetensors",

|

| 233 |

+

"layers.27.self_attn.k_proj.weight": "model-00003-of-00004.safetensors",

|

| 234 |

+

"layers.27.self_attn.o_proj.weight": "model-00003-of-00004.safetensors",

|

| 235 |

+

"layers.27.self_attn.q_norm.weight": "model-00003-of-00004.safetensors",

|

| 236 |

+

"layers.27.self_attn.q_proj.weight": "model-00003-of-00004.safetensors",

|

| 237 |

+

"layers.27.self_attn.v_proj.weight": "model-00003-of-00004.safetensors",

|

| 238 |

+

"layers.28.input_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 239 |

+

"layers.28.mlp.down_proj.weight": "model-00003-of-00004.safetensors",

|

| 240 |

+

"layers.28.mlp.gate_proj.weight": "model-00003-of-00004.safetensors",

|

| 241 |

+

"layers.28.mlp.up_proj.weight": "model-00003-of-00004.safetensors",

|

| 242 |

+

"layers.28.post_attention_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 243 |

+

"layers.28.self_attn.k_norm.weight": "model-00003-of-00004.safetensors",

|

| 244 |

+

"layers.28.self_attn.k_proj.weight": "model-00003-of-00004.safetensors",

|

| 245 |

+

"layers.28.self_attn.o_proj.weight": "model-00003-of-00004.safetensors",

|

| 246 |

+

"layers.28.self_attn.q_norm.weight": "model-00003-of-00004.safetensors",

|

| 247 |

+

"layers.28.self_attn.q_proj.weight": "model-00003-of-00004.safetensors",

|

| 248 |

+

"layers.28.self_attn.v_proj.weight": "model-00003-of-00004.safetensors",

|

| 249 |

+

"layers.29.input_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 250 |

+

"layers.29.mlp.down_proj.weight": "model-00003-of-00004.safetensors",

|

| 251 |

+

"layers.29.mlp.gate_proj.weight": "model-00003-of-00004.safetensors",

|

| 252 |

+

"layers.29.mlp.up_proj.weight": "model-00003-of-00004.safetensors",

|

| 253 |

+

"layers.29.post_attention_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 254 |

+

"layers.29.self_attn.k_norm.weight": "model-00003-of-00004.safetensors",

|

| 255 |

+

"layers.29.self_attn.k_proj.weight": "model-00003-of-00004.safetensors",

|

| 256 |

+

"layers.29.self_attn.o_proj.weight": "model-00003-of-00004.safetensors",

|

| 257 |

+

"layers.29.self_attn.q_norm.weight": "model-00003-of-00004.safetensors",

|

| 258 |

+

"layers.29.self_attn.q_proj.weight": "model-00003-of-00004.safetensors",

|

| 259 |

+

"layers.29.self_attn.v_proj.weight": "model-00003-of-00004.safetensors",

|

| 260 |

+

"layers.3.input_layernorm.weight": "model-00001-of-00004.safetensors",

|

| 261 |

+

"layers.3.mlp.down_proj.weight": "model-00001-of-00004.safetensors",

|

| 262 |

+

"layers.3.mlp.gate_proj.weight": "model-00001-of-00004.safetensors",

|

| 263 |

+

"layers.3.mlp.up_proj.weight": "model-00001-of-00004.safetensors",

|

| 264 |

+

"layers.3.post_attention_layernorm.weight": "model-00001-of-00004.safetensors",

|

| 265 |

+

"layers.3.self_attn.k_norm.weight": "model-00001-of-00004.safetensors",

|

| 266 |

+

"layers.3.self_attn.k_proj.weight": "model-00001-of-00004.safetensors",

|

| 267 |

+

"layers.3.self_attn.o_proj.weight": "model-00001-of-00004.safetensors",

|

| 268 |

+

"layers.3.self_attn.q_norm.weight": "model-00001-of-00004.safetensors",

|

| 269 |

+

"layers.3.self_attn.q_proj.weight": "model-00001-of-00004.safetensors",

|

| 270 |

+

"layers.3.self_attn.v_proj.weight": "model-00001-of-00004.safetensors",

|

| 271 |

+

"layers.30.input_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 272 |

+

"layers.30.mlp.down_proj.weight": "model-00003-of-00004.safetensors",

|

| 273 |

+

"layers.30.mlp.gate_proj.weight": "model-00003-of-00004.safetensors",

|

| 274 |

+

"layers.30.mlp.up_proj.weight": "model-00003-of-00004.safetensors",

|

| 275 |

+

"layers.30.post_attention_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 276 |

+

"layers.30.self_attn.k_norm.weight": "model-00003-of-00004.safetensors",

|

| 277 |

+

"layers.30.self_attn.k_proj.weight": "model-00003-of-00004.safetensors",

|

| 278 |

+

"layers.30.self_attn.o_proj.weight": "model-00003-of-00004.safetensors",

|

| 279 |

+

"layers.30.self_attn.q_norm.weight": "model-00003-of-00004.safetensors",

|

| 280 |

+

"layers.30.self_attn.q_proj.weight": "model-00003-of-00004.safetensors",

|

| 281 |

+

"layers.30.self_attn.v_proj.weight": "model-00003-of-00004.safetensors",

|

| 282 |

+

"layers.31.input_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 283 |

+

"layers.31.mlp.down_proj.weight": "model-00003-of-00004.safetensors",

|

| 284 |

+

"layers.31.mlp.gate_proj.weight": "model-00003-of-00004.safetensors",

|

| 285 |

+

"layers.31.mlp.up_proj.weight": "model-00003-of-00004.safetensors",

|

| 286 |

+

"layers.31.post_attention_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 287 |

+

"layers.31.self_attn.k_norm.weight": "model-00003-of-00004.safetensors",

|

| 288 |

+

"layers.31.self_attn.k_proj.weight": "model-00003-of-00004.safetensors",

|

| 289 |

+

"layers.31.self_attn.o_proj.weight": "model-00003-of-00004.safetensors",

|

| 290 |

+

"layers.31.self_attn.q_norm.weight": "model-00003-of-00004.safetensors",

|

| 291 |

+

"layers.31.self_attn.q_proj.weight": "model-00003-of-00004.safetensors",

|

| 292 |

+

"layers.31.self_attn.v_proj.weight": "model-00003-of-00004.safetensors",

|

| 293 |

+

"layers.32.input_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 294 |

+

"layers.32.mlp.down_proj.weight": "model-00003-of-00004.safetensors",

|

| 295 |

+

"layers.32.mlp.gate_proj.weight": "model-00003-of-00004.safetensors",

|

| 296 |

+

"layers.32.mlp.up_proj.weight": "model-00003-of-00004.safetensors",

|

| 297 |

+

"layers.32.post_attention_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 298 |

+

"layers.32.self_attn.k_norm.weight": "model-00003-of-00004.safetensors",

|

| 299 |

+

"layers.32.self_attn.k_proj.weight": "model-00003-of-00004.safetensors",

|

| 300 |

+

"layers.32.self_attn.o_proj.weight": "model-00003-of-00004.safetensors",

|

| 301 |

+

"layers.32.self_attn.q_norm.weight": "model-00003-of-00004.safetensors",

|

| 302 |

+

"layers.32.self_attn.q_proj.weight": "model-00003-of-00004.safetensors",

|

| 303 |

+

"layers.32.self_attn.v_proj.weight": "model-00003-of-00004.safetensors",

|

| 304 |

+

"layers.33.input_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 305 |

+

"layers.33.mlp.down_proj.weight": "model-00003-of-00004.safetensors",

|

| 306 |

+

"layers.33.mlp.gate_proj.weight": "model-00003-of-00004.safetensors",

|

| 307 |

+

"layers.33.mlp.up_proj.weight": "model-00003-of-00004.safetensors",

|

| 308 |

+

"layers.33.post_attention_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 309 |

+

"layers.33.self_attn.k_norm.weight": "model-00003-of-00004.safetensors",

|

| 310 |

+

"layers.33.self_attn.k_proj.weight": "model-00003-of-00004.safetensors",

|

| 311 |

+

"layers.33.self_attn.o_proj.weight": "model-00003-of-00004.safetensors",

|

| 312 |

+

"layers.33.self_attn.q_norm.weight": "model-00003-of-00004.safetensors",

|

| 313 |

+

"layers.33.self_attn.q_proj.weight": "model-00003-of-00004.safetensors",

|

| 314 |

+

"layers.33.self_attn.v_proj.weight": "model-00003-of-00004.safetensors",

|

| 315 |

+

"layers.34.input_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 316 |

+

"layers.34.mlp.down_proj.weight": "model-00003-of-00004.safetensors",

|

| 317 |

+

"layers.34.mlp.gate_proj.weight": "model-00003-of-00004.safetensors",

|

| 318 |

+

"layers.34.mlp.up_proj.weight": "model-00003-of-00004.safetensors",

|

| 319 |

+

"layers.34.post_attention_layernorm.weight": "model-00003-of-00004.safetensors",

|

| 320 |

+

"layers.34.self_attn.k_norm.weight": "model-00003-of-00004.safetensors",

|

| 321 |

+

"layers.34.self_attn.k_proj.weight": "model-00003-of-00004.safetensors",

|

| 322 |

+

"layers.34.self_attn.o_proj.weight": "model-00003-of-00004.safetensors",

|

| 323 |

+

"layers.34.self_attn.q_norm.weight": "model-00003-of-00004.safetensors",

|

| 324 |

+

"layers.34.self_attn.q_proj.weight": "model-00003-of-00004.safetensors",

|

| 325 |

+

"layers.34.self_attn.v_proj.weight": "model-00003-of-00004.safetensors",

|

| 326 |

+

"layers.35.input_layernorm.weight": "model-00004-of-00004.safetensors",

|

| 327 |

+

"layers.35.mlp.down_proj.weight": "model-00004-of-00004.safetensors",

|

| 328 |

+

"layers.35.mlp.gate_proj.weight": "model-00004-of-00004.safetensors",

|

| 329 |

+

"layers.35.mlp.up_proj.weight": "model-00004-of-00004.safetensors",

|

| 330 |

+

"layers.35.post_attention_layernorm.weight": "model-00004-of-00004.safetensors",

|

| 331 |

+

"layers.35.self_attn.k_norm.weight": "model-00004-of-00004.safetensors",

|

| 332 |

+

"layers.35.self_attn.k_proj.weight": "model-00003-of-00004.safetensors",

|

| 333 |

+

"layers.35.self_attn.o_proj.weight": "model-00004-of-00004.safetensors",

|

| 334 |

+

"layers.35.self_attn.q_norm.weight": "model-00004-of-00004.safetensors",

|

| 335 |

+

"layers.35.self_attn.q_proj.weight": "model-00003-of-00004.safetensors",

|

| 336 |

+

"layers.35.self_attn.v_proj.weight": "model-00003-of-00004.safetensors",

|

| 337 |

+

"layers.4.input_layernorm.weight": "model-00001-of-00004.safetensors",

|

| 338 |

+

"layers.4.mlp.down_proj.weight": "model-00001-of-00004.safetensors",

|

| 339 |

+

"layers.4.mlp.gate_proj.weight": "model-00001-of-00004.safetensors",

|

| 340 |

+

"layers.4.mlp.up_proj.weight": "model-00001-of-00004.safetensors",

|

| 341 |

+

"layers.4.post_attention_layernorm.weight": "model-00001-of-00004.safetensors",

|

| 342 |

+

"layers.4.self_attn.k_norm.weight": "model-00001-of-00004.safetensors",

|

| 343 |

+

"layers.4.self_attn.k_proj.weight": "model-00001-of-00004.safetensors",

|

| 344 |

+

"layers.4.self_attn.o_proj.weight": "model-00001-of-00004.safetensors",

|

| 345 |

+

"layers.4.self_attn.q_norm.weight": "model-00001-of-00004.safetensors",

|

| 346 |

+

"layers.4.self_attn.q_proj.weight": "model-00001-of-00004.safetensors",

|

| 347 |

+

"layers.4.self_attn.v_proj.weight": "model-00001-of-00004.safetensors",

|

| 348 |

+

"layers.5.input_layernorm.weight": "model-00001-of-00004.safetensors",

|

| 349 |

+

"layers.5.mlp.down_proj.weight": "model-00001-of-00004.safetensors",

|

| 350 |

+

"layers.5.mlp.gate_proj.weight": "model-00001-of-00004.safetensors",

|

| 351 |

+

"layers.5.mlp.up_proj.weight": "model-00001-of-00004.safetensors",

|

| 352 |

+

"layers.5.post_attention_layernorm.weight": "model-00001-of-00004.safetensors",

|

| 353 |

+

"layers.5.self_attn.k_norm.weight": "model-00001-of-00004.safetensors",

|

| 354 |

+